by Judith Curry

There was some discussion of this topic in the context of Murry Salby’s talk, but it has been suggested that this topic deserves its own thread.

This post is motivated by the following email from Hal Doiron:

Hello Dr. Curry,

In my review of climate change literature related to atmospheric CO2 sources and sinks, I have run into a wide range of opinions and peer-reviewed research conclusions regarding the following specific question, which I think is central to the CAGW debate.

How long does CO2 from fossil fuel burning injected into the atmosphere remain in the atmosphere before it is removed by natural processes?

Sources of confusion in answering this question:

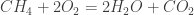

1. In response to one of my comments at Climate, Etc., Fred Moolten has claimed the answer to this question is about 100 years. http://judithcurry.com/2011/08/18/should-we-assess-climate-model-predictions-in-light-of-severe-tests/#comment-101642 “To focus on the most relevant element in this situation, CO2, the salient feature is the exceedingly long lifetime of any atmospheric excess we generate from anthropogenic emissions – there is no single decay curve, but the trajectory of decline toward equilibrium concentrations can be expressed as a rough average in the range of about 100 years, with a long tail lasting hundreds of millennia. In other words, the CO2 we emit tomorrow, or refrain from emitting, is not something we can take back if we later decide we shouldn’t have put it up there. It will warm us for centuries.”

2. From an ESRL NOAA website http://www.esrl.noaa.gov/gmd/education/faq_cat-1.html#17 , I found:

· What will happen to Earth’s climate if emissions of these greenhouse gases continue to rise?

Because human emissions of CO2 and other greenhouse gases continue to climb, and because they remain in the atmosphere for decades to centuries (depending on the gas), we’re committing ourselves to a warmer climate in the future. The IPCC projects an average global temperature increase of 2-6°F by 2100, and greater warming thereafter. Temperatures in some parts of the globe (e.g., the polar regions) are expected to rise even faster. Even the low end of the IPCC’s projected range represents a rate of climate change unprecedented in the past 10,000 years.

3. I believe that in his audio lecture at Climate, Etc., Dr. Murry Salby said his research led him to the conclusion that the atmospheric residence time of CO2 from fossil fuel burning emissions was only a few years. This was based on an investigation of trends in the ratio of Carbon 12 to Carbon 13 isotopes in the atmosphere.

4. There is previous published literature, also based on the ratio of Carbon 12 and Carbon 13 isotopes that Dr. Salby discussed, that concludes the atmospheric residence time of CO2 from fossil fuel burning is about 5 years. This literature is reviewed and cited by my former NASA colleague, Apollo 17 astronaut, and former US Senator, Dr. Harrison “Jack” Schmitt, in his essay on CO2 at: http://americasuncommonsense.com/blog/category/science-engineering/climate-change/4-carbon-dioxide/#r4_14

As I monitor the debate on CAGW, it seems to me that if this particular recommended thread topic could be settled with high confidence, then much of the CAGW alarm could be moderated and refocused on a broader range of climate change issues. I suggest it should also be a key research topic for further investigation in an attempt to answer the posed question with high confidence.

Sincerely,

Hal Doiron

JC comment: I don’t have a good answer to the question Hal raises. Below are some online references that I’ve spotted, from across the spectrum.

- Residence Time of Atmospheric CO2, H. Lam of Princeton

- Wikipedia

- SkepticalScience

- CO2 Science

- Appinsys

- Robert Essenhigh

And finally an exchange between Freeman Dyson and Robert May in the NY Review of Books:

I don’t have time to dig into this issue right now, so I’m throwing the topic open for discussion, hoping for some enlightenment (or at least confusion) from the Denizens.

Judith,

The Freeman Dyson/Robert May links are not working.

ok let me check

Only a few years. For the most part it’s even shorter – most of it is removed locally and immediately. Atmospheric CO2 is driven/determined by global climatic factors, whatever that means (SST, sea ice extent…). Think H2O.

Edim – I don’t see the relevance of that article to CO2 residence time. I read the full article, not just the abstract, and found it interesting in its analysis of ion-mediated nucleation rates involving sulfuric acid. It did not address the question of how many nuclei could be induced to grow to a size of climate significance for low cloud formation, although earlier data have shown this probably to be relatively small, and the results reported here are consistent with that possibility.

Some news:

http://www.nature.com/news/2011/110824/full/news.2011.504.html

http://www.nature.com/nature/journal/v476/n7361/full/nature10343.html

More news (sea level drop):

http://www.jpl.nasa.gov/news/news.cfm?release=2011-262

Not OT. It’s all connected.

My comment above was intended as a response to the “news” item you linked to.

Time to read up on cosmic rays and climate; see the latest edition of NATURE. The game is up.

Not latest edition anymore.

http://www.nature.com/news/2011/110824/full/news.2011.504.html

…and there are polarized views on cosmic rays and climate, just like there are polarized views on much to do with climate. At least CERN is doing an experiment in the lab.

Hal Doiron has written:

“As I monitor the debate on CAGW, it seems to me that if this particular recommended thread topic could be settled with high confidence, then much of the CAGW alarm could be moderated and refocused on a broader range of climate change issues.”

I find the abbreviation CAGW to be somewhat objectionable in its misrepresentation of mainstream views, but that is a minor quibble and peripheral to the main topic here. Rather, I would like to ask Hal Doiron a question, because I’m not sure how to interpret his statement.

Hal – At what duration for the residence time of excess CO2 would you perceive that interval to be long enough to be of concern? How many years specifically for an interval representing an average residence time for the excess? For clarity, I’m referring to the time necessary for an excess over an equilibrium concentration to return to baseline, where this can be approximated as a “half-life” although that would not be accurate in the formal sense because there is no single exponential decay curve.

If it takes X years for the excess concentration to decline halfway, what value of X would be worrisome for you?
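To make Fred's point about the lack of a single decay curve concrete, here is a minimal Python sketch that models the airborne excess as a constant long-tail fraction plus a sum of exponentials, and finds X, the time for the excess to decline halfway, by bisection. The coefficients (`LONG_TAIL`, `TERMS`) are hypothetical values chosen only for illustration; they are not fitted to any carbon cycle model.

```python
import math

# Hypothetical impulse-response coefficients, for illustration only:
# a constant long-tail fraction plus (fraction, e-folding time in years) terms.
LONG_TAIL = 0.2
TERMS = [(0.3, 4.0), (0.3, 30.0), (0.2, 300.0)]


def excess_remaining(t_years: float) -> float:
    """Fraction of an initial CO2 excess still airborne after t_years."""
    return LONG_TAIL + sum(a * math.exp(-t_years / tau) for a, tau in TERMS)


def time_to_fraction(target: float, t_max: float = 10_000.0) -> float:
    """Bisection for the time at which the remaining fraction drops to target.

    The response decreases monotonically, so bisection on [0, t_max] works
    as long as the target lies strictly between the long-tail floor and 1.
    """
    if not LONG_TAIL < target < 1.0:
        raise ValueError("target must lie between the long-tail floor and 1")
    lo, hi = 0.0, t_max
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if excess_remaining(mid) > target:
            lo = mid  # still above target: the crossing is later
        else:
            hi = mid  # at or below target: the crossing is earlier
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(f"Time for the excess to decline halfway: {time_to_fraction(0.5):.1f} years")
    for t in (10, 100, 1000):
        print(f"Remaining after {t:>4} years: {excess_remaining(t):.3f}")
```

With these made-up numbers the excess halves in a little under three decades, yet a fifth of it does not decay on these timescales at all, so a single "half-life" summarises such a curve poorly.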

Fred,

I believe there are clear, proven and well-known beneficial effects of CO2 in the atmosphere with regards to increased rates of plant growth, better crop yields, etc. needed to support the growing population of the planet. I don’t know what a harmful level of CO2 in the atmosphere would be. I have read that US submarines are allowed to have 8,000 ppm CO2 before there is any concern about health related effects (more than 20 times current levels). I don’t know what the optimum level of CO2 in the atmosphere would be, all things being considered, but it is somewhat likely that the level would be higher than it is now, and I have factored this into my thinking about the current situation.

If the atmospheric residence time of CO2 from human activity is closer to 5 years, as some scientists claim, than to the 100 or so years that you and NOAA apparently believe, then human-related CO2 is not accumulating in the atmosphere so fast (compared with the natural CO2 sources and sinks that I don’t know how to control) that I need to take immediate, potentially harmful action, with unknown and unintended consequences, to restrict human-related CO2 emissions, as many climate scientists have called for (compare your medical triage example in a previous thread on decision making with limited data). That is, if we can answer the question of the current thread closer to the 5-year mark, then we can all agree that we are not in a triage situation regarding control of CO2 emissions, and that we have more time to work the problem of climate change, perhaps with different decisions and action plans. After suggesting some “first steps”, in response to your request for such “first steps” in a previous thread http://judithcurry.com/2011/08/18/should-we-assess-climate-model-predictions-in-light-of-severe-tests/#comment-101642 , it occurred to me that answering the question of this thread could change the crisis atmosphere in which many climate scientists believe they are working, and which makes them worry so much about inaction in the face of the dire, but uncertain, predictions of their unvalidated models.

“If the human activity related CO2 atmospheric residence time is closer to 5 years as some scientists claim, and not the 100 or so years that you and NOAA apparently believe”

Again this is the elementary misunderstanding that Judith seems to make no effort to dispel. Both figures are correct. Individual molecules are exchanged on a timescale of five years or so. And the increase in total CO2 takes a century or more to go away.

It’s a bit like worrying about the Government printing money (OK, in pre-electronic days). In fact a huge number of notes are printed and destroyed each year. Any excess printed is small compared to circulation.

But circulation, like exchange of molecules, is just that. What counts is change in the aggregate.
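Nick's distinction between exchange and aggregate change can be illustrated with a minimal one-box sketch in Python. Stocks are normalised so that equilibrium is 1, and a 10% excess is added with every excess molecule "tagged", much like an isotopic label. Individual molecules leave at the gross rate set by `TAU_RES`, while the gross inflow is assumed to be just large enough that the net flux removes the excess on `TAU_ADJ`. Both time constants are hypothetical, chosen only to echo the 5-year and roughly 100-year figures in this thread, and the assumption that inflowing molecules are never tagged stands in for a large, well-mixed reservoir. A single exponential for the excess is itself a simplification.

```python
import math

# Hypothetical, illustrative time constants in years (not measured values).
TAU_RES = 5.0          # turnover of individual molecules: stock / gross outflow
TAU_ADJ = 100.0        # relaxation of the excess stock toward equilibrium
INITIAL_EXCESS = 0.1   # 10% above an equilibrium stock normalised to 1


def simulate(years: float, dt: float = 0.01):
    """Forward-Euler integration of the one-box model.

    Returns (fraction of tagged molecules still airborne,
             fraction of the excess still airborne).
    """
    equilibrium = 1.0
    stock = equilibrium + INITIAL_EXCESS
    tagged = INITIAL_EXCESS
    for _ in range(round(years / dt)):
        outflow = stock / TAU_RES
        # Gross inflow is set so that the net flux removes the excess on TAU_ADJ.
        inflow = outflow - (stock - equilibrium) / TAU_ADJ
        # Tagged molecules leave in proportion to their share of the stock;
        # inflow is assumed untagged, so tags never return.
        tagged -= outflow * (tagged / stock) * dt
        stock += (inflow - outflow) * dt
    return tagged / INITIAL_EXCESS, (stock - equilibrium) / INITIAL_EXCESS


if __name__ == "__main__":
    tagged, excess = simulate(20.0)
    print(f"tagged left: {tagged:.4f} (analytic {math.exp(-20 / TAU_RES):.4f})")
    print(f"excess left: {excess:.4f} (analytic {math.exp(-20 / TAU_ADJ):.4f})")
```

After 20 simulated years under 2% of the tagged molecules remain, while more than 80% of the excess is still there: the same atmosphere, described by two different definitions of "residence time".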

I share your surprise Nick as this is a fairly common and relatively easy-to-dispel source of confusion. Aren’t you a climate scientist Judith? If you can’t take the time to answer simple questions like this, then what is the point of your blog?

Like I said in my main post, I suspect that this whole issue is much more complex than you are making it out to be. Your question, while simple, does not have a simple answer, IMO.

While the particulars of estimating equilibrium response times are governed by multiple processes (as noted by Fred and Chris Colose downthread), it’s nevertheless trivial to point out that the atmospheric lifetime of an individual molecule isn’t the relevant issue…something which you bizarrely failed to do. Is your goal with this blog to sow confusion or dispel it?

The goal of the blog is to discuss scientifically relevant issues, which we are doing.

There’s again a mixture of simple things and less simple things.

That we have two different residence times is one of the simple things. Estimates precise enough to conclude that the increase in atmospheric CO2 over the last 50 years (and also over the last 100 years) is predominantly of human origin also belong to the rather simple things.

More precise estimates of the uptake of carbon from the atmosphere into the other reservoirs are no longer simple, because none of the subprocesses is accurately known. The balance between the surface ocean and the atmosphere is the best understood of all, but even that is strongly influenced by details of the pH buffering in the oceans.

One question on which I have not found data at the level I have been looking for is the role of the deep oceans as a reservoir. The total amount of carbon in the deep oceans is typically given as of the order of 40 000 GtC, which is 50 times the amount in the atmosphere. The role of that reservoir in uptake over long periods has not been discussed in the papers that I have found. Archer skips the discussion in his papers, stating only the overall conclusion that 20-35% of the CO2 remains until removed by sedimentation and weathering, and presents the 1990 model analysis of Maier-Reimer as a reference, which is not convincing. A Revelle factor of 10 would lead to a value of 17% once a balance has been reached with the ocean, but is the Revelle factor for the deep ocean 10? The value depends on the present pH and on the nature of the buffering. Reaching full balance with the deep oceans also takes a lot of time, but Archer seems to include that in the faster processes.
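The 17% figure can be reproduced with a back-of-envelope equilibrium calculation, sketched below in Python. It assumes the whole ocean eventually equilibrates with a single, constant Revelle factor R (a fractional change in atmospheric CO2 produces a fractional change 1/R as large in oceanic carbon), and takes ocean carbon to be 50 times atmospheric carbon, the ratio quoted above. Sedimentation, weathering and land uptake are ignored.

```python
# Ratio of ocean to atmospheric carbon, taken from the comment above.
OCEAN_TO_ATMOSPHERE = 50.0


def airborne_fraction(revelle: float, ocean_to_atm: float = OCEAN_TO_ATMOSPHERE) -> float:
    """Equilibrium share of an added CO2 pulse that stays in the atmosphere.

    With dO/O = (1/R) dA/A, the ocean takes up dO = (O/A) / R * dA.
    Since dA + dO equals the pulse, the airborne share is 1 / (1 + (O/A) / R).
    """
    return 1.0 / (1.0 + ocean_to_atm / revelle)


if __name__ == "__main__":
    for r in (1, 8, 10, 16, 19):
        print(f"R = {r:>2}: {100 * airborne_fraction(r):.1f}% stays airborne")
```

R = 1 corresponds to purely proportional uptake, where only about 2% would stay airborne; larger values, such as the 8 to 19 range mentioned elsewhere in this thread, leave progressively more in the atmosphere.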

On the other hand, I don’t see the importance of the level of the very long tail either. I would rather think that what happens after the excess CO2 concentration has dropped to one half of its peak value is not likely to be a problem for the further future of the Earth and the people of those periods. They may very well think that the decreasing trend is then a new problem, and the lesser problem the lower it is.

Search under the term “biological pump” and “carbon cycle”

It is really interesting stuff.

It is indeed. The partitions between the pumps (solubility and biological) are asymmetrically distributed: around 1/3 for the former and 2/3 for the latter.

The effects in perturbation experiments are interesting: if we shut the biological pump off completely, we can observe an increase from the preindustrial level of around 280 ppm to 450 ppm over similar timescales (e.g. Sarmiento et al. 2011).

A study of the decay of bomb-created radioisotopes

http://nzic.org.nz/CiNZ/articles/Currie_70_1.pdf

suggests a primary decay half-life of 13 years.
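Residence times elsewhere in this thread are usually quoted as e-folding times, while the bomb-test study quotes a half-life, so a conversion helps when comparing them. This minimal Python sketch assumes a single exponential decay, which the article's "primary decay" wording suggests holds only for the main phase of the curve.

```python
import math


def efolding_time(half_life: float) -> float:
    """Convert a half-life to an e-folding time, assuming single exponential decay."""
    return half_life / math.log(2.0)


def fraction_remaining(t: float, half_life: float) -> float:
    """Fraction of the initial signal left after time t."""
    return 0.5 ** (t / half_life)


if __name__ == "__main__":
    print(f"e-folding time: {efolding_time(13.0):.1f} years")
    for t in (13, 26, 50):
        print(f"after {t} years: {fraction_remaining(t, 13.0):.3f} remains")
```

A 13-year half-life corresponds to an e-folding time of roughly 19 years. Because bomb carbon is an isotopic tracer, its decline reflects the exchange of carbon between reservoirs rather than the removal of the total CO2 excess.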

A. E. Ames, it was only about four months before the background radiation levels moved to a new, sustained level (see: gamma energy range 10)

http://epa.gov/radnet/radnet-data/radnet-billings-bg.html

& does ‘who’s or what’s, make any difference when considering ‘half life’?

Tom, I don’t know for sure, but I expect that the “non replacement removal time” for all CO2 isotopes should be the same within the accuracy of any experiment to measure it. (Molecular weight can matter in packing effects and substrate interactions.) Thanks for the interesting radnet site.

Interesting answer, as Marlowe’s questions were:

1. Aren’t you a climate scientist Judith?

Obviously a more complex question than Marlowe thought and

2. If you can’t take the time to answer simple questions like this, then what is the point of your blog?

We HAVE been wondering about that.

Eli, you and Marlowe seem to be confusing a climate scientist with someone who parrots the IPCC consensus.

Eli was just pointing out that you are avoiding the questions. This appears to be a sensitive point.

I get 500 comments here per day. I try to do a post per day. Not to mention my two day jobs. I only answer comments/questions that I can respond to within 60 seconds, and I have to be selective in which ones I answer. If someone raises something really interesting, I might do an entire post on it. People that are trying to play “gotcha” with me and want to tell me how I should be doing my job (here or more generally) typically get ignored by me.

Judith,

The point at issue has nothing to do with any IPCC consensus. It is a matter of elementary physical chemistry. Freeman Dyson, hardly an IPCC parroter, set it out simply and explicitly in your second link:

“He says that the residence time of a molecule of carbon dioxide in the atmosphere is about a century, and I say it is about twelve years.

This discrepancy is easy to resolve. We are talking about different meanings of residence time. I am talking about residence without replacement. My residence time is the time that an average carbon dioxide molecule stays in the atmosphere before being absorbed by a plant. He is talking about residence with replacement. His residence time is the average time that a carbon dioxide molecule and its replacements stay in the atmosphere when, as usually happens, a molecule that is absorbed is replaced by another molecule emitted from another plant. “

If FD finds it easy to resolve (and it is) why is it so hard here?

Nick, atmospheric residence time is NOT a simple issue of physical chemistry! I didn’t see any physical chemistry at all in your argument.

Nick, if you agree with Freeman Dyson’s skepticism and it’s already resolved by him, then why do you keep asking?

Judith,

The elementary physical chemistry concept involved is dynamic equilibrium. The nett result of a forward and a back reaction. The time period quoted resulting from isotopes is the one-way process of CO2 molecules being absorbed by plants or whatever. As Dyson points out, there is a back reaction – that CO2 gets back into the atmosphere. If you want to know how total CO2 will diminish, you have to consider the result of both forward and back. That, as Dyson spells out, is the simple basis for the two different figures.

Nick, you are forgetting about the dynamics of the system, that is where the complexity lies

Couldn’t the same be said about the greenhouse effect, re complexity of the system?

Nick, you are thinking of it as a black box system. Some want to consider what is happening inside it, some may know it as a clear box. Why is this a hard concept for you?

Kermit,

I am not talking about any sorts of boxes. The issue is clear. Two different figures have been quoted for CO2 residence time. Hal and others ask whether the IPCC is wrong. The answer, as Dyson explained, is simple. It has nothing to do with dynamics, boxes or anything else. They are talking about different definitions. If someone wants to make an issue of it, they need to explain how the figures are comparable.

Which “dynamics” would those be, exactly?

If you’re talking about the carbon cycle, those would be dynamics about which, in your own words, you do not “have any expertise!”

Judith Curry: “The Earth’s carbon cycle is not a topic on which I have any expertise.”

Or has Murry taught you all about the carbon cycle, within the month of August?

Yes Nick, we understand there are 2 definitions being used for residence time. One is large and one is small, and both are valid. Which one sounds better to the IPCC? The larger, scarier one or the smaller one? This is exactly why you are going on and on about it. It’s just another shell game “trick” environmentalists use.

1. You expect people to answer rhetorical questions?

2. If this blog has no point, then why are there so many commenters?

Not that your opinions about the carbon cycle count for anything.

Judith Curry: “Your question, while simple, does not have a simple answer, IMO.”

With all due respect, you have already begged ignorance on this question and should “remain silent and thought a fool” rather than assert things you just don’t know, “and remove all doubt.” Really. Because by your own admission, you’re not qualified to make that judgment, as your opinion is not expert.

Judith Curry: “The Earth’s carbon cycle is not a topic on which I have any expertise.”

(emphasis added due to your repeated refusal, despite numerous requests, to offer any scientific justification of your assertion that “it is sufficiently important that we should start talking about these issues.“)

All that you can truthfully say now about the carbon cycle is that you do not understand it. Or, you can revise your previous statement that you do not “have any expertise.” That of course is up to you. But what you cannot do, at least not consistently (in case you care about that), is claim 100% ignorance while you’re promoting Salby, but then within the time of a month (hardly enough time to have become expert if you weren’t already!), declare with any authority that anybody else’s analysis of same is wrong.

You didn’t have the expertise to articulate any reason then that Salby’s analysis is right or even likely right, therefore you also don’t have the expertise now to say whether anybody else’s is wrong.

Not having “any expertise” on this question, it’s just the analysis you can provide right here, right now, versus theirs. To prove Eli, Marlowe and Nick wrong, you must show that at least one term they neglect, or the sum of terms they neglect in their analysis, have magnitude equal to (at a minimum) or greater than the terms that they include in their analysis. This is not a matter of opinion. As a scientist (former?), you know that “IMO” doesn’t cut it. Your opinion counts for nothing. Either you can show that other terms invalidate their analysis, or you cannot and in that case you have no basis to fault their analysis.

All humanity is divided into two classes; those who don’t understand the carbon cycle and those who don’t understand that they don’t understand the carbon cycle.

=============

No. It isn’t complex. Let me list a quick series of proofs:

1) The ice core record clearly shows that the recent increase in CO2 concentrations is, to use an overused word, unprecedented in the Holocene period, and, indeed, in the last 800,000 years, and non-ice core approaches show that the current CO2 may exceed levels seen for the last 20 million years (Tripati, Science, 2009).

But, maybe you choose to throw out paleoclimate records because… well, because they don’t fit your preconceived notions that human emissions are too small to be meaningful. So…

2) The Revelle factor: straightforward bicarbonate buffer chemistry known since 1957-1958 (Revelle & Suess 1957, Bolin and Eriksson 1958) shows that “a 10% increase in the CO2-content of the atmosphere need merely be balanced by an increase of about 1% of the total CO2 content in sea water” (Sabine et al., Science, 2004 estimate that this factor ranges from 8 to 16). This is the key element that means that the ocean CANNOT absorb all the CO2 proportionally the way that Henry’s law might suggest, and that therefore a sizable percentage of any CO2 emission will stay in the atmosphere for thousands of years until sedimentation processes have sufficient time to react (see Archer et al., Annu. Rev. Earth Planet. Sci. 2009, for a review of millennial processes). This is a key concept that many short residence timers have never figured out – they just look at the total gigatons of carbon in the ocean and figure that it can easily soak up any increase in the atmosphere, but they’re wrong.
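The quoted Revelle and Suess statement is the definition of the Revelle factor: the ratio of the fractional change in atmospheric CO2 to the fractional change in total CO2 dissolved in seawater. A minimal Python sketch of that arithmetic for small changes, using the quoted 10% and 1% and the 8 to 16 range attributed to Sabine et al.:

```python
def revelle_factor(frac_change_atm: float, frac_change_sea: float) -> float:
    """Fractional change in atmospheric CO2 divided by the fractional change
    in total seawater CO2 (valid for small perturbations)."""
    return frac_change_atm / frac_change_sea


def seawater_change(frac_change_atm: float, revelle: float) -> float:
    """Fractional rise in total seawater CO2 that balances a given atmospheric rise."""
    return frac_change_atm / revelle


if __name__ == "__main__":
    print(f"R from the Revelle & Suess example: {revelle_factor(0.10, 0.01):.1f}")
    for r in (8, 10, 16):
        print(f"R = {r:>2}: a 10% atmospheric rise is balanced by "
              f"{100 * seawater_change(0.10, r):.3f}% more carbon in seawater")
```

The larger R is, the smaller the relative rise in oceanic carbon that balances a given atmospheric increase.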

3) Carbon cycle models have done a pretty good job of explaining the difference between “residence time” and “adjustment time”, dating back at least as far as Rodhe and Björkström in 1979 (turns out that this decoupling is a result of this bicarbonate buffering). This (among other things) is what trips up people like Essenhigh and Segalstad.

4) Carbon cycle models also do a decent job of explaining trends in isotope levels in the atmosphere. Eg, Stuiver et al. Earth and Planetary Science Letters 1981, or Stuiver et al. GRL, 1998. See also, “Suess effect”. People like Salby and Spencer do cute little regressions, but they don’t have real physical models that actually show how and why concentrations and isotope levels have changed the way they have: nor do they test their regressions against existing carbon cycle models – if they did, they’d see that the existing models produce the right signatures. Essenhigh has a model, but first he handwaves the increase in concentrations based on a simplistic understanding of the CO2 solubility-temperature relationship (Revelle and Suess realized that the magnitude of this relationship was insufficient to explain observed CO2 changes back in 1957), and Essenhigh derives lifetimes for 12C and 14C that are different by a factor of more than THREE!!! I still can’t believe that any competent reviewer with a basic knowledge of chemistry could have let that pass – isotope separation is HARD (see Uranium separation): kinetic isotope separation for seawater is on the order of a couple tenths of a percent, and for plant matter is maybe a couple percent at best, so where does a factor of 300% come from?! (also, the carbon cycle field realized that the ocean wasn’t perfectly stirred at least 35 years ago: see Oeschger et al, Tellus, 1975 for an early example of switching to a more realistic diffusive model).

5) These things have been debunked before. I recommend O’Neill B, Measuring Time in the Greenhouse, Climatic Change, 1997.

Does this mean that we understand the carbon cycle, or that our models are perfect? Hardly. I recommend Dolman AJ; van der Werf GR; Ganssen G; Erisman JW; Strengers B, A Carbon Cycle Science Update Since IPCC AR-4, Ambio: A Journal of the Human Environment 39(5-6):402-412, 2010 as a review of what the actual interesting questions in carbon cycle science these days are.

So, to review: your suspicions are totally off-base. If this was a biology blog, this kind of post would be the equivalent of wondering whether the “missing link” disproves evolution. If it was an astronomy blog, it would be equivalent of giving press-space to the guys who claim that the double shadow from a flag prove that the Moon landing was faked. By sticking to this position that this is a scientifically relevant topic of discussion, your status as a “meta-expert” is cast in doubt since you are apparently not “able to distinguish a genuine expert from a pretender or a charlatan.” I grant you that you at least have figured out the Sky Dragons are charlatans – but, to go back to my astronomy blog analogy, that’s like figuring out the guys who claim the Moon is made of green cheese are charlatans. Given your impressive publication record, you should be able to do a lot better than this. And maybe this should make you wonder whether you are a little too hasty to give credence to the “not-IPCC” crowd. And yes, the IPCC is certainly not perfect, but that doesn’t mean that if the IPCC says that a clear mid-day sky looks blue, you should go around believing people who claim that actually it is more like a purple.

-M

(and yes, I do get frustrated at having to debunk stupid myths over and over and over again. There are plenty of real, interesting uncertainties regarding an issue as complex as climate change, and especially with regards to appropriate mitigation or adaptation measures, so it is really frustrating that the debate keeps getting bogged down in questions that were solved 50 years ago)

M,

The Appeal to Evolution is always a curiosity, but it’s irrelevant to questions of climate, even the 1,000,000th time it’s tried.

Andrew

It may be irrelevant to questions of climate, but it is very relevant to questions of self-delusion. I note that you did not address a single one of my technical arguments. I could also keep going:

6) The northern-hemisphere/southern-hemisphere gradient, and the relationship of that gradient to increasing CO2 emissions (see graph in IPCC AR4 Chapter 7).

7) The mass argument that many here have used before.

8) Surface ocean pH is falling faster than pH at depth (an indication of diffusion, and of which direction the CO2 is going).

I’d also point out Engelbeen’s webpage.

Anyway, have fun with your delusions,

-M

M,

would you like to point out the empirical data that were used to show the Revelle Factor??

OK, how about the experiments that were done??

Well, what have you got other than assertions??

Please copy from the papers copiously as I am very ignorant.

(and yes, I do get tired of having to debunk the same old tired Junk Science over and over again every time someone doesn’t actually read and COMPREHEND the papers they link.)

Good job, kuhnkat – recognizing your own ignorance is the first step to wisdom!

If you were to read Sabine et al., you’d find out that they used data from the World Ocean Circulation Experiment (WOCE) and the Joint Global Ocean Flux Study (JGOFS) to measure inorganic carbon. The Revelle factor can be calculated as proportional to the ratio between DIC and alkalinity. Therefore, Sabine et al. were able to produce a global map of the Revelle factor, ranging from 16 in cold Antarctic waters to 8 in warm tropical basins.

M,

you just made the mistake of using bald assertions again. I told you, copy a lot. I do not expect people to remember stuff I wouldn’t if I were on the other side. I DO expect them to actually support their assertions with more than more arm waving. You simply state they say you can do it.

SHOW ME!!! Show me why THEIR assertions are meaningful!!

Sigh. I’m not a carbon-cycle expert myself, but I have read enough of the literature to be able to give you somewhat of a tutorial.

First: in pure H2O, CO2 is not very soluble, but it would follow Henry’s law. But seawater isn’t pure, there’s a lot of buffer in there, and that buffer allows seawater to dissolve a _lot_ more carbon than pure H2O would be able to.

Second: The total carbon in seawater is equal to the carbon in the dissolved CO2/H2CO3 plus the HCO3- plus the CO3=.

Third: Adding CO2 to the solution increases the acidity, driving the equilibrium towards CO2/H2CO3.

Fourth: Therefore, the ratio of CO2/H2CO3 to (HCO3- plus CO3=) increases with added CO2.

Fifth: The CO2 in the atmosphere is in Henry’s law equilibrium only with the CO2/H2CO3 in the solution. So, if we were to add a strong acid to the solution, increasing the ratio in part 4, that would decrease the total carbon in the ocean. Of course, when adding CO2 we’re adding both an acid and CO2 at the same time, and so the increased acidity merely means that the increase in total oceanic carbon is _less_ than the 1:1 you’d expect by pure Henry’s law rather than leading to a net loss of carbon.

Okay: so that’s the theory. We can measure DIC (that’s Dissolved Inorganic Carbon, see the second step) experimentally. Bolin and Eriksson found that [CO2] = 0.0133 mmol, [HCO3-] = 1.9 mmol, and [CO3=] = 0.235 mmol. We can also measure alkalinity experimentally, which is important because alkalinity = [HCO3-] + 2[CO3=], and Bolin and Eriksson found alkalinity equal to 2.37 mval. You can combine that with the dissociation constants of H2CO3 and the solubility of calcium carbonate (k1/[H+] = 143 and k1k2/[H+]^2 = 18, and the calcium concentration of seawater [Ca++] = 10 mmol), and with Henry’s law you can solve for the Revelle factor and get about 12.5.
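The numbers quoted above can be cross-checked with a short Python sketch. It recomputes DIC, the carbonate alkalinity and the ratios K1/[H+] and K1K2/[H+]^2 from the quoted concentrations, then evaluates the Revelle factor d ln[CO2] / d ln DIC at constant carbonate alkalinity. That closed-form expression is derived here under stated simplifications (borate and water contributions to alkalinity ignored, no calcium carbonate precipitation or dissolution); it is not necessarily Bolin and Eriksson's exact procedure.

```python
# Concentrations quoted in the comment above (mmol; alkalinity in mval).
CO2_AQ = 0.0133   # dissolved CO2 (CO2 + H2CO3)
HCO3 = 1.9        # bicarbonate
CO3 = 0.235       # carbonate


def carbonate_summary(co2: float, hco3: float, co3: float):
    """Return DIC, carbonate alkalinity, K1/[H+] and K1*K2/[H+]^2."""
    dic = co2 + hco3 + co3
    carb_alk = hco3 + 2.0 * co3
    a = hco3 / co2   # [HCO3-]/[CO2] equals K1/[H+]
    b = co3 / co2    # [CO3=]/[CO2] equals K1*K2/[H+]^2
    return dic, carb_alk, a, b


def revelle_factor(a: float, b: float) -> float:
    """d ln[CO2] / d ln DIC with carbonate alkalinity held fixed.

    Uses DIC = [CO2](1 + a + b) and Alk = [CO2](a + 2b), where a scales as
    1/[H+] and b as 1/[H+]^2; eliminating d ln[H+] between the two
    differentials gives the expression below. Borate is ignored.
    """
    return 1.0 / (1.0 - (a + 2.0 * b) ** 2 / ((a + 4.0 * b) * (1.0 + a + b)))


if __name__ == "__main__":
    dic, alk, a, b = carbonate_summary(CO2_AQ, HCO3, CO3)
    print(f"DIC = {dic:.4f} mmol, carbonate alkalinity = {alk:.2f} mval")
    print(f"K1/[H+] = {a:.0f}, K1K2/[H+]^2 = {b:.1f}")
    print(f"Revelle factor (quoted concentrations): {revelle_factor(a, b):.2f}")
    print(f"Revelle factor (rounded ratios 143, 18): {revelle_factor(143, 18):.2f}")
```

Both variants land between 12 and 13, consistent with the value of about 12.5 given above; including borate in the alkalinity, as the caveat below notes, would shift the result somewhat.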

And now I’m tired of typing stuff in, but I’ve found a non-paywalled reference for you: http://ocean.mit.edu/~mick/Papers/Omta-Goodwin-Follows-GBC-2010.pdf. I will note one caveat to my above data, which is that Egleston (2010), referenced in Omta (2010), shows that inclusion of borate in the alkalinity equation changes the answer somewhat, especially as the pH of the solution approaches 7.5. If you want more experimental data, Egleston uses alkalinity and DIC from the GLODAP project and temperature and salinity from the World Ocean Atlas (because dissociation constants are functions of temperature and salinity).

Sadly, I suspect that you are a troll, and that my work here is therefore meaningless, but perhaps others can learn something…

Thank you, M!

It’s good stuff, what we are calling multi-physics these days.

“The quantity O has turned out to be particularly useful for a number of reasons. First of all, it is much more constant than the Revelle buffer factor that has been extensively applied in theories of the ocean‐atmosphere carbon partitioning.”

So, this paper that finds the Revelle buffer factor to be less useful you think I should accept as proving the Revelle buffer factor??

Ah, yes, the subtle* difference between “this approach is better” and “the old approach is not useful”.

Omta et al: “Unfortunately, R is not constant: it varies between approximately 8 and 15 at the ocean surface [Watson and Liss, 1998]. Furthermore, the globally averaged Revelle buffer factor depends strongly on the total amount of carbon in the ocean‐atmosphere system [e.g., Goodwin et al., 2007]. We now derive an alternative index that is more constant than R.”

Translation for the purposes of my original argument: The Revelle factor is large (in this case, greater than 8). That’s all that’s needed for the use of the Revelle factor in support of the fact that CO2 has a long residence time in the atmosphere. You’ll note that in my original post I cited Sabine et al who also discussed this range of 8 to 16. Omta et al. also point out that the Revelle factor will increase with increasing emissions, up to a factor of 19 at 1000 ppm CO2, which just increases the proportion of CO2 which stays in the atmosphere. Nowhere does Omta say that the Revelle factor is wrong, merely that they prefer their new “O” factor because its behavior is less sensitive to changes in emissions and other factors (and that behavior is monotonic, even past 1000 ppm).

So yes, this paper supports my point quite well. It also demonstrates the difference between real science (“let’s figure out how the Revelle factor changes over space and different scenarios, and whether there might be other factors that could be useful descriptions of the system”) and junk science (“the Revelle factor doesn’t exist, and the historic CO2 concentration increase might be due to magic fairies rather than human CO2 emissions, despite the fact that several completely independent methods all demonstrate otherwise”).

-M

*This is meant to be sarcastic, by the way. I realize you might not do well with subtle.

M,

sorry, your sarcasm doesn’t work very well.

Let’s start with the basics. Where is the definition of the Revelle Factor? That is, what are the components, the constant(s) if any, the equations, relationships?

Next, what are the observations or experiments that these are based on?

Sadly, you are typical of the knowledgeable types who deal with Climate Science regularly and never question the basics. They do not exist in your explanations and do not exist in that paper. Whether they actually exist in the papers referred to by you and the paper you linked I do not know.

Here is a discussion about the same issue by Jeff Glassman and Pekka Pirilä:

http://judithcurry.com/2011/08/13/slaying-the-greenhouse-dragon-part-iv/#comment-99490

http://judithcurry.com/2011/08/13/slaying-the-greenhouse-dragon-part-iv/#comment-99650

Again, there are no basics establishing that the Revelle Factor was EVER fundamentally established as a part of science. Pekka, like you, points to many issues that very well could have been a part of a Revelle Factor IF IT HAD EVER BEEN ESTABLISHED!!!

It wasn’t. It is one of several myths of Climate Science that modern scientists are working around and filling in. Notice that Pekka states that the Revelle Factor could actually range to 1. So we have a mythological factor that can range from 1 to over 16 that is a kind of buffer effect for CO2 and water. Gee whiz. Color me impressed by all the hard science going on!! Any idea what the temperature curves are like? How about what actually causes the buffering and its curve or linear relationship? Yup, I am just overwhelmed by all the data you have inundated me with about the Revelle Factor.

This is sarcasm in case you didn’t notice.

Let’s try a little reading comprehension here, Kuhnkat:

You say: “Notice that Pekka states that the Revelle Factor could actually range to 1.”

Pekka states: “Thus Henry’s law remains valid for fixed pH. The Revell factor is 1.0 in that case.”

Um. Note the conditional in that sentence (you do understand conditionals, right? I don’t want to overtax your small brain, as you have previously admitted that you are “very ignorant”). If we keep pH fixed, then the Revelle factor is 1.0. But, in the real world, pH is not constant, and adding CO2 will make a solution more acidic. If you want to test that, extract some red cabbage juice (which makes a good pH indicator), and you can show that adding dry ice (or even just waving a juice-soaked towel in the air to absorb CO2) will increase the acidity. If you read my post starting at “First:” you’d see that the chemical theory is clear. Yes, the Revelle factor depends on the buffering, dissolved carbon, and temperature of the solution. That doesn’t make it “mythological”, it just makes it dependent on conditions. Is gravity mythological because it is 9.8 m/s^2 here, but 0 m/s^2 in interstellar space far from any massive bodies? I think not. I actually gave you every single constant and equation you needed (assuming you know what a dissociation constant is, but then, I’m not here to teach you high school chemistry, though you could clearly use a refresher). So the Revelle factor can be experimentally measured, and it has been, with the answer being “between 8 and 16 depending on where in the ocean you look”.

Heck, you could dissolve some sodium bicarb in solution and measure Revelle factors yourself.
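A home measurement reduces to simple arithmetic on two states of the same sample. This sketch uses hypothetical bench readings (not data from the thread) and also checks Pekka's conditional case: if [H+] is held fixed, dissolved CO2* stays a constant share of DIC, so pCO2 scales one-for-one with DIC and R is exactly 1. The k1, k2 defaults are assumed illustrative constants.

```python
def revelle_from_measurements(pco2_before, pco2_after, dic_before, dic_after):
    """Finite-difference Revelle factor from two measured states of one sample."""
    rel_pco2 = (pco2_after - pco2_before) / pco2_before
    rel_dic = (dic_after - dic_before) / dic_before
    return rel_pco2 / rel_dic


def co2_fraction(h, k1=1.4e-6, k2=1.2e-9):
    """Fraction of DIC present as CO2* at fixed [H+]; k1, k2 are assumed values."""
    return h * h / (h * h + k1 * h + k1 * k2)


# Hypothetical bench readings: pCO2 (microatm) and DIC (micromol/kg) before and
# after bubbling in a little CO2, which leaves alkalinity unchanged.
r_measured = revelle_from_measurements(400.0, 420.0, 2000.0, 2010.0)

# Pekka's conditional case: hold [H+] fixed, so CO2* is a constant share of DIC.
h = 10.0 ** -8.1
f = co2_fraction(h)
r_fixed_ph = revelle_from_measurements(2000.0 * f, 2010.0 * f, 2000.0, 2010.0)

print(round(r_measured, 6), round(r_fixed_ph, 6))  # 10.0 1.0
```

A 5% rise in pCO2 for a 0.5% rise in DIC gives R = 10; the fixed-pH limit gives 1, which is the conditional, not the real-ocean case.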

You, my dear troll, are “very ignorant”. And this is why Curry’s attachment to her “e-salon” is of little value. It is saturated with people like you. Which is why the best blogs use moderation to keep the signal to noise ratio at some reasonable level.

Curry’s E-salon is of high value because people here are very willing to learn from experts who drop by and impart knowledge, and occasionally the experts acknowledge something they pick up here which they were not previously aware of.

Of course, the ‘experts’ running blogs which censor anything which threatens their apparent intellectual superiority won’t gain in this way, because experts who behave like arrogant pricks are generally unlikely to create an ambience in which they can teach well, or indeed learn.

M,

how many other laws do climate scientists deal with that are only strictly applicable at a fixed temperature or at equilibrium (say, Stefan-Boltzmann), yet are used anyway KNOWING that the temperature, pressure and flux are continuously changing?? Sorry, don’t want to tax your brain too much. You obviously have much more important things to do than try and educate this ignorant person.

Now, do you think that every point in the ocean is changing quickly enough 24/7 that there is NEVER a time that the pH actually stays constant for a measurable length of time? Even if it doesn’t, wouldn’t the 1 be a LIMIT of the range??

It was a nice try though, distracting from the issue that there is no support for an actual Revelle Buffer Factor presented here or in the papers linked other than many scientists using or referring to it without definition or derivation. I will accept this as your acquiescence that YOU and Pekka do not have this to hand. Maybe you can research it and provide the information?

M

I am humbled.

Thank you.

M wrote “and yes, I do get frustrated at having to debunk stupid myths over and over and over again.”

I have submitted a comment to Energy and Fuels explaining the error in Prof. Essenhigh’s paper; I did so in the hope of limiting the spread of this particular error, which does neither side of the debate any good. Prof. Essenhigh is right that residence time is about five years, but this is entirely uncontroversial; the IPCC put the figure at about four years. However, the rise and fall of atmospheric CO2 is not governed by the residence time but by the adjustment time, and hence the conclusion is incorrect. My paper uses a one-box model, essentially identical to that used by Essenhigh, to explain the difference between residence time and adjustment time (amongst other things) and demonstrates that the observations are completely consistent with the generally accepted anthropogenic origin, but not with a natural origin. The paper has been conditionally accepted; I am just working on the corrections at the moment.

This particular aspect of the carbon cycle is not straightforward and it is perhaps not surprising that this confusion of residence time and adjustment time should occur. I wouldn’t say this was a stupid myth; the solution wasn’t immediately obvious to me before I looked into it. Part of the reason why it has persisted is perhaps that it has been deemed too basic to have been discussed in detail in the peer-reviewed literature (until now).
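The residence-time versus adjustment-time distinction comes down to dividing by different fluxes. Residence time divides the whole atmospheric stock by the gross outflow (how long a given molecule stays before being swapped), while adjustment time divides only the excess by the net uptake (how fast the excess is drawn down). The numbers below are round, assumed early-2010s magnitudes for illustration, not values from the paper, and a single adjustment time is itself only a one-box simplification.

```python
# Round, illustrative carbon-cycle numbers (assumptions, roughly early-2010s scale).
PPM_TO_GTC = 2.13          # GtC per ppm of atmospheric CO2
ATMOS_PPM = 390.0          # current concentration
PREINDUSTRIAL_PPM = 280.0  # baseline
GROSS_OUTFLUX = 200.0      # GtC/yr gross transfer from atmosphere to ocean + land
NET_UPTAKE = 5.0           # GtC/yr net uptake (emissions minus atmospheric growth)

stock = ATMOS_PPM * PPM_TO_GTC
excess = (ATMOS_PPM - PREINDUSTRIAL_PPM) * PPM_TO_GTC

residence_time = stock / GROSS_OUTFLUX  # turnover of individual molecules
adjustment_time = excess / NET_UPTAKE   # drawdown of the excess concentration

print(f"residence  ~ {residence_time:.1f} yr")
print(f"adjustment ~ {adjustment_time:.0f} yr")
```

Large gross fluxes in both directions swap molecules quickly, but they mostly cancel; only the small net imbalance removes the excess, which is why the two times differ by roughly an order of magnitude.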

Congratulations on your paper!

I am glad that you and M have used “adjustment time” as the proper name to give to the impulse response settling time. As a part-time researcher, I haven’t run across this before, and didn’t realize that Rodhe defined this in 1979.

Do you give a single value to the adjustment time or do you give it a range of values?

This is exactly what I continuously discover. Casual readers see the results being presented and they assume that some unverified software program is spewing nonsense because they don’t get the fundamental explanation. Once you start applying a compartment model (or box model as you refer to it) to the problem space, the answer becomes obvious.

Take a look at the comment in this thread I made a couple of days ago concerning my own attempt at a box model for sequestration:

http://judithcurry.com/2011/08/24/co2-discussion-thread/#comment-106310

I bet that it supports your findings, and I did the analysis because I was having a hard time coming up with a fundamental understanding of the fat tail response curve. My only disagreement is that I do think it is straightforward, because it is the same thing I would do to model diffusion of dopants and other low concentration particles in a semiconductor material. Electrical engineers and material scientists consider that a straightforward problem, and this understanding is what enables all computers that we are using today (as we proceed to type away).
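The fat tail from diffusion shows up even in the simplest compartment model. This is a hypothetical sketch, not the commenter's model: a chain of equal boxes exchanging with neighbours at rate k (a discretized 1-D diffusion), with the pulse starting in box 0. Box 0 initially drains at rate k, exactly like a single exponential, but at late times it decays roughly as 1/sqrt(t) instead of exp(-kt).

```python
import math


def chain_response(n_boxes=200, k=1.0, dt=0.01, t_end=10.0):
    """Fraction of an initial pulse left in box 0 of a linear chain of equal boxes.

    Neighbouring boxes exchange at rate k (explicit Euler), which is a
    discretized 1-D diffusion; returns (fraction in box 0, total mass).
    """
    c = [0.0] * n_boxes
    c[0] = 1.0
    for _ in range(int(round(t_end / dt))):
        flux = [k * (c[i] - c[i + 1]) for i in range(n_boxes - 1)]  # i -> i+1
        for i, f in enumerate(flux):
            c[i] -= f * dt
            c[i + 1] += f * dt
    return c[0], sum(c)


remaining, total = chain_response()
single_exp = math.exp(-1.0 * 10.0)  # one box draining at the same initial rate k
print(remaining, single_exp, total)
```

At t = 10/k the chain keeps roughly 18% of the pulse in box 0, against about 0.005% for the single exponential, while total mass is conserved. That is the same long-tail shape seen in carbon-cycle impulse responses.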

Mr. Marlowe Johnson, solve this problem, with the IPCC product? Where: (GI+GO)=0

That’s easily solved with a simple change of variables:

Tom = G[arbage]

settledscience, We all know that AGW science has shown absolutely no problem changing the variable, to suit themselves. Once again, your crowd has no intention to address the question I put forward: How may we ‘solve this problem, with the IPCC “product”:) which is= 0.’

Stupid analogy. It’s not just the difference that is important. Knowing where the money is going and what it is doing provides insightful.

Kermit says, “Knowing where the money is going and what it is doing provides insightful.” Sad to know that there is nothing left & that’s not right. What difference does it make now, that’s the question, we don’t know? What a problem a day makes…

http://www.kitco.com/ind/willie/aug252011.html

Hope, this will help.

I’ll waste a little bit of time on some basic chemistry here. Hopefully nobody else raised these points.

The “residence time” is a pretty nebulous concept and doesn’t have much to do with the chemistry of the CO2 in and on the earth. The climate concept is that humans have injected (are injecting) a significantly large amount of CO2 into the atmosphere over a relatively short period of time. This excess CO2 does react and does not all stay in the atmosphere. So the concept of residence time would be “how long will it take for all the human-induced CO2 to be removed from the atmosphere and the level go back to the 280 ppm or so equilibrium”.

The first question is “has CO2 EVER been in equilibrium in the atmosphere?” The paleo records all indicate that it has not been. The level has varied widely in different eras, generally lagging behind changes in temperature. Equilibrium chemistry is a very chancy thing. The best way to determine equilibrium is by determining the free energies of the reactions involved and the amounts of reactants, and calculating what the equilibrium values are in a phase diagram. This is totally impossible to do because we do not know the reactions involved or their rates: simple solution/dissolution mass flow in the oceans, uptake of CO2 as carbonate into plants and animals, rate of release from decay, rate of carbon accumulation in sediments, rate at which sediments are being subducted at the plate boundaries, etc.

The second question is “who cares?” The amount of CO2 in the atmosphere fluctuates quite a bit on many time scales. Any notion of a residence time for CO2 in the atmosphere will depend almost entirely on the assumptions used to calculate it. Given the small numbers involved (CO2 in the atmosphere << CO2 in the oceans << CO2 and C in mantle and surface rock), it is only a small blip in the noise. Part of the who cares is that the climate doesn’t distinguish between molecules or atoms, only the concentrations involved, and to a tiny extent the isotopes involved. Once it is there, it goes where it goes and does what it does.

George M

Wouldn’t your 280 ppm equilibrium be more properly 230+/-50 ppm steady state, seasonally and geographically normalized, if we’re talking paleo (to six sigma, and then allowing for uncertainty)?

Though I doubt this much alters your argument... whatever it is.

Hal – Since it will become clear to you from the various responses below that the actual decline of excess CO2 toward baseline occurs over centuries, rather than 5 years, with a long tail requiring many thousands of years, I would be interested in your response to the original question – how much should that concern us?

can you please not sidetrack the discussion before it starts

Stephen – I don’t think it is a sidetrack to refer back to Hal’s email that served as the reason for this entire post. The final paragraph of his email captured the essence of his point – if the residence time is very short (5 years), there is little reason to be concerned. He never answered whether there was a reason for concern if the residence time is much longer, which it is. That is a legitimate question to ask in my view.

Correct. Your comment is clearly on topic, not a sidetrack.

By “long tail” you are referring to a probability distribution, aren’t you?

Some subsequent comments make reference to that phrase without seeming to understand it. Or maybe you’re using it differently than I expect. Please clarify.
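In this context "long tail" is usually meant in the pulse-response sense: the fraction of a CO2 pulse still airborne after t years, which behaves like a survival curve. The sketch below evaluates the Bern2.5CC fit quoted in IPCC AR4 WG1 (Table 2.14, note a) next to a single 5-year exponential. Treat the comparison as illustrative; the constant a0 term stands in for the part removed only by very slow processes beyond the fit's horizon.

```python
import math

# Bern2.5CC pulse-response fit quoted in IPCC AR4 WG1 (Table 2.14, note a).
A0 = 0.217
TERMS = [(0.259, 172.9), (0.338, 18.51), (0.186, 1.186)]  # (weight, e-folding time in yr)


def airborne_fraction(t):
    """Fraction of a CO2 pulse still in the atmosphere t years after emission."""
    return A0 + sum(a * math.exp(-t / tau) for a, tau in TERMS)


def single_exponential(t, tau=5.0):
    """What a single 5-year decay (the 'residence time' reading) would predict."""
    return math.exp(-t / tau)


for t in (0, 5, 20, 100, 500):
    print(t, round(airborne_fraction(t), 3), round(single_exponential(t), 3))
```

After a century roughly a third of the pulse is still airborne under the multi-exponential fit, while the 5-year exponential is effectively zero. That gap is the "long tail".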

That is nothing but unscientific hand-wavy claptrap.

That does not address the perfectly clear, direct question you were asked, nor in your rambling, off-topic comment, did you ever directly, responsively answer that perfectly clear, direct question you were asked.

Fred Moolten asked you:

“I don’t know” would have been a perfectly respectable, honest answer, Harold.

Faking it is not respectable.

In fact, you did even state categorically that you don’t know.

But you should have been honest and just stopped there instead of trying to pretend you know something relevant to the subject, by babbling on about meaningless factoids.

You mean “poisoning.” The level of CO2 that’s considered poisonous is 100% irrelevant to informed discussion of the CO2 greenhouse effect. It’s just a means of faking knowledge, which you don’t have.

No, you don’t, nor do you know anything relevant to making informed estimates of the probabilities of longer or shorter residence times.

Oh, is it really “somewhat likely” Harold? Based on what scientific expertise can you make that assertion? None. Can you quantify “somewhat likely?” No, you cannot. You don’t even know what quantities to compute, so you really have nothing of value to contribute — except for name-dropping, which obviously serves Curry’s interest in increasing her notoriety.

Funny that “scientist” is nowhere on his résumé! Less funny is that neither you nor Curry care that he has no scientific credentials whatsoever.

You just advocated fudging the science (“closer to the 5 year residence time mark”) in favor of a particular policy outcome (“different decisions and action plans”), the exact thing that you climate science deniers are always falsely accusing all the legitimate scientists of doing. I’m very sure that from his political days, your old pal “Jack” can explain to you what “unintentional truth-telling” means. :-)

Amateur.

Harold H Doiron

I believe there are clear, proven and well-known beneficial effects of CO2 in the atmosphere with regards to increased rates of plant growth, better crop yields, etc. needed to support the growing population of the planet. I don’t know what a harmful level of CO2 in the atmosphere would be. I have read that US submarines are allowed to have 8,000 ppm CO2 before there is any concern about health related effects (more than 20 times current levels). I don’t know what the optimum level of CO2 in the atmosphere would be, all things being considered, but it is somewhat likely that the level would be higher than it is now, and I have factored this into my thinking about the current situation.

That’s quite the credo. Been worshipping at the temple of Idsos, have we?

I’ve considered your opinion for a week, as I wanted to give it careful thought.

I see Chief Hydrologist has punctured and lampooned your faith, and he needs no help from me.

However.

For on the scale (we have good cause to believe by the paleo record, extrapolations, and SWAG) of ten million years, the CO2 level of the atmosphere has been ergodically steady at 230 ppm +/- 50 ppm. We’re over 44% above that mean now, which appears to be unprecedented on the span of a geological epoch.

Certainly the ice core record of the past 800,000 years indicates this range to a high degree of certainty, as confirmed by the stomata count of plant fossils and myriad other evidences.

Where we substitute our own judgement of ‘best’ for what has been the dominant mode of a principle component of our complex, dynamical, spatiotemporal world-spanning climate for a span of time an order of magnitude longer than the existence of our species, we exhibit what can only be called arrogance.

Where we do it based on spinmeistering and public relations, we display profound folly.

CO2 at levels above 200 ppm up to about 2500 ppm functions as an analog to plant hormones, not as a ‘nutrient’ or fertilizer.

It’s not so very different to plants from steroids in professional athletes. It alters primary and secondary sexual characteristics, modifies structures, and results in additional mass in some parts of the plants.

Over seasons and generations, plants adapt to higher CO2 levels in some ways, gradually tapering off in the ‘benefits’ realized, but retaining negative effects longer than they keep the benefits.

That’s why plants as a group flourished quite as well at 180 ppm as at 280 ppm and at 380 ppm.

The purported benefits of CO2 elevation have only one medium term experiment in the field that I know of, and although it demonstrates selective benefits in field conditions in terms of favoring some species over others, it doesn’t match the levels seen in hothouse conditions with unlimited nutrient and ideal growing conditions.

In short, there is nothing clear, proven, or — if well-known — especially correct in mad schemes to profit from higher CO2.

It won’t end world hunger, as Lord Lawson suggested in his book based on nothing more than speculation and wishful thinking.

It’s a silly opinion. You’re welcome to hold it, but please don’t claim it’s clear or proven.

Please factor the Uncertainty of your beliefs into your thinking.

Because to my thinking, a Perturbation on unprecedented scales in a Chaotic system will tend to disturb ergodicity in unpredictable ways, which increases the cost of climate-related Risks to me.

Those costs are real, and translate into money taken from me.

And I don’t recall consenting to your CO2-worship picking my pocket.

And let us not overlook the role of freshwater bodies as carbon sinks.

This is apparently much larger than previously recognized by the climate science community.

I would suggest that before we start diverting the topic, however nicely, into predictions about “X”, we should define the behavior of CO2 in the atmosphere more clearly.

“I would suggest that before we start diverting the topic, however nicely, into predictions about ‘X’, we should define the behavior of CO2 in the atmosphere more clearly.”

hunter, Why?… Of course, you already accounted for this? Well?…

http://www.dailymail.co.uk/sciencetech/article-2030337/Scientists-underground-river-beneath-Amazon.html

Scientists confirm that this is to be their ‘last’ surprise.

Tim Ball: “Pre-industrial levels were 50 ppm higher than those used in the IPCC computer models. Models also incorrectly assume uniform atmospheric distribution and virtually no variability from year to year. Beck found, “Since 1812, the CO2 concentration in northern hemispheric air has fluctuated exhibiting three high level maxima around 1825, 1857 and 1942 the latter showing more than 400 ppm.” Here is a plot from Beck comparing 19th century readings with ice core and Mauna Loa data…

“Elimination of data occurs with the Mauna Loa readings, which can vary up to 600 ppm in the course of a day. Beck explains how Charles Keeling established the Mauna Loa readings by using the lowest readings of the afternoon. He ignored natural sources, a practice that continues. Beck presumes Keeling decided to avoid these low level natural sources by establishing the station at 4000 meters up the volcano. As Beck notes “Mauna Loa does not represent the typical atmospheric CO2 on different global locations but is typical only for this volcano at a maritime location in about 4000 m altitude at that latitude.” (Beck, 2008, “50 Years of Continuous Measurement of CO2 on Mauna Loa” Energy and Environment, Vol 19, No.7.) Keeling’s son continues to operate the Mauna Loa facility and as Beck notes, “owns the global monopoly of calibration of all CO2 measurements.” Since Keeling is a co-author of the IPCC reports they accept Mauna Loa without question.”

(Time to Revisit Falsified Science of CO2, December 28, 2009)

Wagathon, the Beck paper is a joke. What they measure at Mauna Loa is a consistent record, same place year on year, clear of urban influences. Beck’s results are from multiple records, often close to industrial CO2 sources, using multiple analytical methods. Notice how the variability suddenly falls when more precise methods are adopted.

Consistent?

From May 15th to 21st CO2 went up 1.84ppm

From July 17th to 23rd CO2 went down 1.34ppm

ftp://ftp.cmdl.noaa.gov/ccg/co2/trends/co2_weekly_mlo.txt

There was a even a 1.93ppm jump in 7 days recently.

No consistency there.

wow that’s an entirely new class of retarded argument

Your lack of curiosity never surprises me.

If CO2 goes up 1ppm in a year it is a sign of man-made catastrophic climate change.

If it goes up almost 2 ppm in 7 days it is a sign of the natural in and out breathing of the earth …

“The sawtooth pattern represents the natural carbon cycle. Every summer in the northern hemisphere, grass grows, leaves sprout, and plants flower. These natural processes draw CO2 out of the air. During the northern winters, plants wither and rot, releasing their CO2 back into the air. This sawtooth pattern shows the planet breathing.”

http://www.terrapass.com/blog/posts/science-corner

The above explanation is a joke when you look at the weekly data.

Bruce,

You miss the point entirely. While there may be short term fluctuations of the same order as the yearly increase, those fluctuations do not scale over time. So while you may indeed get an average reading on one day which is equal to the lowest reading from a year or so before, you are not going to get one which is equal to the lowest reading from a decade earlier, still less from several decades earlier, and it is this which reveals the trend, clearly and unmistakeably.
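The point that short-term fluctuations do not scale over time can be checked on a synthetic record. Every number here is hypothetical (a 2 ppm/yr trend, a 3 ppm seasonal cycle, and up to 1 ppm of day-to-day noise), not fitted to Mauna Loa. Daily swings are comparable to a year's increase, yet a least-squares fit recovers the trend, and no day in year 10 gets back down to the highest day of year 1.

```python
import math
import random

rng = random.Random(42)  # fixed seed so the illustration is repeatable

# Hypothetical daily series: 2 ppm/yr trend, 3 ppm seasonal amplitude,
# and +/-1 ppm of short-term "weather" noise. None of these are fitted values.
t_years = [d / 365.0 for d in range(10 * 365)]
series = [380.0 + 2.0 * t + 3.0 * math.cos(2 * math.pi * t) + rng.uniform(-1.0, 1.0)
          for t in t_years]

first_year = series[:365]
last_year = series[-365:]

# Ordinary least-squares slope in ppm per year.
n = len(series)
mean_t = sum(t_years) / n
mean_y = sum(series) / n
slope = (sum((t - mean_t) * (y - mean_y) for t, y in zip(t_years, series))
         / sum((t - mean_t) ** 2 for t in t_years))

print(f"fitted trend: {slope:.2f} ppm/yr")
print(f"lowest value in year 10 exceeds highest in year 1: {min(last_year) > max(first_year)}")
```

The bounded wiggles cancel out in the fit, whereas the trend accumulates year after year.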

Your point is to ignore the explanation that explains the sawtooth component of the Mauna Loa graph because it is a joke.

Why are there short-term fluctuations? Science would explain them. Propaganda explains them away with joke “causes”.

If the “natural carbon cycle” can be 2ppm over 7 days, why not 2ppm over 365 days?

CO2 follows temperature historically.

wow that’s not entirely a new class of numbnut argument

Bruce,

Take those variations, and divide them by the average atmospheric concentration over the relevant period, and tell me what sort of variation you get in percentage terms. It is going to be well under 1% in all cases.

This would seem more than sufficiently consistent for the purposes it is being used for.
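The percentage check suggested here is a one-liner per number. The sketch divides the weekly swings quoted earlier in the thread by an assumed mean of about 390 ppm (a round 2011-era figure, not taken from the data file).

```python
MEAN_PPM = 390.0  # assumed approximate 2011 Mauna Loa level

# Weekly swings quoted earlier in the thread (ppm).
swings = {"May 15-21 rise": 1.84, "Jul 17-23 fall": 1.34, "largest 7-day jump": 1.93}

for label, ppm in swings.items():
    print(f"{label}: {100.0 * ppm / MEAN_PPM:.2f}% of the mean")
```

All three come out at about 0.5% or less of the mean.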

2ppm over 1 day?

http://cdiac.ornl.gov/ftp/trends/co2/Jubany_2009_Daily.txt

OK, here is the graphical representation of that data.

http://img197.imageshack.us/img197/9555/co2.gif

Read again what jimbo said above: “… tell me what sort of variation you get in percentage terms ..”

“tell me what sort of variation you get in percentage terms.”

Ok.

AGW = 0.5% change in ppm per 365 days

Jubany = 0.5% change in CO2 per 1 day

But more importantly, this “sawtooth” pattern explanation seems awfully bogus since daily changes can be 2ppm.

“The sawtooth pattern represents the natural carbon cycle. Every summer in the northern hemisphere, grass grows, leaves sprout, and plants flower. These natural processes draw CO2 out of the air. During the northern winters, plants wither and rot, releasing their CO2 back into the air. This sawtooth pattern shows the planet breathing.”

I mean … really! If you only looked at the yearly graph you might be naive enough to believe such an explanation.

Bruce, Why can you not just look at the data impartially? If that curve charted my pay rate in $/hour based on working commissions, I would not be complaining about the fact that there was a ripple and some noise in the overall upward slope.

You may be having a problem with understanding signal processing mathematics, and in particular its digital counterpart. What oscillations you see and their amplitude are really a matter of the impulse response caused by a forcing function. Depending on the frequency response, daily fluctuations can be filtered out, yearly fluctuations filtered out less, and the longest periods filtered out the least. The fact that samples are taken at the same time each day points out the stark reality of the Nyquist criterion.

The Nyquist criterion says that digitally sampling at the same rate as a naturally occurring frequency will fold (alias) that frequency down so that it looks like a constant value. You actually have to sample at more than twice the natural frequency (the Nyquist rate), i.e. take measurements more than twice per day, to see the daily fluctuations. We electrical engineers had this burned into our skulls during school, so we really see nothing weird about the data. I am not sure what your background is.
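The aliasing argument is easy to demonstrate. The sketch samples a hypothetical pure daily cycle (5 ppm amplitude, arbitrary phase) once per day at the same clock time, and four times per day. The once-a-day samples are all identical, so the daily cycle vanishes completely. Sampling at exactly twice per day can also miss it if the samples happen to land on the zero crossings, which is why the rate has to be strictly above twice the signal frequency.

```python
import math

PERIOD_DAYS = 1.0  # a hypothetical pure daily cycle with 5 ppm amplitude


def daily_cycle(t_days):
    return 5.0 * math.sin(2 * math.pi * t_days / PERIOD_DAYS + 0.3)


def sample(samples_per_day, n_days=5):
    """Sample the cycle at a fixed rate starting from t = 0."""
    n = int(samples_per_day * n_days)
    return [daily_cycle(i / samples_per_day) for i in range(n)]


once = sample(1)  # same clock time every day
four = sample(4)  # comfortably above the Nyquist rate of 2 samples/day

print("once per day :", [round(x, 3) for x in once])
print("4 per day    :", [round(x, 3) for x in four])
```
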

If your pay changed daily, and Human Resources explained it was because of yearly variations in the business cycle you would be very, very suspicious.

Bruce, Do you have a problem with reading comprehension too? Note that I crafted my analogy to state that I worked based on commissions. Do I have to explain to you that commissions are susceptible to random fluctuations?

Paul B – perhaps the Beck paper is a joke – I haven’t read it. But Wagathon has touched on a real difficulty with the Mauna Loa data. The site is not ideal for assessing baseline CO2 in the atmosphere as it lies on the flank of a volcano which itself emits large and uncontrolled amounts of CO2. The only way I can think of to allow for such sporadic local contamination is to use the minimum values recorded within each time period, presumably daily, and dump the rest. Perhaps the resulting data are a reliable and meaningful guide to global CO2 levels. Indeed I’m fairly happy to accept that they are, although I would prefer to see a demonstration of this.

This oft-repeated canard about Mauna Loa being an unsuitable site for CO2 measurements is dealt with here:

http://www.skepticalscience.com/mauna-loa-volcanoco2-measurements.htm

Could you check your link FiveString. I could not get it to work. Thanks.

The link is missing a dash between volcano and co2. Perhaps WordPress software removed it. It should be this

Wish this site had an ‘edit’ function… Thanks for the correction Pekka.

“It is true that volcanoes blow out CO2 from time to time and that this can interfere with the readings. Most of the time, though, the prevailing winds blow the volcanic gasses away from the observatory. But when the winds do sometimes blow from active vents towards the observatory, the influence from the volcano is obvious on the normally consistent records and any dubious readings can be easily spotted and edited out”

They edit the data based on supposition? Not good.

When you said the “canard” “is dealt with” I thought you might be talking scientifically not craptastically.

Now that your whining that by your speculation they might use some seemingly spurious measurements has been debunked, you’ve started whining that they should use known spurious measurements.

You do know that 1984 was not intended as an instruction manual, don’t you?

CO2 from underwater volcanoes

http://nwrota2009.blogspot.com/2009/04/getting-gas.html

Estimated number of underwater volcanoes? 3 million+

Where?

If this were anything but Plimer’s pipe dream, we would see interesting profiles of various stuff like HCO3- in the oceans. We don’t.

Hal,

Let’s have a closer look at the 100-year lag idea…

The IPCC states that humans started to introduce CO2 and other dry GHGs ‘markedly’ in 1750:

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/spmsspm-human-and.html

[“Global atmospheric concentrations of carbon dioxide, methane and nitrous oxide have increased markedly as a result of human activities since 1750.”]

So, by 1850 (100 years later) we should have been feeling the ‘full effect’ of the initial input. Thereafter, every year would see an increase in the ‘full effect’ of CO2. This would, logically, lead to an acceleration of the effect of CO2 and, according to the cAGW theory, an acceleration in global warming. So now we can look at the temperature data since 1850:

http://www.woodfortrees.org/plot/hadcrut3gl/from:1850/to:2011

The question is: Do you think that shows an acceleration? If you apply enough smoothing you may do so but then you are adjusting how you interpret the data. The raw data graph shows no warming at all in the last 13 years (if anything a cooling) which itself would cast serious doubt on any interpretation of ‘acceleration’. In addition, any reason given for the cooling – such as ‘natural variation’ – has to consider that any ‘natural variation’ has to be powerful enough to combat not only the initial CO2 effect, but also the acceleration in the initial effect. In that case, natural factors easily outweigh any CO2 effect.

Personally, I think a more important question is: What is the contribution made by CO2 to the Greenhouse Effect? Until we can answer that question correctly, any theory/hypothesis/assertion regarding the supposed warming effect of CO2 is flawed as being based on an assumption.

I agree with hunter that the behaviour of CO2 needs to be ascertained more clearly.

Thanks for an interesting post.

Arfur – There is no reason necessarily to expect an acceleration simply because CO2 is increasing. That is because any warming reduces the radiative imbalance caused by the increased CO2 and thereby reduces the tendency for further warming. Depending on the rate of CO2 rise, we could see a steady warming, an acceleration, or warming at a reduced rate, although as long as CO2 is rising, the temperature trend averaged over multiple decades can be expected to be upward. Over shorter intervals, the effects of other, short-term climate drivers will modify the long term trend, as can be seen by examining the behavior of global temperature anomalies over the past 100 years, with their fluctuating ups and downs overlying an upward trend.
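Fred's point that warming itself shrinks the radiative imbalance can be seen in a textbook one-box energy balance, C dT/dt = F - λT, with the simplified CO2 forcing F = 5.35 ln(C/C0) W/m² (Myhre et al., 1998). This is a hypothetical sketch, not a model anyone in the thread used. The feedback parameter and heat capacity are assumed round values, and a single mixed-layer box ignores the deep ocean. An abrupt doubling warms until the imbalance is gone. CO2 rising by a steady percentage gives a linearly rising forcing, and the warming rate then settles to a constant instead of accelerating.

```python
import math

LAMBDA = 1.25   # W m^-2 K^-1, assumed climate feedback parameter
HEAT_CAP = 8.0  # W yr m^-2 K^-1, assumed effective heat capacity (mixed layer)
C0 = 280.0      # ppm, pre-industrial baseline


def forcing(c_ppm):
    """Simplified CO2 forcing, 5.35 * ln(C/C0) W m^-2 (Myhre et al., 1998)."""
    return 5.35 * math.log(c_ppm / C0)


def run(co2_path, dt=0.1):
    """Euler-integrate HEAT_CAP * dT/dt = F - LAMBDA * T, one CO2 value per year.

    Returns a list of (temperature, top-of-atmosphere imbalance) at each year end.
    """
    temp, out = 0.0, []
    steps = int(round(1.0 / dt))
    for c in co2_path:
        f = forcing(c)
        for _ in range(steps):
            temp += dt * (f - LAMBDA * temp) / HEAT_CAP
        out.append((temp, f - LAMBDA * temp))
    return out


# Case 1: CO2 doubled abruptly and then held: warming erases the imbalance.
step = run([2 * C0] * 100)
# Case 2: CO2 rising 0.5%/yr, so the forcing rises linearly in time.
ramp = run([C0 * 1.005 ** y for y in range(150)])

early = ramp[100][0] - ramp[90][0]  # warming over years 90-100
late = ramp[140][0] - ramp[130][0]  # warming over years 130-140
print(f"step: T = {step[-1][0]:.2f} K, imbalance = {step[-1][1]:.4f} W/m2")
print(f"ramp: {early:.3f} K/decade then {late:.3f} K/decade")
```

Whether real warming accelerates therefore depends on how fast CO2 (and hence forcing) rises, not simply on CO2 rising.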

The relevance of the residence time function is that it tells us how long a given CO2 concentration will continue to exert warming effects if the planet is out of balance. In general, that will be centuries.

Fred,

Not according to the IPCC…

http://www.ipcc.ch/pdf/assessment-report/ar4/wg1/ar4-wg1-chapter3.pdf

See FAQ 3.1 Fig 1 and note:

[“Note that for shorter recent periods, the slope is greater, indicating accelerated warming.”]

So it’s not just the CO2 increase, but the slight acceleration in the increase that supports my argument. If the increased CO2 leads to a radiation imbalance which leads to warming which leads to a reduction in the radiation imbalance which leads to a reduction in warming – as you state – then what is the problem? The trend you speak of is 0.06 C per decade since 1850 and is not increasing from 1850! That size of trend is not even mildly threatening, let alone ‘catastrophic’ (IPCC term, not mine)!

.

CO2 may ‘exert warming effects’ but these effects are not significant, as demonstrated by observed data. I repeat, until you know what the CO2 effect is, you are basing your argument on an assumption.

.

Off to bed now, will have to leave any further reply until tomorrow…

Regards,

The recent temperature record is consistent with continued warming of about 0.15C/decade. Which in turn is consistent with AGW.

That’s all there is to it. And where precisely has the IPCC used the specific phrase “catastrophic”?

So if the temperature falls 4C to the depths of the LIA, that’s natural, but if it recovers by 0.8C, it is man’s fault?

And using any start point other than the IPCC’s chosen start date for ‘accurate data’, i.e. 1850, is misleading. What is your definition of ‘recent’? How about this one…?

http://www.woodfortrees.org/plot/hadcrut3gl/from:1998/to:2011/plot/hadcrut3gl/from:1998/to:2100/trend

.

That’s ‘recent’, so where is your 0.15 C per decade trend there? If you move the start date to the right of 1850, you can get all sorts of trends, but not one of them is the ‘overall trend’. So I just stick to the overall trend. That way, if the temperature starts to increase in an accelerating fashion, the overall trend WILL increase. In fact, the trend today is much lower than it was in 1880. Give me a call back when you can find the trend increasing above that one!

.

Oh, and the IPCC used the term ‘catastrophic’ here:

http://www.ipcc.ch/publications_and_data/ar4/wg3/en/ch2s2-2-4.html

The 0.15C/decade trend is still there even in HadCRUT past 1998, it’s just masked by variation (solar cycle and ENSO). See: http://tamino.wordpress.com/2011/01/20/how-fast-is-earth-warming/

trend is still there

it’s just masked

Lol. Wot?

how-fast-is-earth-warming?

It isn’t. And hasn’t been since 2003.

There have been cooling impacts since about 2003 (2003 was solar max! there’s been a low solar minimum recently!). Plus 2003-2007 was pretty much El Nino after El Nino, whereas since 2007 there have been two quite strong La Ninas.

The earth is still warming, it’s just that since 2003 the above-mentioned cooling impacts have suppressed it.

lolwot,

Faith is a truly powerful debating tool. If you say…

[“There have been cooling impacts since about 2003 (2003 was solar max! there’s been a low solar minimum recently!). Plus 2003-2007 was pretty much El Nino after El Nino, whereas since 2007 there have been two quite strong La Ninas.”]

…Does that mean that there were no La Nina events between 1910 and 1940 or between 1970 and 1998? What caused those warmings? You want to use CO2 to explain a warming but claim that it is overcome by natural forcings during a cooling period. That denies the likelihood that natural forcings can work both ways.

Arfur, any period of time which starts with El Ninos and ends with La Ninas (eg 2005 to 2011) will have a cooling bias due to ENSO that has nothing to do with the long-term warming trend.

What you are doing is little more sophisticated than saying temperature dropped from 1998 to 2000 and claiming this contradicts global warming. It does not. 1998 to 2000 was El Nino to La Nina. That’s short-term noise that can overwhelm the long-term trend.

2005 to 2011 has about a 0.1C cooling impact from falling ENSO. It also has a cooling impact from the solar cycle.

In short, that’s why the period 2005-present is kind of flat. It’s not because the long-term warming has stopped; it’s because ENSO and the solar cycle happen to line up over that period to cancel it out.
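The claim that a window running from El Nino to La Nina can look flat or even cooling while the underlying trend is unchanged can be checked on synthetic data. The sketch below assumes a steady 0.015 K/yr trend (0.15 C/decade) plus a regular 4-year, 0.1 K oscillation as a crude stand-in for ENSO; real ENSO is irregular, so this only illustrates how least-squares trends behave, not what the actual record shows.

```python
import math

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical monthly series: a steady trend plus a 4-year, 0.1 K oscillation
# standing in for ENSO (both values are assumptions, not observations).
TREND = 0.015                                   # K per year
t = [m / 12 for m in range(42 * 12)]            # years since start
temp = [TREND * ti + 0.1 * math.sin(2 * math.pi * ti / 4) for ti in t]

# Full 42-year window: the oscillation largely averages out.
long_slope = ols_slope(t, temp)

# Two-year window from an oscillation peak (month 348, year 29) to the next
# trough (month 372, year 31): same underlying trend, negative fitted slope.
short_slope = ols_slope(t[348:373], temp[348:373])
```

With these assumed numbers the 42-year slope comes out close to the built-in 0.015 K/yr, while the peak-to-trough window gives a negative slope even though nothing about the underlying trend changed.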

“any period of time which starts with El Ninos and ends with La Ninas (eg 2005 to 2011) will have a cooling bias due to ENSO that has nothing to do with the long-term warming trend.”

Excellent point. So how much did the 30-year run of positive PDO from 1975 to 1998, starting with more La Ninas and ending with more El Ninos, contribute to the long-term warming trend?

About 0.1C warming

So, lolwot, what caused the other 0.5 C warming in that period? You are condensing your ENSO argument into a short period and appear unwilling to consider that the same natural causes you claim work against the cAGW theory in the short term can also operate during the warming periods that you appear to attribute to CO2. If, as you state above, ENSO contributed only 0.1C in that period, are you suggesting that CO2 is powerful enough to contribute another 0.5 deg C?

.

If that is your argument, what caused the warming from 1910 to 1945, and what caused the subsequent cooling?

“If, as you state above, ENSO contributed only 0.1C in that period, are you suggesting that CO2 is powerful enough to contribute another 0.5 deg C?”

It may be even more powerful than that. Without aerosol emissions, the amount of warming would have been greater than 0.5C.

.

“If that is your argument, what caused the warming from 1910 to 1945, and what caused the subsequent cooling?”

Solar activity increased in the early 20th century. I think that played a significant part in it. Between that and the limited reliability of the records back then, I am not sure there is a problem there. Global temperature stayed just about flat after the 1940s until it started rising again during the 70s.

lolwot,

If the records were so unreliable back then, how do you know the increase since 1970 is unusual? The HadCRUt dataset goes back to 1850 and clearly shows three distinct warming periods. You want to discount the first two and concentrate on the last one. Then you want to discount the levelling/cooling after the last one. How many goalposts do the warmists want to move?

http://www1.ncdc.noaa.gov/pub/data/cmb/bams-sotc/climate-assessment-2008-lo-rez.pdf

“El Niño–Southern Oscillation is a strong driver of interannual global mean temperature variations. ENSO and non-ENSO contributions can be separated by the method of Thompson et al. (2008) (Fig. 2.8a). The trend in the ENSO-related component for 1999–2008 is +0.08±0.07°C decade⁻¹, fully accounting for the overall observed trend. The trend after removing ENSO (the ‘ENSO-adjusted’ trend) is 0.00°±0.05°C decade⁻¹, implying much greater disagreement with anticipated global temperature rise.”
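The ENSO-adjusted trend in that quote comes from separating out an ENSO-related component. A much simpler way to show the idea is an ordinary least-squares fit with time and an ENSO index as two regressors. This is a plain regression sketch, not a reproduction of the Thompson et al. (2008) method, and both the index and the temperature series below are synthetic, with no lag assumed between them.

```python
import math

def centered_cross(a, b):
    """Sum of products of deviations from the mean (a centered cross-product)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b))

def enso_adjusted_trend(t, temp, enso):
    """OLS fit of temp = a + b*t + c*enso; returns (b, c).

    b is the trend with the linear ENSO contribution removed.
    """
    stt = centered_cross(t, t)
    see = centered_cross(enso, enso)
    ste = centered_cross(t, enso)
    sty = centered_cross(t, temp)
    sey = centered_cross(enso, temp)
    det = stt * see - ste ** 2          # determinant of the 2x2 normal equations
    b = (sty * see - ste * sey) / det
    c = (stt * sey - ste * sty) / det
    return b, c

# Hypothetical six-year monthly window that starts at an index peak and ends
# at a trough, with a built-in 0.015 K/yr trend and 0.1 K per index unit.
t = [m / 12 for m in range(6 * 12 + 1)]
enso = [math.cos(2 * math.pi * ti / 4) for ti in t]
temp = [0.015 * ti + 0.1 * e for ti, e in zip(t, enso)]

raw_trend = centered_cross(t, temp) / centered_cross(t, t)   # time-only fit
adjusted_trend, enso_coeff = enso_adjusted_trend(t, temp, enso)
```

Because the synthetic window runs from an index peak to a trough, the time-only slope understates the built-in trend, while the two-regressor fit recovers both the 0.015 K/yr trend and the 0.1 coefficient, since the synthetic data contain nothing else. Real records add noise and lags, so real adjustments are far less clean.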

Arfur – The IPCC site you linked to reinforces the points I made above, but if you have a question about a specific item, I’ll try to explain the reasoning behind it.

Fred,

You could start with the bit that explains why the slight acceleration in CO2 can be construed as leading both to a reduction in warming and to an acceleration in warming, depending on how you feel at the time.

.

I repeat, the overall trend of 0.06 C per decade is NOT increasing. What is the problem?

How does a line “increase”? If you stick a line of best fit through the data from 1850 onwards, then of course the line is going to be straight. That is what a line is.

the overall trend of 0.06 C per decade is NOT increasing

That’s t-r-e-n-d, lolwot, not l-i-n-e.

Of course it’s not increasing. It’s a straight line you’ve fit through all the data. What do you expect when you apply a line of best fit? That the end part will curve upwards?

The fact is that recent warming is greater than the “overall trend of 0.06C” anyway.

What recent warming?

Oh, you mean that warming, last millennium.

:)

If you correct for ENSO and the solar cycle the warming has continued with no pause. You should correct for these things as they are noise not signal.

Ok lolwot, I’ll explain.

Draw a hockey-stick curve (one that shows an acceleration in warming). Draw a straight line from the origin of the curve to a point a short way along the x-axis. Then draw successive lines, each starting from the origin, to successive points further along the x-axis. Each successive ‘line’ will be showing an increasing ‘trend’ because each successive line will be steeper than the last. Got it?

.

In terms of global temperature, IF the radiative forcing theory were correct, then the overall trend (drawn from the 1850 origin) would show a relatively consistent INCREASE.

.

It doesn’t. It’s really that simple. The observed data does NOT support the theory. The overall trend from 1850 to 2011 is 0.06 C per decade. The overall trend in 1998 was 0.067 Cpd. The overall trend in 1944 (another peak) was 0.06 Cpd, and the overall trend in 1888 (the first peak) was 0.17 Cpd!
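The ‘overall trend’ described here is the least-squares slope from a fixed 1850 origin to each successive endpoint. A minimal sketch of that calculation, run on two hypothetical series (a straight 0.06 C/decade line and an accelerating quadratic curve, neither of them observed data), shows the behaviour described: the fixed-origin slope stays constant for the straight line and rises with every new endpoint for the curve.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

def overall_trends(years, temps, min_years=10):
    """Fixed-origin 'overall trend' (per decade) from years[0] to each later endpoint."""
    return [10 * ols_slope(years[:k], temps[:k])
            for k in range(min_years, len(years) + 1)]

years = list(range(1850, 2012))
# Hypothetical series, not observed data:
straight = [0.006 * (y - 1850) for y in years]        # 0.06 C per decade throughout
curved = [0.00004 * (y - 1850) ** 2 for y in years]   # warming that speeds up

straight_trends = overall_trends(years, straight)
curved_trends = overall_trends(years, curved)
```

Because every fit shares the same starting point, the early part of the record keeps weighting each slope; trends over a fixed-length rolling window are a common alternative when the question is about recent rates.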

Where you are wrong is that climate models don’t show your acceleration. Look at 20th century hindcasts. They simply do not show the acceleration you claim and yet I am 100% sure they show AGW.

Fine lolwot, you believe in your models and I’ll believe in observed data.

Humans have an intuitive sense of the way a model works. Take the place of an outfielder who is trying to catch a fly ball. He will instantaneously model the trajectory based on minimal information. If the fielder followed your advice, he would wait for the baseball to land on the grass and then run to it. A lot of good that will do.

And you can laugh at this analogy, but that’s the way we work. The only difference is that the practical human will innately use a model based on heuristics and acquired knowledge, whereas the scientist will use math and the corpus of scientific evidence.

You’re kidding me, right? How many fly-balls would the catcher have caught if he’d used the IPCC models instead of his own models based on his own historical evidence (ie. practice)?

Well they wouldn’t catch any if they used Postma’s or the skydragon’s models.

Precisely!

Which is why I don’t believe either set :)

They would be looking at where the average baseball lands and then they would try to catch the average every time. They would also overestimate the hitter’s ability due to the amount of CO2 in the air.

I know why they call them deniers. At every chance they try to deny another person from actively thinking and advancing an argument. The majority (with a couple of exceptions from the truly skeptical POV) will never actually help you and offer constructive criticism. Instead they just stomp around.

WHT,

.

[” The majority (with a couple of exceptions from the truly skeptical POV) will never actually help you and offer constructive criticism. Instead they just stomp around.”]

.

If that is directed at me, then I beg to disagree. I have engaged with several warmists on this thread alone and I think I have done so in a respectful and reasonable manner. I will be happy to discuss CO2 (the thread subject) with you if you so wish.

.

How do you think it is possible for a trace gas at less than 0.04% concentration to significantly affect global temperature?

.

If you don’t wish to discuss this basic premise of the cAGW debate, just let me know. I will assure you of my respectfulness as long as you do the same.

.

Regards,

Arfur

Arfur, so if the 2000s were 0.15 C warmer than the 1990s and the previous average was only 0.06 C per decade, you don’t count that as an acceleration? Could you clarify your definition of acceleration?

Jim D,

You are averaging by decade? Yes, the 2000s were warmer on average. But cAGW was sold to Joe Public as an impending catastrophe on the basis of unaveraged data. If the cAGW theory were correct, the MBH98 curve would still be increasing today. The FACT that the temperature has not risen above the 1998 level by itself effectively disproves the theory.

.

You can hide all sorts of significant data if you smooth out enough. Look here:

http://www.woodfortrees.org/plot/hadcrut3gl/from:1990/to:2010

.

Do you see an acceleration between the 90s and the 00s? Imagine you were a climber who walked from the start of the graph to the finish. You would have had to climb to the highest peak in 1998, but you would have spent longer at a greater average height in the 00s. By your argument of using decades, the climber would say he was ‘higher’ in the 00s, whereas history will show that he climbed ‘highest’ in 1998.

We have just had the warmest decade by 0.15 degrees. Why would that not be a sign of warming faster than your average of 0.06 degrees per decade? It is an acceleration by any definition, and the projections have it accelerating further to 0.3 or more degrees per decade if the CO2 increase continues to accelerate.

Jim D,

.

Please read what I wrote.

.

Or try this… If the temperatures stay flat for the next two hundred decades (or more), you will still be able to say that each decade ‘is the (equal) highest decade’! Unfortunately, there will have been no temperature increase and no acceleration. The climber will be walking across a very large, flat and high plateau…

.

Of course, if the temperature increases, we may reach a point where the overall trend rises above what it is today. That will show an increased trend but not necessarily an acceleration. For an acceleration, each successive overall trend has to be greater than the previous one.

.

Using short-term, intermediate trend lines (as the IPCC did), is irrelevant, as each short-term trend can change greatly. Only the overall trend counts.
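The decade-average argument and the plateau argument can both be checked with a short sketch of how decade means behave. It uses two hypothetical series, not observed data: a steady 0.015 K/yr rise, and the same rise that stops in 2000 and then stays flat (the ‘high plateau’ picture).

```python
def decade_means(years, temps, start, end):
    """Mean of temps for each full decade [d, d + 10) with start <= d < end."""
    means = {}
    for d in range(start, end, 10):
        vals = [x for y, x in zip(years, temps) if d <= y < d + 10]
        means[d] = sum(vals) / len(vals)
    return means

def decade_steps(means):
    """Change in decade mean from each decade to the next, keyed by the later decade."""
    decades = sorted(means)
    return {b: means[b] - means[a] for a, b in zip(decades, decades[1:])}

years = list(range(1950, 2030))
# Hypothetical series, not observed data:
steady = [0.015 * (y - 1950) for y in years]                # steady 0.15 K/decade
plateau = [0.015 * (min(y, 2000) - 1950) for y in years]    # rise stops in 2000

steady_steps = decade_steps(decade_means(years, steady, 1950, 2030))
plateau_steps = decade_steps(decade_means(years, plateau, 1950, 2030))
```

The steady series gives equal steps of 0.15 K per decade. The plateau series still makes the 2000s warmer than the 1990s, but from then on each decade ties as the ‘(equal) highest’ with a step of zero between them.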

I would probably try to account for volcanoes and ENSO.

http://www.drroyspencer.com/wp-content/uploads/UAH_LT_current.gif