by Ross McKitrick

Challenging the claim that a large set of climate model runs published since the 1970s are consistent with observations for the right reasons.

Introduction

Zeke Hausfather et al. (2019) (herein ZH19) examined a large set of climate model runs published since the 1970s and claimed they were consistent with observations, once errors in the emission projections are considered. It is an interesting and valuable paper and has received a lot of press attention. In this post, I will explain what the authors did and then discuss a couple of issues arising, beginning with IPCC over-estimation of CO2 emissions, a literature to which Hausfather et al. make a striking contribution. I will then present a critique of some aspects of their regression analyses. I find that they have not specified their main regression correctly, and this undermines some of their conclusions. Using a more valid regression model helps explain why their findings aren’t inconsistent with Lewis and Curry (2018) which did show models to be inconsistent with observations.

Outline of the ZH19 Analysis:

A climate model projection can be factored into two parts: the implied (transient) climate sensitivity (to increased forcing) over the projection period and the projected increase in forcing. The first derives from the model’s Equilibrium Climate Sensitivity (ECS) and the ocean heat uptake rate. It will be approximately equal to the model’s transient climate response (TCR), although the discussion in ZH19 is for a shorter period than the 70 years used for TCR computation. The second comes from a submodel that takes annual GHG emissions and other anthropogenic factors as inputs, generates implied CO2 and other GHG concentrations, then converts them into forcings, expressed in Watts per square meter. The emission forecasts are based on socioeconomic projections and are therefore external to the climate model.

ZH19 ask whether climate models have overstated warming once we adjust for errors in the second factor due to faulty emission projections. So it’s essentially a study of climate model sensitivities. Their conclusion, that models by and large generate accurate forcing-adjusted forecasts, implies that models have generally had valid TCR levels. But this conflicts with other evidence (such as Lewis and Curry 2018) that CMIP5 models have overly high TCR values compared to observationally-constrained estimates. This discrepancy needs explanation.

One interesting contribution of the ZH19 paper is their tabulation of the 1970s-era climate model ECS values. The wording in the ZH19 Supplement, which presumably reflects that in the underlying papers, doesn’t distinguish between ECS and TCR in these early models. The reported early ECS values are:

- Manabe and Weatherald (1967) / Manabe (1970) / Mitchell (1970): 2.3K

- Benson (1970) / Sawyer (1972) / Broecker (1975): 2.4K

- Rasool and Schneider (1971) 0.8K

- Nordhaus (1977): 2.0K

If these really are ECS values they are pretty low by modern standards. It is widely known that the 1979 Charney Report proposed a best-estimate range for ECS of 1.5–4.5K. The follow-up National Academy report in 1983 by Nierenberg et al. noted (p. 2) “The climate record of the past hundred years and our estimates of CO2 changes over that period suggest that values in the lower half of this range are more probable.” So those numbers might be indicative of general thinking in the 1970s. Hansen’s 1981 model considered a range of possible ECS values from 1.2K to 3.5K, settling on 2.8K for their preferred estimate, thus presaging the subsequent use of generally higher ECS values.

But it is not easy to tell if these are meant to be ECS or TCR values. The latter are always lower than ECS, due to slow adjustment by the oceans. Model TCR values in the 2.0–2.4 K range would correspond to ECS values in the upper half of the Charney range.

If the models have high interval ECS values, the fact that ZH19 find they stay in the ballpark of observed surface average warming, once adjusted for forcing errors, suggests it’s a case of being right for the wrong reason. The 1970s were unusually cold, and there is evidence that multidecadal internal variability was a significant contributor to accelerated warming from the late 1970s to 2008 (see DelSole et al. 2011). If the models didn’t account for that, instead attributing everything to CO2 warming, it would require excessively high ECS to yield a match to observations.

With those preliminary points in mind, here are my comments on ZH19.

There are some math errors in the writeup.

The main text of the paper describes the methodology only in general terms. The online SI provides statistical details including some mathematical equations. Unfortunately, they are incorrect and contradictory in places. Also, the written methodology doesn’t seem to match the online Python code. I don’t think any important results hang on these problems, but it means reading and replication is unnecessarily difficult. I wrote Zeke about these issues before Christmas and he has promised to make any necessary corrections to the writeup.

One of the most remarkable findings of this study is buried in the online appendix as Figure S4, showing past projection ranges for CO2 concentrations versus observations:

Bear in mind that, since there have been few emission reduction policies in place historically (and none currently that bind at the global level), the heavy black line is effectively the Business-as-Usual sequence. Yet the IPCC repeatedly refers to its high end projections as “Business-as-Usual” and the low end as policy-constrained. The reality is the high end is fictional exaggerated nonsense.

I think this graph should have been in the main body of the paper. It shows:

- In the 1970s, models (blue) had a wide spread but on average encompassed the observations (though they pass through the lower half of the spread);

- In the 1980s there was still a wide spread but now the observations hug the bottom of it, except for the horizontal line which was Hansen’s 1988 Scenario C;

- Since the 1990s the IPCC constantly overstated emission paths and, even more so, CO2 concentrations by presenting a range of future scenarios, only the minimum of which was ever realistic.

I first got interested in the problem of exaggerated IPCC emission forecasts in 2002 when the top-end of the IPCC warming projections jumped from about 3.5 degrees in the 1995 SAR to 6 degrees in the 2001 TAR. I wrote an op-ed in the National Post and the Fraser Forum (both available here) which explained that this change did not result from a change in climate model behaviour but from the use of the new high-end SRES scenarios, and that many climate modelers and economists considered them unrealistic. The particularly egregious A1FI scenario was inserted into the mix near the end of the IPCC process in response to government (not academic) reviewer demands. IPCC Vice-Chair Martin Manning distanced himself from it at the time in a widely-circulated email, stating that many of his colleagues viewed it as “unrealistically high.”

Some longstanding readers of Climate Etc. may also recall the Castles-Henderson critique which came out at this time. It focused on IPCC misuse of Purchasing Power Parity aggregation rules across countries. The effect of the error was to exaggerate the relative income differences between rich and poor countries, leading to inflated upper end growth assumptions for poor countries to converge on rich ones. Terence Corcoran of the National Post published an article on November 27 2002 quoting John Reilly, an economist at MIT, who had examined the IPCC scenario methodology and concluded it was “in my view, a kind of insult to science” and the method was “lunacy.”

Years later (2012-13) I published two academic articles (available here) in economics journals critiquing the IPCC SRES scenarios. Although global total CO2 emissions have grown quite a bit since 1970, little of this is due to increased average per capita emissions (which have only grown from about 1.0 to 1.4 tonnes C per person); instead it is mainly driven by global population growth, which is slowing down. The high-end IPCC scenarios were based on assumptions that population and per capita emissions would both grow rapidly, the latter reaching 2 tonnes per capita by 2020 and over 3 tonnes per capita by 2050. We showed that the upper half of the SRES distribution was statistically very improbable because it would require sudden and sustained increases in per capita emissions which were inconsistent with observed trends. In a follow-up article, my student Joel Wood and I showed that the high scenarios were inconsistent with the way global energy markets constrain hydrocarbon consumption growth. More recently Justin Ritchie and Hadi Dowlatabadi have explored the issue from a different angle, namely the technical and geological constraints that prevent coal use from growing in the way assumed by the IPCC (see here and here).

IPCC reliance on exaggerated scenarios is back in the news, thanks to Roger Pielke Jr.’s recent column on the subject (along with numerous tweets from him attacking the existence and usage of RCP8.5) and another recent piece by Andrew Montford. What is especially egregious is that many authors are using the top end of the scenario range as “business-as-usual”, even after, as shown in the ZH19 graph, we have had 30 years in which business-as-usual has tracked the bottom end of the range.

In December 2019 I submitted my review comments for the IPCC AR6 WG2 chapters. Many draft passages in AR6 continue to refer to RCP8.5 as the BAU outcome. This is, as has been said before, lunacy—another “insult to science”.

Apples-to-apples trend comparisons require removal of Pinatubo and ENSO effects

The model-observational comparisons of primary interest are the relatively modern ones, namely scenarios A—C in Hansen (1988) and the central projections from various IPCC reports: FAR (1990), SAR (1995), TAR (2001), AR4 (2007) and AR5 (2013). Since the comparison uses annual averages in the out-of-sample interval the latter two time spans are too short to yield meaningful comparisons.

Before examining the implied sensitivity scores, ZH19 present simple trend comparisons. In many cases they work with a range of temperatures and forcings but I will focus on the central (or “Best”) values to keep this discussion brief.

ZH19 find that Hansen 1988-A and 1988-B significantly overstate trends, but not the others. However, I find FAR does as well. SAR and TAR don’t but their forecast trends are very low.

The main forecast interval of interest is from 1988 to 2017. It is shorter for the later IPCC reports since the start year advances. To make trend comparisons meaningful, for the purpose of the Hansen (1988-2017) and FAR (1990-2017) interval comparisons, the 1992 (Mount Pinatubo) event needs to be removed since it depressed observed temperatures but is not simulated in climate models on a forecast basis. Likewise with El Nino events. By not removing these events the observed trend is overstated for the purpose of comparison with models.

To adjust for this I took the Cowtan-Way temperature series from the ZH19 data archive, which for simplicity I will use as the lone observational series, and filtered out volcanic and El Nino effects as follows. I took the IPCC AR5 volcanic forcing series (as updated by Nic Lewis for Lewis&Curry 2018), and the NCEP pressure-based ENSO index (from here). I regressed Cowtan-Way on these two series and obtained the residuals, which I denote as “Cowtan-Way adj” in the following Figure (note both series are shifted to begin at 0.0 in 1988):

The trends, in K/decade, are indicated in the legend. The two trend coefficients are not significantly different from each other (using the Vogelsang-Franses test). Removing the volcanic forcing and El Nino effects causes the trend to drop from 0.20 to 0.15 K/decade. The effect is minimal on intervals that start after 1995. In the SAR subsample (1995-2017) the trend remains unchanged at 0.19 K/decade and in the TAR subsample (2001-2017) the trend increases from 0.17 to 0.18 K/decade.
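As a rough illustration of the filtering step described above (not the code behind the reported numbers), the sketch below regresses a temperature series on a volcanic forcing series and an ENSO index, keeps the residuals, and compares OLS trends in K/decade. All of the input data here are synthetic placeholders, and the function names `filter_residuals` and `trend_per_decade` are hypothetical; the real inputs would be Cowtan-Way, the AR5 volcanic forcing series and the NCEP ENSO index.

```python
import numpy as np

def ols(y, X):
    """Ordinary least squares coefficients for y = X @ beta + e."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def filter_residuals(temp, volcanic, enso):
    """Residuals from regressing temp on a constant, volcanic forcing and ENSO."""
    X = np.column_stack([np.ones_like(temp), volcanic, enso])
    return temp - X @ ols(temp, X)

def trend_per_decade(years, series):
    """OLS linear trend of series against time, expressed per decade."""
    centred = years - years.mean()  # centring keeps the design matrix well conditioned
    X = np.column_stack([np.ones_like(centred), centred])
    return 10.0 * ols(series, X)[1]

# Synthetic placeholder data for 1988-2017 (assumptions, not the real series).
years = np.arange(1988, 2018, dtype=float)
t = years - years[0]
volcanic = np.zeros_like(years)
volcanic[3:6] = [-1.0, -3.0, -1.0]      # stylised Pinatubo-like dip, 1991-1993
enso = np.sin(2.0 * np.pi * t / 5.0)     # stylised ENSO-like oscillation
temp = 0.015 * t + 0.1 * volcanic + 0.1 * enso

resid = filter_residuals(temp, volcanic, enso)
adjusted = resid - resid[0]              # shift so the series begins at 0.0 in 1988

raw_trend = trend_per_decade(years, temp)
adj_trend = trend_per_decade(years, adjusted)
print(f"raw: {raw_trend:.3f} K/decade, adjusted: {adj_trend:.3f} K/decade")
```

In this toy setup the early volcanic dip inflates the raw trend, so the adjusted trend comes out lower, which is the direction of the effect described in the text. The sizes of the numbers carry no meaning beyond that.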

Here is what the adjusted Cowtan-Way data looks like, compared to the Hansen 1988 series:

The linear trend in the red line (adjusted observations) is 0.15 C/decade, just a bit above H88-C (0.12 C/decade) but well below the H88-A and H88-B trends (0.30 and 0.28 C/decade respectively).

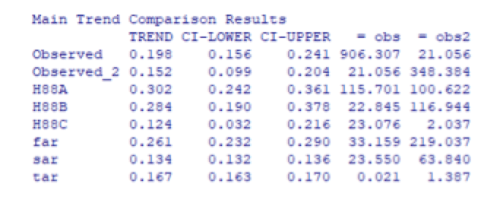

The ZH19 trend comparison methodology is an ad hoc mix of OLS and AR1 estimation. Since the methodology write-up is incoherent and their method is non-standard I won’t try to replicate their confidence intervals (my OLS trend coefficients match theirs however). Instead I’ll use the Vogelsang-Franses (VF) autocorrelation-robust trend comparison methodology from the econometrics literature. I computed trends and 95% CI’s in the two CW series, the 3 Hansen 1988 A,B,C series and the first three IPCC out-of-sample series (denoted FAR, SAR and TAR). The results are as follows:

The OLS trends (in K/decade) are in the 1st column and the lower and upper bounds on the 95% confidence intervals are in the next two columns.

The 4th and 5th columns report VF test scores, for which the 95% critical value is 41.53. In the first two rows, the diagonal entries (906.307 and 348.384) are tests on a null hypothesis of no trend; both reject at extremely small significance levels (indicating the trends are significant). The off-diagonal scores (21.056) test if the trends in the raw and adjusted series are significantly different. It does not reject at 5%.

The entries in the subsequent rows test if the trend in that row (e.g. H88-A) equals the trend in, respectively, the raw and adjusted series (i.e. obs and obs2), after adjusting the sample to have identical time spans. If the score exceeds 41.53 the test rejects, meaning the trends are significantly different.

The Hansen 1988-A trend forecast significantly exceeds that in both the raw and adjusted observed series. The Hansen 1988-B forecast trend does not significantly exceed that in the raw CW series but it does significantly exceed that in the adjusted CW (since the VF score rises to 116.944, which exceeds the 95% critical value of 41.53). The Hansen 1988-C forecast is not significantly different from either observed series. Hence, the only Hansen 1988 forecast that matches the observed trend, once the volcanic and El Nino effects are removed, is scenario C, which assumes no increase in forcing after 2000. The post-1998 slowdown in observed warming ends up matching a model scenario in which no increase in forcing occurs, but does not match either scenario in which forcing is allowed to increase, which is interesting.

The forecast trends in FAR and SAR are not significantly different from the raw Cowtan-Way trends but they do differ from the adjusted Cowtan-Way trends. (The FAR trend also rejects against the raw series if we use GISTEMP, HadCRUT4 or NOAA). The discrepancy between FAR and observations is due to the projected trend being too large. In the SAR case, the projected trend is smaller than the observed trend over the same interval (0.13 versus 0.19). The adjusted trend is the same as the raw trend but the series has less variance, which is why the VF score increases. In the case of CW and Berkeley it rises enough to reject the trend equivalence null; if we use GISTEMP, HadCRUT4 or NOAA neither raw nor adjusted trends reject against the SAR trend.

The TAR forecast for 2001-2017 (0.167 K/decade) never rejects against observations.

So to summarize, ZH19 go through the exercise of comparing forecast to observed trends and, for the Hansen 1988 and IPCC trends, most forecasts do not significantly differ from observations. But some of that apparent fit is due to the 1992 Mount Pinatubo eruption and the sequence of El Nino events. Removing those, the Hansen 1988-A and B projections significantly exceed observations while the Hansen 1988 C scenario does not. The IPCC FAR forecast significantly overshoots observations and the IPCC SAR significantly undershoots them.

In order to refine the model-observation comparison it is also essential to adjust for errors in forcing, which is the next task ZH19 undertake.

Implied TCR regressions: a specification challenge

ZH19 define an implied Transient Climate Response (TCR) as

$$\mathrm{TCR}_{\text{implied}} = F_{2\times}\,\frac{dT}{dF}$$

where T is temperature, F is anthropogenic forcing, and the derivative is computed as the least squares slope coefficient from regressing temperature on forcing over time. Suppressing the constant term the regression for model i is simply

$$T_{it} = \beta_i F_{it} + e_{it}$$

The TCR for model i is therefore

$$\mathrm{TCR}_i = 3.7 \times \hat{\beta}_i$$

where 3.7 (W/m2) is the assumed value of $F_{2\times}$, the equilibrium CO2 doubling coefficient. They find 14 of the 17 implied TCR’s are consistent with an observational counterpart, defined as the slope coefficient from regressing temperatures on an observationally-constrained forcing series.
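To make the definition concrete, here is a minimal sketch of an implied-TCR calculation as described in words above: a least squares slope of temperature on forcing, scaled by 3.7 W/m2. It is not the ZH19 code. The function name `implied_tcr` and the input series are hypothetical, and the intercept is estimated explicitly rather than suppressed.

```python
import numpy as np

F_2X = 3.7  # W/m^2, the CO2 doubling forcing value quoted in the text

def implied_tcr(temp, forcing):
    """Least squares slope of temp on forcing (with intercept), scaled by F_2X."""
    X = np.column_stack([np.ones_like(forcing), forcing])
    beta, *_ = np.linalg.lstsq(X, temp, rcond=None)
    return F_2X * beta[1]

# Hypothetical, noise-free series: 0.5 K of warming per W/m^2 of forcing.
forcing = np.linspace(1.0, 2.5, 30)   # W/m^2
temp = 0.1 + 0.5 * forcing            # K

tcr = implied_tcr(temp, forcing)
print(f"implied TCR: {tcr:.2f} K")
```

With an exact slope of 0.5 K per W/m2 the implied TCR is 0.5 × 3.7 = 1.85 K. With real data the slope, and hence the TCR, inherits whatever problems the levels regression has, which is the subject of the rest of this section.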

Regarding the post-1988 cohort, unfortunately ZH19 relied on an ARIMA(1,0,0) regression specification, or in other words a linear regression with AR1 errors. While the temperature series they use are mostly trend stationary (i.e. stationary after de-trending), their forcing series are not. They are what we call in econometrics integrated of order 1, or I(1), namely the first differences are trend stationary but the levels are nonstationary. I will present a very brief discussion of this but I will save the longer version for a journal article (or a formal comment on ZH19).

There is a large and growing literature in econometrics journals on this issue as it applies to climate data, with lots of competing results to wade through. On the time spans of the ZH19 data sets, the standard tests I ran (namely Augmented Dickey-Fuller) indicate temperatures are trend-stationary while forcings are nonstationary. Temperatures therefore cannot be a simple linear function of forcings, otherwise they would inherit the I(1) structure of the forcing variables. Using an I(1) variable in a linear regression without modeling the nonstationary component properly can yield spurious results. Consequently it is a misspecification to regress temperatures on forcings (see Section 4.3 in this chapter for a partial explanation of why this is so).
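For readers who have not seen the spurious regression problem before, the simulation below is a sketch of the textbook case: two independent random walks regressed on each other, with the ordinary OLS t-test applied to the slope. This is the classic illustration of why I(1) variables need care. It is not a replication of the exact I(0)/I(1) configuration discussed here, and the sample size of 30 and the cutoff of 2.0 are assumptions chosen for illustration.

```python
import numpy as np

def slope_t_stat(y, x):
    """Conventional OLS t-statistic on the slope of y = a + b*x + e."""
    n = len(y)
    X = np.column_stack([np.ones(n), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - 2)
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])

def rejection_rate(n_obs, n_sims, integrated, seed=0):
    """Share of simulations in which |t| > 2 although y and x are independent."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(n_sims):
        y = rng.standard_normal(n_obs)
        x = rng.standard_normal(n_obs)
        if integrated:
            # Cumulative sums of white noise are random walks, i.e. I(1) series.
            y, x = np.cumsum(y), np.cumsum(x)
        if abs(slope_t_stat(y, x)) > 2.0:
            rejections += 1
    return rejections / n_sims

rate_white_noise = rejection_rate(30, 2000, integrated=False)
rate_random_walk = rejection_rate(30, 2000, integrated=True)
print(f"independent I(0) series: {rate_white_noise:.2f}")
print(f"independent I(1) series: {rate_random_walk:.2f}")
```

The white-noise case should reject at roughly the nominal rate, while the random-walk case rejects far more often even though the series are unrelated by construction.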

How should such a regression be done? Some time series analysts are trying to resolve this dilemma by claiming that temperatures are I(1). I can’t replicate this finding on any data set I’ve seen, but if it turns out to be true it has massive implications including rendering most forms of trend estimation and analysis hitherto meaningless.

I think it is more likely that temperatures are I(0), as are natural forcings, and anthropogenic forcings are I(1). But this creates a big problem for time series attribution modeling. It means you can’t regress temperature on forcings the way ZH19 did; in fact it’s not obvious what the correct way would be. One possible way to proceed is called the Toda-Yamamoto method, but it is only usable when the lags of the explanatory variable can be included, and in this case they can’t because they are perfectly collinear with each other. The main other option is to regress the first differences of temperatures on first differences of forcings, so I(0) variables are on both sides of the equation. This would imply an ARIMA(0,1,0) specification rather than ARIMA(1,0,0).

But this wipes out a lot of information in the data. I did this for the later models in ZH19, regressing each one’s temperature series on each one’s forcing input series, using a regression of Cowtan-Way on the IPCC total anthropogenic forcing series as an observational counterpart. Using an ARIMA(0,1,0) specification except for AR4 (for which ARIMA(1,0,0) is indicated) yields the following TCR estimates:

The comparison of interest is OBS1 and OBS2 to the H88a—c results, and for each IPCC report the OBS-(startyear) series compared to the corresponding model-based value. I used the unadjusted Cowtan-Way series as the observational counterparts for FAR and after.

In one sense I reproduce the ZH19 findings that the model TCR estimates don’t significantly differ from observed, because of the overlapping spans of the 95% confidence intervals. But that’s not very meaningful since the 95% observational CI’s also encompass 0, negative values, and implausibly high values. They also encompass the Lewis & Curry (2018) results. Essentially, what the results show is that these data series are too short and unstable to provide valid estimates of TCR. The real difference between models and observations is that the IPCC models are too stable and constrained. The Hansen 1988 results actually show a more realistic uncertainty profile, but the TCR’s differ a lot among the three of them (point estimates 1.5, 1.9 and 2.4 respectively) and for two of the three they are statistically insignificant. And of course they overshoot the observed warming.

The appearance of precise TCR estimates in ZH19 is spurious due to their use of ARIMA(1,0,0) with a nonstationary explanatory variable. A problem with my approach here is that the ARIMA(0,1,0) specification doesn’t make efficient use of information in the data about potential long run or lagged effects between forcings and temperatures, if they are present. But with such short data samples it is not possible to estimate more complex models, and the I(0)/I(1) mismatch between forcings and temperatures rules out finding a simple way of doing the estimation.
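The first-difference approach discussed above can be sketched as follows. This is an illustration under stated assumptions, not the code used for the estimates reported here: the differenced equation is fitted without a constant (a constant in levels drops out on differencing), the errors in differences are treated as white noise, the function name `tcr_first_difference` is hypothetical, and the data are synthetic.

```python
import numpy as np

F_2X = 3.7  # W/m^2, the CO2 doubling forcing value quoted in the text

def tcr_first_difference(temp, forcing):
    """Regress first differences of temp on first differences of forcing.

    Returns the implied TCR point estimate and its standard error,
    both scaled by F_2X. No constant is included in the differenced equation.
    """
    d_temp = np.diff(temp)
    d_forc = np.diff(forcing)
    slope = (d_forc @ d_temp) / (d_forc @ d_forc)
    resid = d_temp - slope * d_forc
    sigma2 = resid @ resid / (len(d_temp) - 1)
    se = np.sqrt(sigma2 / (d_forc @ d_forc))
    return F_2X * slope, F_2X * se

# Synthetic example (assumption): slowly rising forcing, noisy annual temperatures.
rng = np.random.default_rng(1)
forcing = 1.0 + np.cumsum(rng.uniform(0.02, 0.06, size=30))   # W/m^2
temp = 0.5 * forcing + rng.normal(0.0, 0.1, size=30)          # K

estimate, std_err = tcr_first_difference(temp, forcing)
print(f"TCR estimate: {estimate:.2f} K, "
      f"approx. 95% CI: [{estimate - 2 * std_err:.2f}, {estimate + 2 * std_err:.2f}]")
```

Because year-to-year forcing changes are small relative to year-to-year temperature noise, the interval from a short sample like this tends to be very wide, which is the loss of information referred to above.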

Conclusion

The apparent inconsistency between ZH19 and studies like Lewis & Curry 2018 that have found observationally-constrained ECS to be low compared to modeled values disappears once the regression specification issue is addressed. The ZH19 data samples are too short to provide valid TCR values and their regression model is specified in such a way that it is susceptible to spurious precision. So I don’t think their paper is informative as an exercise in climate model evaluation.

It is, however, informative with regards to past IPCC emission/concentration projections and shows that the IPCC has for a long time been relying on exaggerated forecasts of global greenhouse gas emissions.

I’m grateful to Nic Lewis for his comments on an earlier draft.

Comment from Nic Lewis

These early models only allowed for increases in forcing from CO2, not from all forcing agents. Since 1970, total forcing (per IPCC AR5 estimates) has grown more than 50% faster than CO2-only forcing, so if early model temperature trends and CO2 concentration trends over their projection periods are in line with observed warming and CO2 concentration trends, their TCR values must have been more than 50% above that implied by observations.
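The arithmetic behind this comment can be written out using the implied TCR definition from the post, $\mathrm{TCR} = F_{2\times}\,\Delta T / \Delta F$. This is a sketch of the reasoning under the stated premise, with $\Delta F_{\mathrm{CO2}}$ and $\Delta F_{\mathrm{total}}$ introduced here as notation for the CO2-only and total forcing changes over the projection period. If an early model is driven by CO2 forcing only and reproduces both the observed warming $\Delta T$ and the observed CO2 forcing change, then

$$\frac{\mathrm{TCR}_{\text{model}}}{\mathrm{TCR}_{\text{obs}}} = \frac{F_{2\times}\,\Delta T / \Delta F_{\mathrm{CO2}}}{F_{2\times}\,\Delta T / \Delta F_{\mathrm{total}}} = \frac{\Delta F_{\mathrm{total}}}{\Delta F_{\mathrm{CO2}}} > 1.5$$

where the inequality uses the statement that total forcing has grown more than 50% faster than CO2-only forcing since 1970.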

Moderation note: As with all guest posts, please keep your comments civil and relevant.

Reblogged this on Climate Collections and commented:

Conclusion: “…I don’t think their paper [ZH19] is informative as an exercise in climate model evaluation.

It is, however, informative with regards to past IPCC emission/concentration projections and shows that the IPCC has for a long time been relying on exaggerated forecasts of global greenhouse gas emissions.”

Exaggerated? To exaggerate is intentional, and you can hardly prove that.

Just use “wrong”.

Ross, this post is of interest to me on several levels, but I will comment on only one point here.

Using an ARIMA(0,1,0) model to obtain stationarity with a temperature or forcing series does not make sense from a physical point of view, as it implies a random walk. An ARFIMA model with a fractional d value would not imply a random walk, but a d value less than 0.5 would imply a long-memory model, which might not be applicable and would be difficult or impossible to test with a short series.

What if a secular trend could be extracted from a non-linear and non-stationary series with an empirical method like complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN), and the resulting temperature and forcing CEEMDAN trends were then regressed on each other?

Hi Ross

An important summary. I hope you will also submit for publication.

My comment:

The acceptance of the surface temperature anomaly as quantitatively robust remains an issue. We have shown several issues with its accuracy on land.

Pielke Sr., R.A., C. Davey, D. Niyogi, S. Fall, J. Steinweg-Woods, K. Hubbard, X. Lin, M. Cai, Y.-K. Lim, H. Li, J. Nielsen-Gammon, K. Gallo, R. Hale, R. Mahmood, S. Foster, R.T. McNider, and P. Blanken, 2007: Unresolved issues with the assessment of multi-decadal global land surface temperature trends. J. Geophys. Res., 112, D24S08, doi:10.1029/2006JD008229. http://pielkeclimatesci.wordpress.com/files/2009/10/r-321.pdf

The clearest issue is the use of minimum temperatures and temperatures at high latitudes in the winter when the surface layer is stably stratified. As shown in

McNider, R.T., G.J. Steeneveld, B. Holtslag, R. Pielke Sr, S. Mackaro, A. Pour Biazar, J.T. Walters, U.S. Nair, and J.R. Christy, 2012: Response and sensitivity of the nocturnal boundary layer over land to added longwave radiative forcing. J. Geophys. Res., 117, D14106, doi:10.1029/2012JD017578. Copyright (2012) American Geophysical Union. https://pielkeclimatesci.files.wordpress.com/2013/02/r-371.pdf

where it is concluded

“Based on these model analyses, it is likely that part of the observed long-term increase in minimum temperature is reflecting a redistribution of heat by changes in turbulence and not by an accumulation of heat in the boundary layer. Because of the sensitivity of the shelter level temperature to parameters and forcing, especially to uncertain turbulence parameterization in the SNBL, there should be caution about the use of minimum temperatures as a diagnostic global warming metric in either observations or models.”

This will introduce a warm bias in your use of surface temperature when applying to estimate global warming.

Best Regards Roger Sr.

“…past IPCC emission/concentration projections and shows that the IPCC has for a long time been relying on exaggerated forecasts of global greenhouse gas emissions.”

When we know the ECS and the TCR we aren’t done. Controlling CO2 emissions for one, and land use for another brings in more uncertainty. Where is the control on these two things? Governments and individuals. And governments don’t seem to be reaching a lot of agreement and most individuals in this world don’t care enough to do anything. Because of their economic situation, they can’t.

Using emissions to drive Antarctic ice sheet collapse. Whatever the prediction, it’s based on emissions. So when they say by the year 2050, it’s based upon what individuals and governments do. It’s science based on what people do in the next 10, 20 and 25 years. It’s science that says, if you don’t want this to happen, do this. It is prescriptive. I can’t see that someone can argue otherwise.

To attempt to clarify, any study that mentions a year in the future such as 2050, relies on X amount of emissions. No future year can exist without an emission assumption.

“It’s science that says, if you don’t want this to happen, do this. It is prescriptive. I can’t see that someone can argue otherwise.”

An interpretation of the data allows some to predict catastrophe implying that we not only know its cause but have the means to avoid it. I believe you are correct in your interpretation that the predictions are all based on emissions. An alternate view of the data rejects that emissions control temperature or even atmospheric concentration of CO2. Same science, different assumptions, different conclusions. Temperature leads CO2 concentration on all time scales. Solid physical analysis concludes that only about 15% of the recent increase in CO2 is due to human activity. There is no correlation of emissions to temperature. I do not think reduction of fossil fuel use would make any measurable difference in any climate attributes.

@Judith: Gavin Schmidt had a nice thread yesterday summarizing the key scientific evidence behind the CO2-as-main-driver hypothesis. It would be nice to read a qualified response: https://threader.app/thread/1217885474502729728

I’m not Judy, however: the question is NOT if the anthropogenic forcing is a main reason of the observed increase of the GMST BUT how much? In other words: what sensitivity do we observe? Following this paper https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2019MS001838?af=R which made a plausibility test in fig. 14 it’s clear that every TCR estimate above 1.6 K/2*CO2 gives too much warming:

https://i.imgur.com/qPClsMm.jpg

The model mean is 1.83! Only 5 models are within a 20% margin and their mean is 1.45 K/2*CO2, but IMO the whole approach is questionable due to too little skill.

So the paper you linked argues that with a TCR of 1.45 K and CO2 as the main driver you can explain all of modern warming since 1880? If so, why does the IPCC assume a much higher TCR?

Tobias, a last response: The IPCC doesn’t argue with a “much higher TCR” (indeed it works with an interval of 1 K to 2.5 K per 2*CO2), but the CMIP5 climate models do. After evaluating against the observed warming retrospectively to 2018, it seems that the model mean TCR is (much) too high, following the cited paper, which excludes values above 2K/2*CO2 as “unlikely”; see the attached figure.

Thanks Frank, but this wasn’t my question. I asked about Gavin Schmidt and the IPCC, not the cmip5 model

@Frank: It’s not about “a main driver” but “the main driver”, as the IPCC suggests. If it’s the main driver, it has to fully explain modern warming. In fact it has to explain more than observed warming, as the IPCC includes cooling effects.

Tobias, sorry…the TCR approach I mentioned also includes “cooling effects”, e.g. from aerosols. It’s helpful to take notice of the “ERF” data, you’ll find it in AR5 WG1. Good luck for educating.

See my response above. Why does the IPCC assume much higher values to explain observed modern warming?

If you neglect solar indirect effects and multi-decadal and longer internal variation of ocean circulations, you are easily led to a conclusion of CO2 as main driver.

Thanks Judith. Gavin writes in his thread:

–

We can also look at the testable, falsifiable, theories that were tested, and failed.

Solar forcing? Fails the strat cooling test.❌

Ocean circulation change? Fails the OHC increase test ❌

Orbital forcing? Fails on multiple levels ❌

–

Are these points false, or contested?

Surely Willie (Soon) and solar scientists are right about the primacy of the sun. Why? Because the observable real world is the final test of science. And the data – actual evidence – shows that global temperatures follow changes in solar brightness on all time-scales, from decades to millions of years. On the other hand, CO2 and temperature have generally gone their own separate ways on these time scales.

https://wattsupwiththat.com/2018/12/02/dr-willie-soon-versus-the-climate-apocalypse/

We can contrast low solar intensity and Holocene maximum temperature with low solar intensity and the LIA. Both seem associated with more upwelling in the eastern Pacific Ocean – that may indeed be indirectly solar triggered through the Mansurov effect. But changes in solar intensity are minor. To explain the contrast we would need to consider ice, cloud or CO2 changes – or all three and more.

https://www.pnas.org/content/pnas/109/16/5967/F3.medium.gif

curryja:

“If you neglect solar indirect effects and multi-decadal and longer internal variation of ocean circulations…”

The AMO is an inverse response to solar wind variability. With stronger solar wind we see global cooling like in the 1970’s, and with weaker solar wind we see global warming like post 1995. That is the most important dynamic in the climate system.

Brava, Judith. Bravo, Ordvic

“Changes in the Earth’s radiation budget are driven by changes in the balance between the thermal emission from the top of the atmosphere and the net sunlight absorbed. The shortwave radiation entering the climate system depends on the Sun’s irradiance and the Earth’s reflectance. Often, studies replace the net sunlight by proxy measures of solar irradiance, which is an oversimplification used in efforts to probe the Sun’s role in past climate change. With new helioseismic data and new measures of the Earth’s reflectance, we can usefully separate and constrain the relative roles of the net sunlight’s two components, while probing the degree of their linkage. First, this is possible because helioseismic data provide the most precise measure ever of the solar cycle, which ultimately yields more profound physical limits on past irradiance variations. Since irradiance variations are apparently minimal, changes in the Earth’s climate that seem to be associated with changes in the level of solar activity, the Maunder Minimum and the Little Ice age for example, would then seem to be due to terrestrial responses to more subtle changes in the Sun’s spectrum of radiative output. This leads naturally to a linkage with terrestrial reflectance, the second component of the net sunlight, as the carrier of the terrestrial amplification of the Sun’s varying output. Much progress has also been made in determining this difficult to measure, and not-so-well-known quantity. We review our understanding of these two closely linked, fundamental drivers of climate.” http://bbso.njit.edu/Research/EarthShine/literature/Goode_Palle_2007_JASTP.pdf

Although the adults are talking indirect effects – in this and in decadal variability;

Gavin plus + and minuses x

“First off, we start with the observations:

1) spectra from space showing absorption of upward infra-red radiation from the Earth’s surface.

– yes +

2) Measurements from around the world showing increases in CO2, CH4, CFCs, N2O.

-No x

Fails to mention biggest GHG, atmospheric water.

Fails to quantify the amount of increase.

Fails to mention all possible causes of these increases, some of which are natural in a warming world

3) In situ & space based observations of land use change

No x

Fails to tie in why valid

Fails to mention increased vegetation observed from space

Fails to mention highest CO2 in jungle areas

We develop theories.

1) Radiative-transfer (e.g. Manabe and Wetherald, 1967)

yes+

2) Energy-balance models (Budyko 1961 and many subsequent papers)

yes+

3) GCMs (Phillips 1956, Hansen et al 1983, CMIP etc.)

Yes +

We make falsifiable predictions. Here are just a few:

1967: increasing CO2 will cause the stratosphere to cool

Blatant half truth, the corollary was that there should be a detectable hot spot.

Sorry that part of the hypothesis was falsified

1981: increasing CO2 will cause warming at surface to be detectable by 1990s

No x. “Will cause warming” is correct. “Detectable” warming is not: with large natural variability it is quite easy to have such a small signal hidden.

1988: warming from increasing GHGs will lead to increases in ocean heat content.

No brainer

1991: Eruption of Pinatubo will lead to ~2-3 yrs of cooling.

No brainer

2001: Increases in GHGs will be detectable in space-based spectra

No brainer spectra known for centuries

2004: Increases in GHGs will lead to continued warming at ~0.18ºC/decade.

No xxx

This is so blatantly wrong by Gavin. There are so many predictions of continued warming. Most of them much higher and much scarier and most supported by him. So what does he do?

Picks out the current warming rate and claims that was the prediction.

Wimp

–

We test the predictions:

Stratospheric cooling? ✅

Detectable warming? X . Not detectable CO2 warming, Gavin, no fingerprint of CO2.

OHC increase? X who would know? Vast tracts of made up data 0.01 -0.2 C in the upper ocean over 60 years with a higher yearly SD error multiplied x60 Don’t claim what you cannot test precisely enough.

Pinatubo-related cooling?✅

Space-based changed in IR absorption? ✅

post-2004 continued warming? Conveniently excluding his little increases in GHG? XXX There is warming , but no link to CO2 is needed for warming or cooling XXX

With this validated physics, we can estimate contributions to the longer term trends.

Hold on, the physics is not validated

This too is of course falsifiable. If one could find a model system that matches all of the previously successful predictions in hindcasts, and gives a different attribution, we could test that. [Note this does not (yet) exist, but let’s keep an open mind].

xx like Tamino Gavin is of course telling others not to prejudge because he has already judged it for them

We can also look at the testable, falsifiable, theories that were tested, and failed.

Solar forcing? Fails the strat cooling test.❌

Ocean circulation change? Fails the OHC increase test

Returns to the great misinformation. When all else fails claim unprovable OHC increase.

When the models fail blame it on Ocean circulation.

All that missing heat has gone in currents deep under the ocean and will emerge in hundreds of years time

When the current theory threatens the CO2 theory , flush it down the drain.

Orbital forcing? Fails on multiple levels ❌

Clouds? x Can’t trust those Spencer and Christy fellows, heads in the clouds you know.

If you have a theory that you don’t think has been falsified, or you think you can falsify the mainstream conclusions, that’s great!

Join us at modifythedata.com and we can make your theory just as good as ours.

Thanks angech, that’s a concise rebuttal. But do you agree with his point that “Solar forcing? Fails the strat cooling test.”, or is this also moot?

Tobias

“Thanks angech, that’s a concise rebuttal.”

Thank you. Appreciated.

But do you agree with his point that “Solar forcing? Fails the strat cooling test.”, or is this also moot?

I do not know.

In default I would defer to Gavin unless Ross or others here wish to contest it.

–

I would say that the sun shows a remarkably narrow range of temperature flux which indicates an extremely well mixed substrate of homogenous material and while some solar forcing is possible this is unlikely to be a significant cause of temperature variation.

–

The reasons for “natural” temperature variations over the course of hours to centuries is the incredible mixing of the gases, water, ice, land and subterranean water in the cement mixer of the rotating earth combined with the “steam” ( clouds) that come off from the earth and hide it (change the albedo constantly) from the sun.

These changes in circulation and distribution of the heat load can cause widespread temperature changes in some of the substrates that can persist for up to hundreds of years despite the constant return of the overall heat input from the sun.

–

The concept of solar forcing and providing provenance is arcane and arguable, like the hot spot . If wrong on one does that give him double credit for being right on the other?

My first +1 ever. 😊

angech | January 17, 2020 at 4:44 pm

Thank you angech; that was a good post.

” If so, why does the IPCC a much higher TCR?”

Because if there’s a low TCR, then we can’t see the scary big rises in temperature, so no need for CO2 taxes, indulgences &, indeed, the IPCC itself. A complete collapse in the global scares market, no more Carry on Partyings, Funding for Climastrological research reduced by a logarithmic amount, mass redundancies amongst Climastrological departments at universities & producers of wind & solar subsidy farming equipment. Plenty of politicians trying to salvage their careers & a few billion people asking some very hard questions as to why they’ve paid all this money in taxes to “solve” a non-problem.

He’s being ridiculous. He knows full well that changing ocean currents can both warm the surface and increase OHC at the same time. Did the stratosphere start cooling? The last I’d heard that stopped about 1995, but that doesn’t mean it hasn’t been re-evaluated since the last time I heard.

Ross,

It’s been a few decades since I had to sign off on any design control documents, but I concur with you that, at a minimum, “this graph should have been in the main body of the paper. It shows:…”.

I would have included some comment about the graph in the Abstract as well.

From Section 1 of The coming cooling: usefully accurate climate forecasting for policy makers. https://journals.sagepub.com/doi/pdf/10.1177/0958305X16686488

and an earlier accessible blog version at http://climatesense-norpag.blogspot.com/2017/02/the-coming-cooling-usefully-accurate_17.html See also https://climatesense-norpag.blogspot.com/2018/10/the-millennial-turning-point-solar.html

“For the atmosphere as a whole therefore cloud processes, including convection and its interaction with boundary layer and larger-scale circulation, remain major sources of uncertainty, which propagate through the coupled climate system. Various approaches to improve the precision of multi-model projections have been explored, but there is still no agreed strategy for weighting the projections from different models based on their historical performance so that there is no direct means of translating quantitative measures of past performance into confident statements about fidelity of future climate projections.The use of a multi-model ensemble in the IPCC assessment reports is an attempt to characterize the impact of parameterization uncertainty on climate change predictions. The shortcomings in the modeling methods, and in the resulting estimates of confidence levels, make no allowance for these uncertainties in the models. In fact, the average of a multi-model ensemble has no physical correlate in the real world.

The IPCC AR4 SPM report section 8.6 deals with forcing, feedbacks and climate sensitivity. It recognizes the shortcomings of the models. Section 8.6.4 concludes in paragraph 4 (4): “Moreover it is not yet clear which tests are critical for constraining the future projections, consequently a set of model metrics that might be used to narrow the range of plausible climate change feedbacks and climate sensitivity has yet to be developed”

What could be clearer? The IPCC itself said in 2007 that it doesn’t even know what metrics to put into the models to test their reliability. That is, it doesn’t know what future temperatures will be and therefore can’t calculate the climate sensitivity to CO2. This also begs a further question of what erroneous assumptions (e.g., that CO2 is the main climate driver) went into the “plausible” models to be tested any way. The IPCC itself has now recognized this uncertainty in estimating CS – the AR5 SPM says in Footnote 16 page 16 (5): “No best estimate for equilibrium climate sensitivity can now be given because of a lack of agreement on values across assessed lines of evidence and studies.” Paradoxically the claim is still made that the UNFCCC Agenda 21 actions can dial up a desired temperature by controlling CO2 levels. This is cognitive dissonance so extreme as to be irrational. There is no empirical evidence which requires that anthropogenic CO2 has any significant effect on global temperatures.

The climate model forecasts, on which the entire Catastrophic Anthropogenic Global Warming meme rests, are structured with no regard to the natural 60+/- year and, more importantly, 1,000 year periodicities that are so obvious in the temperature record. The modelers’ approach is simply a scientific disaster and lacks even average commonsense. It is exactly like taking the temperature trend from, say, February to July and projecting it ahead linearly for 20 years beyond an inversion point. The models are generally back-tuned for less than 150 years when the relevant time scale is millennial. The radiative forcings shown in Fig. 1 reflect the past assumptions. The IPCC future temperature projections depend in addition on the Representative Concentration Pathways (RCPs) chosen for analysis. The RCPs depend on highly speculative scenarios, principally population and energy source and price forecasts, dreamt up by sundry sources. The cost/benefit analysis of actions taken to limit CO2 levels depends on the discount rate used and allowances made, if any, for the future positive economic effects of CO2 production on agriculture and of fossil fuel based energy production. The structural uncertainties inherent in this phase of the temperature projections are clearly so large, especially when added to the uncertainties of the science already discussed, that the outcomes provide no basis for action or even rational discussion by government policymakers. The IPCC range of ECS estimates reflects merely the predilections of the modellers – a classic case of “Weapons of Math Destruction” (6).

Harrison and Stainforth 2009 say (7): “Reductionism argues that deterministic approaches to science and positivist views of causation are the appropriate methodologies for exploring complex, multivariate systems where the behavior of a complex system can be deduced from the fundamental reductionist understanding. Rather, large complex systems may be better understood, and perhaps only understood, in terms of observed, emergent behavior. The practical implication is that there exist system behaviors and structures that are not amenable to explanation or prediction by reductionist methodologies. The search for objective constraints with which to reduce the uncertainty in regional predictions has proven elusive. The problem of equifinality ……. that different model structures and different parameter sets of a model can produce similar observed behavior of the system under study – has rarely been addressed.” A new forecasting paradigm is required.”

“The climate model forecasts, on which the entire Catastrophic Anthropogenic Global Warming meme rests, are structured with no regard to the natural 60+/- year and, more importantly, 1,000 year periodicities that are so obvious in the temperature record. The modelers approach is simply a scientific disaster and lacks even average common sense”

Ignoring the evidence of natural variability allows them to put their brains on sterile autopilot. No mess. No questions. No complexity. It’s a great public relations/propaganda strategy, known as fast food science, just stop in for a few seconds and fill up with the latest orders on how to think. An appeal to the lowest common denominator.

Good posts.

One doesn’t need to be a scientist to see that there’s a good amount of so called science that “lacks even average common sense”. It’s probably not in the peripheral vision of many serious scientists how the blame game complex seriously damages science, or even how it works; how brand marketing is sadly leveraged against the best interests of scientific truth, and how entrenched the forces that create it are. But it’s these forces that drive confirmation biases, desires for particular political outcomes, world views; all feeding the consensus “malarkey” beast (thanks Joe for the revival of malarkey, it all comes into focus now).

Certain “peer consensus” labels add leverage to advance falsehoods, in cases where the label is abused to facilitate biased work for use in branding efforts. It enables a cloak, spearheading unreproachable science (at least where it counts, with politics). Such branded work provides just enough fuel to drive politics, but it’s much louder than real science. This is a basic methodology for how nonsense science usurps real science. These ideas are birthed to the media, who then lobby scientific falsehoods, or exaggerations to the public. Some of the false science: climate change causing wildfires; more hurricanes occurring; harsher hurricanes; lower crop yields; higher rates of disease; the list goes on and on, all of these are designs to coerce policy.

While I believe there’s a good argument that AGW has caused some of the recent warming, there’s yet too many variables that make it unconvincing relative to advertised “degree”. Science proves CO2 is an aggravator of temperature because it’s a GHG, but I still haven’t seen anything that has ruled out CO2 being primarily follower of temperature as demonstrable in the historical record: http://joannenova.com.au/global-warming-2/ice-core-graph/

Mann’s hockey stick doesn’t look like much of a stick within a 12k year temperature record, it looks like a small blip in fact; a blip that’s only demonstrable because contemporary science has the means to add granularity to data over the last 150 years. If it were possible to add this level of “noise” to an 800k year chart, overlayed with a CO2 chart, my bet is there would probably have to be a lot of “Mann-spraining” be done from the massive population of “sticks” at peak temperature cycles. The paleo record is rounded off, all noisy “sticks” are absorbed in the peaks.

Sorry, there must have been a Freudian slip: “Mann-splaining”, not “spraining”.

Thank-you.

Just a very minor point. You say: “Yet the IPCC repeatedly refers to its high end projections as “Business-as-Usual” and the low end as policy-constrained.”

It isn’t quite that bad – AR5 WG1 doesn’t use the term as far as I can see, and Riahi et al originally presented it as “RCP 8.5—A scenario of comparatively high greenhouse gas emissions” and only uses “business as usual” qualified by “relatively conservative” and “high-emission”.

HAS, I researched how the IPCC named RCP8.5 their “Business as Usual” (BAU) case. As you point out, they didn’t use the term in AR5, instead they began to use the term during press conferences and presentation of AR5 contents in late 2013 and early 2014. The BAU term became the standard they used in interviews and discussions, this was picked up by the media, and almost immediately we began to see papers referring to RCP8.5 as BAU.

This extended to training material used in universities, and to position statements written by scientific organizations in numerous countries. By 2015 almost all climate change papers referred to RCP8.5 as BAU, and this was also picked up by US government agencies during the Obama administration.

The period 2013 to 2018 saw a significant number of comments, articles and papers explaining RCP8.5 wasn’t BAU. I myself saturated the comments sections in newspapers and blogs with repetitive remarks about this error. In my case I came to this from tendencies we observed: fossil fuel resources were increasingly more difficult to extract, competing technology prices were dropping, and the assumptions in RCP8.5 didn’t make sense (I’m not going to get into it here, but do remember the RCPs were scenarios prepared to meet an arbitrary IPCC request: they wanted four cases with four forcings, and the team preparing RCP8.5 had to include absurd system behavior to reach the 8.5 watts per m2).

We can’t blame the RCP8.5 authors because they were asked to deliver the target forcing. But I think a case can be made to accuse IPCC principals of scientific fraud for: 1. using the BAU term for RCP8.5 on a consistent basis, and 2. Failing to inform the scientific community and decision makers that RCP8.5 wasn’t really BAU.

I think we can also consider the ongoing (but increasingly feeble) defense of RCP8.5 as BAU without a corresponding correction by the IPCC as a sign that it can be considered a political organization with clear political goals, no regard for the quality of its products or their adequate use by the scientific community, and lacking in ethics to such an extent that it deserves to be shut down and replaced by a new organization outside of the UN structure.

The problems we see with the RCP8.5 use are a symptom of a very serious disease which has pervaded this field for decades, a disease which is now entering the realm of criminal behavior, because faked science is used to justify trillions of dollars in spending which are going to bring hefty profits to certain actors, and give geopolitical advantages to nations such as China and Russia (because they aren’t about to commit economic suicide cutting CO2 emissions to zero).

Criminal behavior can also be seen in the use of exaggerated alarmism to scare children, and put teenagers on the street asking for political changes (which conveniently demand the end of capitalism and parrot Neomarxist lines about climate justice, the white patriarchate, and etc). Scaring and traumatizing children using false information is a criminal act, and such abuse ought to stop, but we already know that radical political movements will stop at nothing, and unfortunately the climate change problem is now a weapon used by Neomarxist radicals as a means to take over.

And when we combine the economic harm they will cause with their repressive and social engineering methods, we may be about to see the West fall in the hands of a political faction which may eventually rival Stalin, Mao, Castro, and Chavez when it comes to its innate evil nature.

Ross M: ” Using a more valid regression model helps explain why their findings aren’t inconsistent with Lewis and Curry (2018) which did show models to be inconsistent with observations.”

I cannot understand this.

Never mind, I think I got it. If you use a more valid regression model, then Zeke’s findings are more parallel to L&C’s. Is that it?

There are assumptions that are insupportable. That models have unique deterministic solutions (McWilliams 2007, Slingo and Palmer 2012). That “the outlines and dimension of anthropogenic climate change are understood and that incremental improvement to and application of the tools used to establish this outline are sufficient to provide society with the scientific basis for dealing with climate change” (Palmer and Stevens 2019). That surface temperature is a measure of surface energy flux (Pielke 2004). That internal variability is short term, self cancelling noise superimposed on a forced signal (Koutsoyiannis 2013).

The behavior of long term climate series is defined by the Hurst law. A value for the Hurst exponent (H) of 0.5 is the statistical expectation of reversion to the mean. The value of 0.72 calculated by Hurst from 849 years of Nile River flow records reveals an underlying tendency for climate data to cluster around a mean and a variance for a period and then shift to another state. This is best understood in modern terms of dynamical complexity – or given the nature of the Earth system – patterns of spatio-temporal chaos in a turbulent flow field.

R(n)/S(n) ∝ n^H

where R(n) is referred to as the adjusted range of cumulative departures of a time series of n values (Table 1), S(n) is the standard deviation and R(n)/S(n) is the rescaled range of cumulative departures.
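
For readers who want to see the arithmetic, here is a minimal sketch of the rescaled-range calculation defined above, with H estimated as the log-log slope of R(n)/S(n) against n. The function names, the window sizes and the synthetic white-noise input are all assumptions made for illustration; this is not Hurst's data or anyone's published code. White noise should give a slope in the neighborhood of 0.5, although the classical R/S estimate is known to run somewhat high in short windows.

```python
import math
import random
import statistics


def rescaled_range(values):
    """Return R(n)/S(n) for one window of n values."""
    mean = statistics.fmean(values)
    # Cumulative departures from the window mean.
    cumulative, total = [], 0.0
    for v in values:
        total += v - mean
        cumulative.append(total)
    adjusted_range = max(cumulative) - min(cumulative)  # R(n)
    std_dev = statistics.pstdev(values)                 # S(n)
    return adjusted_range / std_dev


def hurst_exponent(series, window_sizes):
    """Estimate H as the least-squares slope of log(R/S) against log(n)."""
    log_n, log_rs = [], []
    for n in window_sizes:
        # Average R/S over non-overlapping windows of length n.
        windows = [series[i:i + n] for i in range(0, len(series) - n + 1, n)]
        mean_rs = statistics.fmean([rescaled_range(w) for w in windows])
        log_n.append(math.log(n))
        log_rs.append(math.log(mean_rs))
    x_bar, y_bar = statistics.fmean(log_n), statistics.fmean(log_rs)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(log_n, log_rs))
    denominator = sum((x - x_bar) ** 2 for x in log_n)
    return numerator / denominator


# Illustrative input only: seeded Gaussian white noise, not climate data.
rng = random.Random(0)
white_noise = [rng.gauss(0.0, 1.0) for _ in range(4096)]
h_estimate = hurst_exponent(white_noise, [16, 32, 64, 128, 256, 512])
print(round(h_estimate, 2))
```

Running the same function on a real record such as annual river flows would be the analogous test, subject to the usual caveats about record length and the choice of window sizes.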

e.g. https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2016WR020078

It leads to a perspective on the future evolution of climate in which change and uncertainty are essential parts (Koutsoyiannis, 2013). What is far more fruitful at this time than the simple math and simpler assumptions of TCR and ECS calculations – would be a creative metaphysical synthesis such as is essential to the fundamental advancement of science (Baker 2017).

The emission pathways have – btw – been superseded by new ‘Shared Socioeconomic Pathways’. SSP5 is I suspect intended as a cautionary tale – but it seems to me to be our inevitable future. Whatever the sensitivity.

“SSP5 Fossil-fueled Development – Taking the Highway (High challenges to mitigation, low challenges to adaptation) This world places increasing faith in competitive markets, innovation and participatory societies to produce rapid technological progress and development of human capital as the path to sustainable development. Global markets are increasingly integrated. There are also strong investments in health, education, and institutions to enhance human and social capital. At the same time, the push for economic and social development is coupled with the exploitation of abundant fossil fuel resources and the adoption of resource and energy intensive lifestyles around the world. All these factors lead to rapid growth of the global economy, while global population peaks and declines in the 21st century. Local environmental problems like air pollution are successfully managed. There is faith in the ability to effectively manage social and ecological systems, including by geo-engineering if necessary.” https://www.sciencedirect.com/science/article/pii/S0959378016300681

I suggested the other day that we geoengineer the hell out of the place. Some of us have been doing just that with great success for forty years.

https://judithcurry.com/2020/01/10/climate-sensitivity-in-light-of-the-latest-energy-imbalance-evidence/#comment-907471

“The behavior of long term climate series is defined by the Hurst law.” An interesting proposal – and testable. What exactly is a “climate series”? Can we describe climate by a single number? Would it be local or global?

Hurst studied the Nile River for sixty years and published his seminal analysis in 1951. A series is a collection of observations made over time – aka time series. The answer is 42 and it is universal. Now – what is the question? Do you mean 0.72? It is calculated on Nile River data. The value itself is not critical – the departure from the value of 0.5 expected in Gaussian distributions is the point. The method has been applied to many series from economics to nerve impulses. There have been many attempts at explanations.

Here’s an explanation in terms of regimes.

https://onlinelibrary.wiley.com/doi/pdf/10.1111/j.1538-4632.1997.tb00947.x

Julia Slingo and Tim Palmer discuss it in terms of a ‘fractionally dimensioned state space’. It’s just words.

“Hints that the climate system could change abruptly came unexpectedly from fields far from traditional climatology. In the late 1950s, a group in Chicago carried out tabletop “dishpan” experiments using a rotating fluid to simulate the circulation of the atmosphere. They found that a circulation pattern could flip between distinct modes.” https://history.aip.org/climate/rapid.htm

I am old enough to remember physical models in a fluid dynamics lab. I like the physicality of it.

“You can see spatio-temporal chaos if you look at a fast mountain river. There will be vortexes of different sizes at different places at different times. But if you observe patiently, you will notice that there are places where there almost always are vortexes and they almost always have similar sizes – these are the quasi standing waves of the spatio-temporal chaos governing the river. If you perturb the flow, many quasi standing waves may disappear. Or very few. It depends.” https://judithcurry.com/2011/02/10/spatio-temporal-chaos/

https://watertechbyrie.files.wordpress.com/2017/08/river-in-mountains.jpg

That’s the explanation I favor.

Yes, rapid climate changes have occurred in pre history, so without anthropogenic CO2, CH4 etc. Is present human society unable to react to rapid changes? How fast? The 2004 Indian Ocean tsunami was too fast /hours: in 2020 the number of deaths would be less because of new undersea warning systems.

Why did rapid climate change(s) occur before?

What’s evidence those causes are behind modern warming?

Why did rapid climate change(s) occur before?

What’s evidence those causes are behind modern warming?

In warm times it snows more and ice piles up until it advances. When the ice advances it causes rapid cooling.

In cold times it snows less and ice depletes until it retreats.

When the ice retreats it allows rapid warming.

Simple stuff, that is recorded in ice core data and history.

We just came out of the Little Ice Age. They claim the warming caused the ice retreat. The ice retreat caused the warming.

Your ice chest warms when the ice is depleted, climate works the same way. Then the oceans thaw and more evaporation and snowfall rebuilds the ice. If you watch the nightly news, the ice is being rebuilt as we watch. An open Arctic Ocean is necessary to keep Greenland supplied with ice. When the oceans are cold enough, the Arctic freezes and shuts down the ice machine. When the oceans are warm and thawed, the Arctic turns on the ice machine.

Consensus science builds ice in cold times and removes ice in warm times. They never explain how they get evaporation and snowfall from frozen polar oceans.

I focus on change and uncertainty in the context of building prosperity and resilience along the lines of SSP5. But change can be very rapid and involve biology and hydrology.

Robert I Ellison: The behavior of long term climate series is defined by the Hurst law. A value for the Hurst exponent (H) of 0.5 is the statistical expectation of reversion to the mean. The value of 0.72 calculated by Hurst from 849 years of Nile River flow records reveals an underlying tendency for climate data to cluster around a mean and a variance for a period and then shift to another state.

A process requires more than one summary statistic (or its theoretical large sample limit) to “define” its behavior. For a process that shifts from state to state, you would want at minimum the means and variances and autocorrelations within states, and the frequencies of shifting (the distributions of the times between states). With more than 2 states, you would want the state-to-state transition probability matrix as well, and you’d want to know if state-to-state transitions were Markovian.
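
To make that list of ingredients concrete, here is a minimal sketch of a two-state Markov regime-switching series, along with a count-based estimate of its transition matrix. Every number in it (state means, standard deviations, transition probabilities, series length) is a hypothetical value chosen for illustration, and the sketch assumes the state at each step is known, which would not be true of observed climate data.

```python
import random

# Hypothetical two-state example; every number here is illustrative only.
STATE_MEANS = [0.0, 1.0]
STATE_STDS = [0.5, 0.5]
# TRANSITION[i][j] = probability of moving from state i to state j in one step.
TRANSITION = [[0.98, 0.02],
              [0.05, 0.95]]


def simulate(n_steps, rng):
    """Simulate a Markov regime-switching series; return (states, values)."""
    state, states, values = 0, [], []
    for _ in range(n_steps):
        states.append(state)
        values.append(rng.gauss(STATE_MEANS[state], STATE_STDS[state]))
        # Draw the next state from the current state's transition row.
        state = 0 if rng.random() < TRANSITION[state][0] else 1
    return states, values


def estimate_transition(states, n_states=2):
    """Estimate the transition matrix by counting observed state changes."""
    counts = [[0] * n_states for _ in range(n_states)]
    for a, b in zip(states, states[1:]):
        counts[a][b] += 1
    return [[c / max(sum(row), 1) for c in row] for row in counts]


states, values = simulate(20000, random.Random(1))
estimated = estimate_transition(states)
print(estimated)
```

Even this toy process needs two means, two variances and a 2x2 matrix to specify it, which is the point being made: a single exponent summarizes one aspect of such a series without determining the rest.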

You need to understand that it is about data analysis and not theory or modelling.

The Hurst exponent is a measure of the departure of the series from an idealized random Gaussian process.

“We are then in one of those situations, so salutary for theoreticians, in which empirical discoveries stubbornly refuse to accord with theory. All of the researches described above lead to the conclusion that in the long run, E(Rn) should increase like n^0.5 , whereas Hurst’s extraordinarily well documented empirical law shows an increase like n^H where H is about 0.7. We are forced to the conclusion that either the theorists interpretation of their own work is inadequate or their theories are falsely based: possibly both conclusions apply.” Lloyd 1967

https://watertechbyrie.files.wordpress.com/2020/01/structured-random.png

https://watertechbyrie.files.wordpress.com/2018/05/nile-e1527722953992.jpg

We know what the data did in the past. That’s a given. This is the spatial pattern of a relatively new temperature based Interdecadal Pacific Oscillation index.

https://watertechbyrie.files.wordpress.com/2018/05/nile-e1527722953992.jpg

http://joellegergis.com/wp-content/uploads/2007/01/Henley_ClimDyn_2015.pdf

It can be plotted.

https://www.esrl.noaa.gov/psd/tmp/gcos_wgsp/tsgcos.corr.124.170.65.87.16.21.18.3.png

It can be mapped.

https://watertechbyrie.files.wordpress.com/2019/02/tpi-sst.png

What it can’t be is predicted or modelled. There is as I have said to you a difference between a hydrologist and a statistician.

“It also is only natural and perfectly in order for the mathematician to concentrate on the formal geometric structure of a hydrologic series, to treat it as a series of numbers and view it in the context of a set of mathematically consistent assumptions unbiased by the peculiarities of physical causation. It is, however, disturbing if the hydrologist adopts the mathematician’s attitude and fails to see that his mission is to view the series in its physical context, to seek explanations of its peculiarities in the underlying physical mechanism rather than to postulate the physical mechanism from a mathematical description of these peculiarities.” Klemes 1974

This is the spatial basis of the TPI IPO index. Shifts in Pacific Ocean circulation cause megadroughts and megafloods across the planet and modulate the global energy budget.

https://watertechbyrie.files.wordpress.com/2019/04/maptpiipo.sm_.png

https://www.mdpi.com/climate/climate-06-00062/article_deploy/html/images/climate-06-00062-g002-550.jpg

groan… drought in Australia…

https://watertechbyrie.files.wordpress.com/2014/06/vance2012-antartica-law-dome-ice-core-salt-content-e1540939103404.jpg

Robert I Ellison: You need to understand that it is about data analysis and not theory or modelling.

The Hurst exponent is a measure of the departure of the series from an idealized random Gaussian process.

Either way, in modeling or in data series, one statistic is not sufficient to define a series.

You need to understand that you wrote a false statement.

“By ‘Noah Effect’ we designate the observation that extreme precipitation can be very extreme indeed, and by ‘Joseph Effect’ the finding that a long period of unusual (high or low) precipitation can be extremely long. Current models of statistical hydrology cannot account for either effect and must be superseded.” Benoit B. Mandelbrot and James R. Wallis, 1968, Noah, Joseph, and Operational Hydrology

You need to understand that there is a big picture that shadowing me with trivial nitpicking doesn’t begin to encompass.

Robert I Ellison: You need to understand that there is a big picture that shadowing me with trivial nitpicking doesn’t begin to encompass.

It is not trivial to point out that the following sentence is false: “The behavior of long term climate series is defined by the Hurst law.”

There might be an underlying tendency for climate data to cluster around a mean and a variance for a period and then shift to another state, but such a system cannot be defined by a Hurst coefficient of 0.72.

“It is, however, disturbing if the hydrologist adopts the mathematician’s attitude and fails to see that his mission is to view the series in its physical context, to seek explanations of its peculiarities in the underlying physical mechanism rather than to postulate the physical mechanism from a mathematical description of these peculiarities.”

You need to understand that there is a big picture that shadowing me with trivial nitpicking doesn’t begin to encompass.

There is no good justification for writing and then defending actual mistakes in the mathematical presentations. The Hurst coefficient discredits one particularly simple and naive mathematical model of a time series, and that is all it does. The Hurst exponent is a measure of the departure of the series from an idealized random Gaussian process. Full Stop.

The Hurst law was a 1951 revolution in the understanding of geophysical time series. It has been utilized across many fields of study. Implicit in the rescaling method is standard deviation across the sample and variance of a sub-sample. As usual you miss the point in favor of generic – and petty – complaints. You shadow me with nonsense like this until you get especially rude and your comments disappear. Surely a pointless exercise.

https://www.tandfonline.com/na101/home/literatum/publisher/tandf/journals/content/thsj20/2016/thsj20.v061.i09/02626667.2015.1125998/20160613/images/medium/thsj_a_1125998_f0001_oc.jpg

https://www.tandfonline.com/doi/full/10.1080/02626667.2015.1125998

And I have cited that and other references above.

Robert I Ellison: And I have cited that and other references above.

Perhaps you are merely having trouble with the concept of “define”. Once you infer from the Hurst coefficient that a complex model is needed, the Hurst coefficient provides little information toward defining a model. That might require, among other things, computing the spectral density function and the partial autocorrelation function.
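For readers who have not computed these two quantities, here is a minimal Python sketch. It is an illustration only: the function names (`autocorr`, `pacf`, `periodogram`), the use of a raw periodogram as the spectral density estimate, the Durbin-Levinson recursion for the partial autocorrelations, and the synthetic AR(1) test series with coefficient 0.7 are all assumptions made for the example, not anything used in the papers cited in this thread.

```python
import numpy as np

def autocorr(x, max_lag):
    """Sample autocorrelations r_0..r_max_lag (biased estimator)."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    denom = np.dot(x, x)
    return np.array([np.dot(x[:len(x) - k], x[k:]) / denom
                     for k in range(max_lag + 1)])

def pacf(x, max_lag):
    """Partial autocorrelations at lags 1..max_lag (Durbin-Levinson)."""
    r = autocorr(x, max_lag)
    phi = np.zeros((max_lag + 1, max_lag + 1))
    out = np.zeros(max_lag)
    for k in range(1, max_lag + 1):
        prev = phi[k - 1, 1:k]                    # phi_{k-1,1..k-1}
        num = r[k] - np.dot(prev, r[1:k][::-1])   # r_k - sum phi_{k-1,j} r_{k-j}
        den = 1.0 - np.dot(prev, r[1:k])          # 1 - sum phi_{k-1,j} r_j
        phi[k, k] = num / den
        phi[k, 1:k] = prev - phi[k, k] * prev[::-1]
        out[k - 1] = phi[k, k]
    return out

def periodogram(x):
    """Raw periodogram: a crude, unsmoothed spectral density estimate."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0)             # cycles per time step
    power = np.abs(np.fft.rfft(x)) ** 2 / n
    return freqs, power

# Hypothetical test series: AR(1) with coefficient 0.7 (illustrative only).
rng = np.random.default_rng(1)
e = rng.standard_normal(5000)
ar1 = np.zeros_like(e)
for t in range(1, len(e)):
    ar1[t] = 0.7 * ar1[t - 1] + e[t]

print(pacf(ar1, 5))              # lag 1 should be near 0.7, later lags near 0
freqs, power = periodogram(ar1)  # power is concentrated at low frequencies
```

For an AR(1) series the partial autocorrelation cuts off after lag 1 and the periodogram is weighted toward low frequencies. A long-memory series would instead show slowly decaying structure in both, which is the kind of information a single exponent does not carry.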

The underlying ‘structured random’ nature of geophysical time series is revealed – for which there is still yet no statistical ‘model’. We have instead data analysis in the natural sciences.

Robert I Ellison: The underlying ‘structured random’ nature of geophysical time series is revealed

Your earlier comment is close to the mark: The Hurst exponent is a measure of the departure of the series from an idealized random Gaussian process. That is a tiny amount of “The underlying ‘structured random’ nature of geophysical time series.” Certainly not a “definition”.

“The empirical investigation of several geophysical time series indicates that they are composed of segments representing different natural regimes, or periods when events are strongly autocorrelated. Using a data transformation method developed by Hurst, these regimes are differentiated by rescaling the time series and examining the resulting transformed trace for inflections. As regime signals are not completely mixed and have rather long run lengths, Hurst rescaling produces a clustering of extremes of the same sign and elevates the Hurst exponent to values greater than 0.5. These regimes have a characteristic distribution, as defined by the mean and standard deviation, which differ from the statistical characteristics of the complete record.” https://wattsupwiththat.files.wordpress.com/2012/07/sio_hurstrescale-1.pdf

Yeah right.

Robert I Ellison: The behavior of long term climate series is defined by the Hurst law.

The Hurst “law” has morphed into a procedure:

Robert I Ellison: “Using a data transformation method developed by Hurst, these regimes are differentiated by rescaling the time series and examining the resulting transformed trace for inflections. As regime signals are not completely mixed and have rather long run lengths, Hurst rescaling produces a clustering of extremes of the same sign and elevates the Hurst exponent to values greater than 0.5. These regimes have a characteristic distribution, as defined by the mean and standard deviation, which differ from the statistical characteristics of the complete record.”

Or has it?

Now the regimes (plural) have “a” characteristic distribution as “defined by” “the mean” and standard deviation. What, no autocorrelation?

Robert I Ellison: Yeah right.

In my youth this was called a “snow job”: following up a false statement with a bunch of thematically (associatively?) related stuff that does not show the false statement to have in fact been a true statement.

“For some 900 annual time series comprising streamflow and precipitation records, stream and lake levels, Hurst established the following relationship, referred to as Hurst’s Law:

https://www.tandfonline.com/na101/home/literatum/publisher/tandf/journals/content/thsj20/2016/thsj20.v061.i09/02626667.2015.1125998/20160613/images/thsj_a_1125998_m0001.gif (1)

where Rn is referred to as the adjusted range of cumulative departures of a time series of n values (Table 1), Sn is the standard deviation, Rn/Sn is referred to as the rescaled range of cumulative departures, and H is a parameter, henceforth referred to as the Hurst coefficient.”
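As a rough illustration of the quantities named in this passage, the Python sketch below computes the rescaled range Rn/Sn and estimates H from the slope of log(Rn/Sn) against log(n). It assumes the relationship has a power-law form in which Rn/Sn grows like n^H (the equation itself appears here only as an image link). The function names `rescaled_range` and `hurst_rs`, the block-doubling scheme, and the synthetic white-noise input are illustrative choices, not Hurst's own procedure or that of the quoted authors.

```python
import numpy as np

def rescaled_range(x):
    """Rn/Sn: adjusted range of cumulative departures from the mean,
    divided by the (population) standard deviation of the series."""
    x = np.asarray(x, dtype=float)
    departures = np.cumsum(x - x.mean())
    adjusted_range = departures.max() - departures.min()
    return adjusted_range / x.std()

def hurst_rs(x, min_block=8):
    """Estimate H as the least-squares slope of log(Rn/Sn) on log(n),
    averaging Rn/Sn over non-overlapping blocks of length n."""
    x = np.asarray(x, dtype=float)
    sizes, values = [], []
    n = min_block
    while n <= len(x) // 2:
        blocks = [x[i:i + n] for i in range(0, len(x) - n + 1, n)]
        rs = [rescaled_range(b) for b in blocks if b.std() > 0]
        if rs:
            sizes.append(n)
            values.append(np.mean(rs))
        n *= 2                                   # block lengths 8, 16, 32, ...
    slope, _ = np.polyfit(np.log(sizes), np.log(values), 1)
    return float(slope)

# Synthetic input (an assumption for the example): independent Gaussian noise.
rng = np.random.default_rng(0)
white = rng.standard_normal(4096)
print(hurst_rs(white))
```

For independent Gaussian noise the estimate should land in the neighbourhood of 0.5, with small-sample bias tending to push it somewhat higher. That is the baseline against which values around 0.7 are being compared in this thread.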

I have dealt with that. And what I call this is perpetual dissembling.

Robert I Ellison: “For some 900 annual time series comprising streamflow and precipitation records, stream and lake levels, Hurst

…

henceforth referred to as the Hurst coefficient.”

So you can compute a statistic from time series data. I’m happy for you; I have never disputed that. That statistic does not define the time series.

The value of 0.72 calculated by Hurst from 849 years of Nile River flow records reveals an underlying tendency for climate data to cluster around a mean and a variance for a period and then shift to another state.

[Revealing] that underlying tendency required more than the computation of the Hurst coefficient, namely Hurst’s other data analytic procedures that you referred to.

You object when I point out your misuse of words, but you thought it was a big deal when I misspelled Ghil as Gihl. We all make mistakes.

Still rattling on about what you imagine was a misuse of the word define. Give it a rest.

“What are the main characteristics and implications of the Hurst-Kolmogorov stochastic dynamics (also known as the Hurst phenomenon, Joseph effect, scaling behaviour, long-term persistence, multi-scale fluctuation, long-range dependence, long memory)?”

It revealed a big picture you don’t seem to get.

This was an extremely interesting and informative discussion. RE (Robert Ellison), what you posted was extremely interesting and the broad thrust of it isn’t being challenged. But I agree with MM (Matthew Marler) that precision in how it is articulated IS important. It’s not that MM doesn’t understand the main point and is “dissembling” or “nitpicking”; it’s that someone like me, lurking and absorbing, may take away imprecise wording as a fact, like Chinese whispers.

It’s how we conceptually approach certain words: a word means one thing to a person from one discipline and something different to someone from another, hydrologist versus statistician. Words really, really matter. Also, when RE was challenged on the point, the subsequent posts were really interesting (particularly the graphic), giving further detail that illuminated the original post. Minus the barbs – which weren’t necessary.

This looks like a communication problem – we often argue more about the meanings of words than the ideas we are trying to convey with them.

Anyway, I thank you both for an interesting discussion.

“Overall, the “new” HK approach presented herein is as old as Kolmogorov’s (1940) and Hurst’s (1951) expositions. It is stationary (not nonstationary) and demonstrates how stationarity can coexist with change at all time scales. It is linear (not nonlinear) thus emphasizing the fact that stochastic dynamics need not be nonlinear to produce realistic trajectories (while, in contrast, trajectories from linear deterministic dynamics are not representative of the evolution of complex natural systems). The HK approach is simple, parsimonious, and inexpensive (not complicated, inflationary and expensive) and is transparent (not misleading) because it does not hide uncertainty and it does not pretend to predict the distant future deterministically.” https://www.itia.ntua.gr/en/getfile/1001/1/documents/2010JAWRAHurstKolmogorovDynamicsPP.pdf

I don’t resile at all from describing as defining the pioneering works of Hurst on natural processes and of Kolmogorov on turbulence. These defined departures of natural systems and turbulent flow from expectations of random Gaussian noise – an idea of noise that is still promulgated – but that are best understood in modern terms of dynamical complexity. How would anyone other than a pettifogging statistician express it?

Robert I Ellison: It revealed a big picture you don’t seem to get.

LOL! The Hurst coefficient reveals as much of the big picture as a tree does about the forest it dwells in. For the big picture you have to step back from a single statistic.

I don’t resile at all from describing as defining the pioneering works of Hurst on natural processes and of Kolmogorov on turbulence.

You meant to write something along the lines of: “The HK approach can, with much work and attention to detail and many statistics, be used to characterize the climate time series.” Instead you made a false claim about a single statistic.

“Overall, the “new” HK approach presented herein is as old as Kolmogorov’s (1940) and Hurst’s (1951) expositions. It is stationary (not nonstationary) and demonstrates how stationarity can coexist with change at all time scales. It is linear (not nonlinear) thus emphasizing the fact that stochastic dynamics need not be nonlinear to produce realistic trajectories (while, in contrast, trajectories from linear deterministic dynamics are not representative of the evolution of complex natural systems).”

I am glad to see that you are back to stochastic dynamics, away from asserting that all climate processes are deterministic. How to tell whether data should be represented by a stationary or nonstationary stochastic process is another problem. What process in climate science has been shown to be stationary? Even in the presence of “abrupt” climate changes?

Koutsoyiannis was exploring ideas of stationarity and nonstationarity with reference to geophysical time series. An equivalent idea when climate series are viewed with a God’s eye may be the ergodic theory of dynamical systems, within which are seen shifts and Hurst-Kolmogorov regimes. Koutsoyiannis has as well – as a practical hydrologist – defined deterministic as predictable and random as not. But everything in the world obeys the laws of classical physics – thus everything is deterministic in principle if not soluble in practice. Tim Palmer and Julia Slingo put it in terms of Lorenzian dynamical complexity.

“The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change.”

Edward Lorenz in 1969 expressed the problem in terms of the immense computational expense of solving the laws of motion – embodied in the Navier-Stokes equation – in atmosphere and oceans.

“Perhaps we can visualize the day when all of the relevant physical principles will be perfectly known. It may then still not be possible to express these principles as mathematical equations which can be solved by digital computers. We may believe, for example, that the motion of the unsaturated portion of the atmosphere is governed by the Navier–Stokes equations, but to use these equations properly we should have to describe each turbulent eddy—a task far beyond the capacity of the largest computer. We must therefore express the pertinent statistical properties of turbulent eddies as functions of the larger-scale motions. We do not yet know how to do this, nor have we proven that the desired functions exist.”

So who to believe? Koutsoyiannis with his sophisticated but practical approach to geophysical time series. Where words matter less than the ability to design and fill dams. Or Matthew whose major occupation at CE is shadowing me with pettifogging criticism.

You meant to write something along the lines of: “The HK approach can, with much work and attention to detail and many statistics, be used to characterize the climate time series.” Instead you made a false claim about a single statistic.