by Ross McKitrick and John Christy

Note: this is a revised version to correct the statement about CFCs and methane in Scenario B.

How accurate were James Hansen’s 1988 testimony and subsequent JGR article forecasts of global warming? According to a laudatory article by AP’s Seth Borenstein, they “pretty much” came true, with other scientists claiming their accuracy was “astounding” and “incredible.” Pat Michaels and Ryan Maue in the Wall Street Journal, and Calvin Beisner in the Daily Caller, disputed this.

There are two problems with the debate as it has played out. First, using 2017 as the comparison date is misleading because mismatches between observed and assumed El Nino and volcanic events artificially pinched the observations and scenarios together at the end of the sample. What really matters is the trend over the forecast interval, and this is where the problems become visible. Second, applying a post-hoc bias correction to the forcing ignores the fact that converting GHG increases into forcing is an essential part of the modeling. If a correction were needed for the CO2 concentration forecast, that would be fair, but this aspect of the forecast turned out to be quite close to observations.

Let’s go through it all carefully, beginning with the CO2 forecasts. Hansen didn’t graph his CO2 concentration projections, but he described the algorithm behind them in his Appendix B. He followed observed CO2 levels from 1958 to 1981 and extrapolated from there. That means his forecast interval begins in 1982, not 1988, although he included observed stratospheric aerosols up to 1985.

From his extrapolation formulas we can compute that his projected 2017 CO2 concentrations were: Scenario A 410 ppm; Scenario B 403 ppm; and Scenario C 368 ppm. (The latter value is confirmed in the text of Appendix B.) The Mauna Loa record for 2017 was 407 ppm, halfway between Scenarios A and B.

Note that Scenarios A and B also differ in their treatment of non-CO2 forcing: Scenario A contains all non-CO2 trace gas effects, while Scenario B contains only CFCs and methane, both of which were overestimated. Consequently, there is no justification for a post-hoc dialling down of the CO2 levels; nor should we dial down the associated forcing, since that is part of the model computation. To the extent the warming trend mismatch is attributed entirely to the overestimated levels of CFCs and methane, that would imply that these gases are very influential in the model.

Now note that Hansen did not include any effects due to El Nino events. In 2015 and 2016 there was a very strong El Nino that pushed global average temperatures up by about half a degree C, a change that is now receding as the oceans cool. Had Hansen included this El Nino spike in his scenarios, he would have overestimated 2017 temperatures by a wide margin in Scenarios A and B.

In Scenarios B and C, Hansen added an Agung-strength volcanic eruption in 2015, which caused temperatures to drop well below trend, with the effect persisting into 2017. This was not a forecast; it was an arbitrary guess, and no such eruption occurred.

Thus, to make an apples-to-apples comparison, we should remove the 2015 volcanic cooling from Scenarios B and C and add the 2015/16 El Nino warming to all three Scenarios. If we do that, there would be a large mismatch as of 2017 in both A and B.

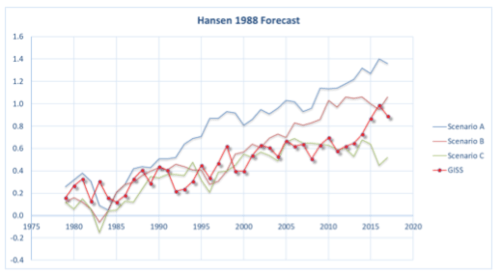

The main forecast in Hansen’s paper was a trend, not a particular temperature level. To assess his forecasts properly we need to compare his predicted trends against subsequent observations. To do this we digitized the annual data from his Figure 3. We focus on the period from 1982 to 2017 which covers the entire CO2 forecast interval.

The 1982 to 2017 warming trends in Hansen’s forecasts, in degrees C per decade, were:

- Scenario A: 0.34 +/- 0.08,

- Scenario B: 0.29 +/- 0.06, and

- Scenario C: 0.18 +/- 0.11.
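As a minimal sketch of how a warming trend in °C per decade can be obtained from annual values (such as those digitized from Figure 3), the Python below fits an ordinary least-squares line and scales the slope from per-year to per-decade. The function name and the synthetic test series are illustrative assumptions; the post does not publish the authors' code.

```python
def ols_trend_per_decade(years, temps):
    """OLS slope of temps regressed on years, scaled to degrees C per decade."""
    n = len(years)
    if n != len(temps) or n < 3:
        raise ValueError("need two matching series with at least 3 points")
    x_bar = sum(years) / n
    y_bar = sum(temps) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, temps))
    sxx = sum((x - x_bar) ** 2 for x in years)
    return 10.0 * sxy / sxx  # degrees C per year -> per decade
```

For example, a synthetic series rising 0.02 °C per year over 1982 to 2017 returns a trend of 0.2 °C per decade.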

We compared these trends against NASA’s GISTEMP series (the Goddard Institute for Space Studies, or GISS, record) and the UAH/RSS mean MSU series from weather satellites for the lower troposphere.

- GISTEMP: 0.19 +/- 0.04 C/decade

- MSU: 0.17 +/- 0.05 C/decade.

(The confidence intervals are autocorrelation-robust using the Vogelsang-Franses method.)
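The post does not show how the autocorrelation-robust intervals were computed. As a simpler and clearly different stand-in (this is Newey-West, not the Vogelsang-Franses method), the sketch below pairs the OLS trend with a heteroskedasticity-and-autocorrelation-consistent (HAC) standard error using Bartlett weights. The VF approach differs in its bandwidth choice and uses nonstandard critical values, so this sketch will not reproduce the intervals quoted above; the function name and the default lag rule are assumptions.

```python
import math

def trend_with_hac_se(years, temps, max_lag=None):
    """OLS trend and a Newey-West (Bartlett kernel) standard error, both per decade.

    Illustrative stand-in only: NOT the Vogelsang-Franses procedure.
    """
    n = len(years)
    x_bar = sum(years) / n
    y_bar = sum(temps) / n
    xc = [x - x_bar for x in years]  # centred time partials out the intercept
    sxx = sum(v * v for v in xc)
    slope = sum(a * (y - y_bar) for a, y in zip(xc, temps)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [y - (intercept + slope * x) for x, y in zip(years, temps)]
    # Score terms for the slope: centred time times residual.
    g = [a * u for a, u in zip(xc, resid)]
    if max_lag is None:
        # A common rule-of-thumb truncation lag (an assumption here).
        max_lag = int(math.floor(4 * (n / 100) ** (2 / 9)))
    long_run_var = sum(v * v for v in g)
    for lag in range(1, max_lag + 1):
        weight = 1 - lag / (max_lag + 1)  # Bartlett weights shrink longer lags
        cov = sum(g[t] * g[t - lag] for t in range(lag, n))
        long_run_var += 2 * weight * cov
    se = math.sqrt(max(long_run_var, 0.0)) / sxx
    return 10 * slope, 10 * se
```

A rough 95% interval under a normal approximation would be `trend ± 1.96 * se`; with only about 36 annual points that approximation is likely too narrow, which is one motivation for methods like VF.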

So, the scenario that matches the observations most closely over the post-1980 interval is C. Hypothesis testing (using the VF method) shows that Scenarios A and B significantly over-predict the warming trend (even ignoring the El Nino and volcano effects). Emphasising the point here: Scenario A overstates CO2 and other greenhouse gas growth and rejects against the observations; Scenario B slightly understates CO2 growth, overstates methane and CFCs and zeroes-out other greenhouse gas growth, and it too significantly overstates the warming.

The trend in Scenario C does not reject against the observed data; in fact, the two are about equal. But this is the scenario that left out the rise of all greenhouse gases after 2000. The observed CO2 level reached 368 ppm in 1999 and continued rising thereafter, to 407 ppm in 2017. The Scenario C CO2 level reached 368 ppm in 2000 but remained fixed thereafter. Yet this scenario ended up with the warming trend most like the real world's.

How can this be? Here is one possibility. Suppose Hansen had offered a Scenario D, in which greenhouse gases continue to rise, but after the 1990s they have very little effect on the climate. That would play out similarly in his model to Scenario C, and it would match the data.

Climate modelers will object that this explanation doesn’t fit the theories about climate change. But those were the theories Hansen used, and they don’t fit the data. The bottom line is, climate science as encoded in the models is far from settled.

Ross McKitrick is a Professor of Economics at the University of Guelph.

John Christy is a Professor of Atmospheric Science at the University of Alabama in Huntsville.

There is a list of malapropisms with respect to global warming.

One is certainly worse than expected.

Since the time of the testimony, emissions were a little less than, but closest to scenario B.

Observed trends were a little more than, but closest to scenario C.

But both emissions and sensitivity have been better than expected.

This would appear to be good news – perhaps emissions aren’t a problem at all.

But I’m convinced now that in addition to the other lists of biases, climate advocates suffer from negativity bias, dismissing good news and clinging to narratives of catastrophe, even when observations are falsifying these ideas.

climate advocates suffer from negativity bias, dismissing good news and clinging to narratives of catastrophe, even when observations are falsifying these ideas.

They know they must scare us in order to tax and control us.

No one pays anyone to say everything is OK!

The CO2 emissions have been a little more than in emission scenario A. If atmospheric CO2 levels were lower than in that scenario, then Hansen overestimated the airborne fraction of the emissions. So humans emitted CO2 without any reduction (business as usual), yet atmospheric CO2 levels are lower than predicted by the BAU scenario, and temperatures are lower still, at the Scenario C level (extreme emission reductions).

I think Hansen somewhere wrote that he used a climate sensitivity of 4.1 °C/2xCO2.

“he used a climate sensitivity”

GCMs don’t use a climate sensitivity. You can use a GCM to diagnose ECS, and they did that. They got 4.2°C/doubling from GISS II.

Nitpicking semantics, are we, Nick? So he used a model with an implicit sensitivity of 4.2, which is pretty high by IPCC standards and probably due to the extreme early Budyko results which prompted Hansen to climate alarmism in the first place.

You have to look at the model Hansen used, and it was not a global model, and if you are comparing results and not specifying the GISS data source you are using, or specifying a global GISS, well then you are doing it wrong and getting the wrong result.

And not answering the question if his forecasts were accurate.

So you have to RTFR, and ATFQ!

Judy,

Why didn’t you post the GISS temperatures beyond the spike: http://www.woodfortrees.org/plot/gistemp/from:1988 ?

The spike was due solely to the El Nino.

If you plot beyond the spike, Hansen’s “C” is clearly the more correct outcome.

Hansen’s 2017 Scenario B prediction was not far off reality.

That does not matter.

What does matter is whether the warming was different from, and greater than, the warming into the Roman or Medieval warm periods, or into the warm periods before that during the last ten thousand years. Not even close!

The bottom line is, climate science as encoded in the models is far from settled.

Why not spend more time understanding natural climate change causes? Even so-called skeptics will not discuss anything other than CO2 sensitivity.

My thanks to Ross and John for cutting through the confusion and the misrepresentation to present a clear picture of the underlying facts.

w.

One often overlooked problem with Hansen’s temperature predictions based on emissions scenarios (and that is what they were, as anyone who reads the PDF of his testimony can see) is that he committed two important but fortuitously cancelling errors in his assumptions about CO2 concentrations: his Scenario A data assumed emissions would be lower than they turned out to be, but that the resulting CO2 concentrations would be higher (i.e. it assumed sinks would not behave the way they actually did, increasing their uptake). Had he known that “business as usual” emissions (the only independent variable, and the only one Congress can affect) would be higher, then, using otherwise the same assumptions, he would have predicted higher CO2 concentrations (instead of being quite close), and his temperature prediction would have been even farther off.

“his Scenario A data assumed emissions would be lower than they turned out to be”

His scenario A made no assumptions about tonnage emissions. He did not, in 1988, have access to any reliable data on them. That only became available after UNFCCC got governments to collect them. The scenarios were defined purely in terms of expected gas concentrations. We have the numbers.

Nick, as I’ve pointed out elsewhere, Congress has no ability to directly affect concentrations, only emissions. Therefore, three policy-based scenarios must be emissions scenarios (and are in fact directly labelled as such in the presentation).

This is pretty elementary logic — if the concentrations weren’t chosen to represent emissions scenarios, what on Earth else could they possibly have been chosen to represent, and what was the point of a hearing about them?

“Therefore, three policy-based scenarios must be emissions scenarios”

Well, they aren’t. But how can you usefully give a scenario about future emissions (tons) when you don’t know what they currently are?

So, emission scenarios made no assumptions about emissions. That makes his scenarios projections even worse.

The situation in 1988 was that we were clearly burning a lot of carbon. And CO2 was increasing in the air, and was accurately measured. That increase was taken as the measure of emissions. We still do that for methane.

Nick, after reading your post, I think you’re getting hung up on whether Hansen knew the tonnage of emissions in 1988 (something we still don’t really know with much reliability today), when really he was only concerned with their trend. We knew emissions were rapidly increasing in 1988, and the point of his testimony was to argue that freezing emissions at whatever level Y they currently were would save us from X degrees of warming.

Today we know (albeit with limited reliability) that CO2 emissions probably grew faster after 1988, but that carbon sinks also took in more CO2 than expected.

In case it helps, here is the EPA’s chart (with the above caveats): https://www.epa.gov/sites/production/files/styles/large/public/2017-04/fossil_fuels_1.png

Nick,

“And CO2 was increasing in the air, and was accurately measured. That increase was taken as the measure of emissions. We still do that for methane.”

Well, that’s a poor measure of emissions. You have to know or correctly estimate the AF.

“You have to know or correctly estimate the AF”

No, ppm is measured. If tonnage emission is measured, you can deduce AF (which is noisy). But none of this is relevant to Hansen’s calculation. CO2 ppm is the estimate that he needs as input for his program, and it was his scenario. If tonnages were available, he might have tried to convert them to ppm, and he might even have got that wrong. But they weren’t, and he didn’t.
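The airborne fraction (AF) debated in this exchange is the share of emitted carbon that remains in the atmosphere: the measured ppm rise converted to carbon mass, divided by the carbon emitted over the same period. A minimal sketch, assuming the commonly cited conversion of roughly 2.12 GtC per ppm of CO2; the function name and the example numbers are hypothetical, not figures from the thread.

```python
# Approximate carbon mass per ppm of atmospheric CO2 (commonly cited value; an assumption here).
GTC_PER_PPM = 2.12

def airborne_fraction(delta_ppm, emissions_gtc, gtc_per_ppm=GTC_PER_PPM):
    """Fraction of emitted carbon (GtC) that shows up as a rise in atmospheric CO2."""
    if emissions_gtc <= 0:
        raise ValueError("emissions must be positive")
    atmospheric_gain_gtc = delta_ppm * gtc_per_ppm
    return atmospheric_gain_gtc / emissions_gtc
```

With a hypothetical 2 ppm rise against 10 GtC emitted, this gives an AF of about 0.42. Because both the ppm rise and the tonnage carry uncertainty, year-to-year AF estimates are noisy, as noted above.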

In case the problem still isn’t clear, imagine for a moment we live in a version of history where Hansen was taken more seriously and global emissions had flatlined in 1988, but temperatures took the same path as in our version. Wouldn’t it be perfectly plausible for Hansen to claim the policy change had prevented all that Scenario A warming, and to scoff at skeptics’ suggestion that temperatures wouldn’t have risen more rapidly had emissions exceeded the pre-1988 trend, perhaps partly due to increased carbon sink uptake? This is where climate science runs into serious issues with the “falsifiable” pillar of the scientific method.

Nick, they are emission scenarios, even if he used only atmospheric levels. The AF is standing between emissions and concentrations. He implicitly assumed some AF. He overestimated it.

talldave2: “Therefore, three policy-based scenarios must be emissions scenarios”

NickStokes: Well, they aren’t. But how can you usefully give a scenario about future emissions (tons) when you don’t know what they currently are?

Well, …, they are. If, as you imply, they are not useful scenarios, it would have been nice if the scenario-wallahs* had alerted us to that some decades earlier.

* Scenarists? Scenario-artists? Scenario-mongers? Scenario-smiths?

Reading this essay and the comments, a similarly-themed essay at RealClimate, Willis Eschenbach’s essay at WUWT and some others, it seems to me that Hansen and the other scenarists would have done us all a great favor if they had pointed out the uncertainties and guesses and ambiguities back then; they could have said that Hansen’s work was Heuristic, and to be improved upon, but not taken as a severe alarm that urgent action was needed.

“If, as you imply, they are not useful scenarios”

They are entirely useful scenarios. They describe the evolution of trace gases in the air, which is what matters for both Hansen’s program and the real climate. As to how we might influence the concentrations of trace gases in the air, the answer is simple. CO2 is increasing because we are burning a lot of carbon. Burn less!

Why burn less? If what Hansen stated was going to happen is what actually happened, then there is (and was) no cause for alarm. The current rate of warming is not a problem even if it continues. The rate, not the amount, is the important thing. If the rate is slow enough, then the needed adjustments will simply be made through migration and adaptation. Even if Hansen was right about the rate, as you seem to think, he was still wrong about the need to do anything about it.

How about “scriptwriters?” They’re the ones who produce scenarios that are superficially credible to minds scientifically unequipped to deal with geophysical realities. Their work is only a step away from creating pure fiction. Small wonder that Hollywood loves them!

Nick, at any rate we are still left with the simple logic that either his scenarios were intended to represent his highly confident (but wrong) prediction as to how concentrations would respond to emissions, and temperatures to concentrations (as is clearly stated in his presentation), in which case he overestimated the strength of both relationships; or the scenarios were not based on emissions, in which case his presentation had no relevance to policy.

Fortuitous cancelling errors are the bane of any scalar prediction system, like your cousin who bought Apple stock because he thought people would eat more of them, and insists he is therefore a skilled investor.

I was thinking burn more, but also be careful not to be wasteful. No CCS nonsense.

“Here is one possibility. Suppose Hansen had offered a Scenario D, in which greenhouse gases continue to rise, but after the 1990s they have very little effect on the climate.”

He might also have been laughed out of the overheated presentation room where he shut off the AC and opened the windows, given that he’d just declared global warming due to human emissions was definitely a huge problem.

My primary complaint about Hansen’s 1988 testimony is that he referred to Scenario A as “business as usual”. To the contrary, his 1988 paper (Hansen et al., “Global climate changes as forecast by Goddard Institute for Space Studies three-dimensional model”) described scenario B as “perhaps the most plausible of the three cases.” Scenario A not only included exponential growth in non-CO2 gases such as CFCs, but also included a term for “hypothetical or crudely estimated trace gas trends” which equaled the (exponentially-growing) CFC term.

“he referred to Scenario A as “business as usual””

Too much is made of this. It doesn’t mean that he expects that is what will happen. It just means what will happen if nothing changes.

But anyway, the scenarios are not predictions, otherwise we would not have A, B and C. They are to cover the range of possibilities, and what matters for the prediction is the actual evolution (of gases) that happened.

“the scenarios are not predictions”. For the paper, I’d agree. The intent was to simulate a range of possibilities. But when he characterized Scenario A as “business as usual”, it became a prediction — what would happen without any significant policy change.

Scenario A is fairly close to B as far as CO2 was concerned, at least up to the present time. But A had no counteracting volcanic eruptions (as B did), and A included a wholly hypothetical forcing term for “other trace gases”. The difference in forcings (as projected to 2018) is quite considerable. This is why I consider A to have been an upper bound on ghg effects. As an upper bound, I have no problem with including a made-up term in order to include a buffer for unknowns. But it is inappropriate to then consider it “business as usual”.

Business as usual does not mean the most plausible. It simply means no action, no emission reductions. When it comes to CO2, that’s exactly what happened. If the CO2 emission data is accurate enough.

“When it comes to CO2, that’s exactly what happened.”

In fact, CO2 did follow quite closely scenario A. It was even closer to B, but there was little difference between them (for CO2). It was the relative slowdown in other gases that reduced the outcome forcing.

edimbukvarevic — CO2 is not relevant when comparing scenarios A&B; the concentrations remain quite close up to 2018. It’s the non-CO2 terms which are the primary difference between forcing profiles. See previous comment to Nick.

The way I learned it was that if the hypothesis doesn’t fit the data, either there is something wrong with the data we are using to test the model, something wrong with the model, or both. So it seems the bottom line is in the last paragraph: this is far from settled. Short of a time machine…

Ross McKitrick and John Christy, thank you for the essay.

Joel Achenbach (The Tempest) reminds us of what was said when Hansen shared his beliefs with Congress during “the brutally hot summer of 1988”: that “less than 10 years to make drastic cuts in greenhouse emissions, lest we reach a ‘tipping point’ at which the climate will be out of our control,” was all the time America had left.

Of course… nothing happened. All scientists should have been more skeptical back in ’88.

Well we did drastic cuts in fluorocarbon greenhouse gases, so we didn’t do nothing.

” Note that Scenarios A and B also represent upper and lower bounds for non-CO2 forcing as well, since Scenario A contains all trace gas effects and Scenario B contains none. So, we can treat these two scenarios as representing upper and lower bounds on a warming forecast range that contains within it the observed post-1980 increases in greenhouse gases. Consequently, there is no justification for a post-hoc dialling down of the greenhouse gas levels; nor should we dial down the associated forcing, since that is part of the model computation.”

If I understand that correctly, it is just wrong. Completely. Scenario B contained lots of trace gas effects, as did C. And the conclusion is completely wrong.

I have written a detailed analysis of the scenarios here, with links to sources and details. A quick summary of main sources:

1. Although Hansen didn’t graph the scenarios, we do have a file with the numbers, here. It is actually a file for a 1989 paper, but there is every indication the scenarios A,B,C are the same.

2. There are detailed discussions with graphs, from Gavin Schmidt recently, and from Steve McIntyre in 2008 (who got much more right than this article). I won’t give all the links here, because I will probably be sent to spam, but they are in my post linked above. SM also recalculated the scenarios from Hansen’s description; he gives numbers and plots.

In fact, the main reason Hansen’s result came between B and C was that methane and CFCs were overestimated in B and even in C. Here is the RC plot of the scenarios and outcomes:

https://s3-us-west-1.amazonaws.com/www.moyhu.org/2018/06/gavin1.png

Gavin also gives the combined forcings, which quantifies the placing of the outcome between B and C.

My calculation of the trends in the actual 30 yr prediction period 1988 to 2017 were

A: 0.302, B: 0.284, C: 0.123 °C/decade, with observed generally about 0.18

It doesn’t make sense to give error ranges, since the predictions don’t contain randomness.

Here is Gavin’s plot of the forcings. That is in effect the appropriate combined effect of the various trace gases, showing an outcome between B and C.

https://s3-us-west-1.amazonaws.com/www.moyhu.org/2018/06/gavin2.png

And here is my plot of the 1988-2017 trends for scenarios and surface temperature outcomes:

https://s3-us-west-1.amazonaws.com/www.moyhu.org/2018/06/bar.png

Allow Eli to post this again and encourage others to go read the long discussion of trace gas contributions in Appendix B of Hansen, Fung, Lacis, Rind, Lebedeff, Ruedy and Russell

https://pubs.giss.nasa.gov/docs/1988/1988_Hansen_ha02700w.pdf

The contributions of the trace gases after 1988 are substantial and shocking, by contrast CO2 is rather boring. Clearly if your point is to evaluate the model, you would run it again using observed forcings. If your point is to evaluate the scenario you would compare the assumed forcings in the paper to the observed ones to date as in the figure from Real Climate seen above. If your mission were to spit on Hansen you would mix and match as needed.

https://photos1.blogger.com/blogger/4284/1095/1600/Forcings.0.jpg

Thursday, June 01, 2006 As Mr Dooley says, trust everyone, but cut the cards…. Eli

“folk were claiming that Hansen had never said that scenario B was the most likely. Eli went and RTFR (J. Geo Res. 93 (1988) 9341), and sure enough Hansen et al. sure enough said that B was the most likely scenario

Until 2010, the difference in forcing between Hansen’s scenarios A and B comes entirely from changes in the concentrations of chlorofluorocarbons, methane, and nitrous oxide, and from volcanic eruptions. You can see this in Fig. 2 from the paper. That figure shows (copy below) forcings from CO2 increases (top), CO2 + trace gases (middle) and CO2 + trace gases + aerosols from volcanoes (bottom).

The dip in the bottom third near ~1982 represents the effect of the eruption of El Chichon; the later dips are guesses about when major eruptions would occur. Pinatubo came in the 90s, a bit earlier than assumed, but the depth of the effect was about right.”

Except that no volcanoes were factored into A later.

So the only reason that B and C are anywhere near reality is that they had a dose of volcanoes that did not occur.

Wonderful.

Nick- you are correct that B includes CFCs and methane, but not the other non-CO2 GHG’s. I missed that detail. I have revised the text above accordingly.

Ross,

B and C also have N2O. The point is that it is these gases that make the difference. CO2 was scarcely different in forecast in A and B, and the reality followed. What brought the forcing down was the unexpected pause in methane, and the reduction in CFCs that followed Montreal, which A and B were sceptical about (Montreal was 1989) but C allowed for.

Nick and Ross, good exchange here.

“Montreal was 1989”. Montreal was signed in Sept 1987; the US ratified it in April 1988. Jan 1989 was when it formally entered into force. By the time of Hansen’s testimony, the writing was on the wall about CFC reduction. However, the scenarios were created well before Montreal, possibly before Vienna (1985).

Nick,

Surely the error ranges should include errors of bias, not just randomness.

If you accept this, you need to accept that the error bounds should enclose most of all 3 projections because at the time in 1988 or so, all 3 were considered valid enough to publish. Therefore by 2016-7, the error estimate from the first graph above would be +/- 0.5C or so. Because the error has reached this much, it should be taken to be the error over the whole time period of the projections.

So where is the benefit from splitting hairs in arguments when the uncertainty of the T data is +/- 0.5C? Angels dancing on pins stuff? Geoff.

Geoff,

Yet again, scenarios are not predictions – they don’t have either error or bias. They are just an example of what you think might happen.

But it makes no sense to do a calculation of error or variance on the trend either. The scenario is just a simple mathematical function. Scenario A CO2 is an exponential a + b*1.015^n.
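Assuming, as stated elsewhere in this thread, that the Scenario A annual CO2 increment grows by 1.5% per year, the closed form a + b*1.015^n follows from summing a geometric series. Here $C_0$ is the level at the start of the extrapolation and $\Delta_0$ the increment in that year; both symbols are introduced for this sketch and are not Hansen's notation.

```latex
\begin{aligned}
\Delta_k &= \Delta_0\, r^k, \qquad r = 1.015 \\
C_n &= C_0 + \sum_{k=1}^{n} \Delta_k = C_0 + \Delta_0\,\frac{r\,(r^n - 1)}{r - 1} \\
    &= \underbrace{\left(C_0 - \frac{\Delta_0\, r}{r - 1}\right)}_{a} + \underbrace{\frac{\Delta_0\, r}{r - 1}}_{b}\, r^n
\end{aligned}
```

So the level itself is exactly "an exponential plus a constant", with both a and b fixed by the starting level and increment.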

Nick,

I did not use the terms ‘scenario’ or ‘prediction’, merely ‘projection’, which these clearly are.

There is value in putting confidence limits around projections. Most projections have ragged riders like ‘I’ll bet a lot on this being right’ or “This is an uncertain projection because we do not have good data to start with”.

I am saying that it is best to express this more mathematically, by the visual use of boundaries on graphs, for example.

Part of the drive behind this suggestion is to encourage discipline for those sloppy researchers who studiously avoid proper confidence limits or calculated error bounds when they know it will weaken their stories. This is understandable, but unhelpful.

There remains a serious impediment in a lot of climate science, namely, the lack of formal, proper error calculations as a routine practice. Geoff.

Of course error and bias are of interest in discussing scenarios. Their projections are the product of their assumptions and if scenarios are going to be of any use one needs to understand how likely (subjective or objective, take your pick) their assumptions are to help evaluate the scenario’s projections.

The first thing anyone should do when evaluating projections is to understand the likelihood of the assumptions. Wheat from chaff and all that.

Hence it is well worth asking e.g. what the likelihood of the exponential fit is given past observations etc etc and carrying that through to its impact on the projections, and if you are doing post hoc assessment you have even more information.

“Of course error and bias are of interest in discussing scenarios. “

You can’t speak of error or bias unless you have a notion of what is right. And scenarios don’t have that (else why are there three of them?). The scientist is basically punting to the reader – I can calculate these options – which do you think is most likely? Or which would you prefer to try to achieve?

Hansen’s scenario A for CO2 is a 1.5% annual increase in the annual increment. How can you put an error measure on that?
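A minimal Python sketch of that rule, comparing a year-by-year projection with the closed form a + b*1.015^n given above. The function names are hypothetical, and the starting level and increment used in the test (340 ppm, 1.5 ppm/yr) are round illustrative numbers, not Hansen's Appendix B values.

```python
def scenario_a_co2(c0, increment0, years, growth=0.015):
    """Project CO2 (ppm) when the annual increment itself grows by `growth` per year."""
    levels = []
    level, increment = c0, increment0
    for _ in range(years):
        increment *= 1 + growth  # the increment grows 1.5% per year by default
        level += increment
        levels.append(level)
    return levels

def scenario_a_closed_form(c0, increment0, n, growth=0.015):
    """Same projection after n years, written as a + b * r**n."""
    if growth == 0:
        raise ValueError("closed form needs a nonzero growth rate")
    r = 1 + growth
    b = increment0 * r / (r - 1)
    a = c0 - b
    return a + b * r ** n
```

The closed form needs a nonzero growth rate; with growth set to 0 the iterative version reduces to a straight line, as the test checks.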

I gave the example of an aircraft designer who gave performance figures for loads of 500kg and 1 ton. These are scenarios of how you might load your plane. How would you put an error figure on the 500 kg?

‘You can’t speak of error or bias unless you have a notion of what is right.’

And if you don’t ‘have a notion of what is right’ scenarios are useless.

‘have a notion of what is right’

Well yes.

The projection/prediction with error bars of what was going to happen anyway, independent of human influence, is the line that is missing.

The underlying thesis is that warming is causal and we are the cause.

The assumption is that this is right.

Pointing out that projections on certain terms are error free because they are based on assumed future data does not mean that an estimate of error should not be made.

Nowhere here is a null hypothesis tested against natural temperature change.

For meaning, that would be the projection/prediction worth seeing 30 years ago.

“Pointing out that projections on certain terms are error free “

The issue was whether the scenarios themselves should have error bars. And there just isn’t any basis for doing that. As to the projected temperatures, the normal thing nowadays would be to do an ensemble to estimate variability. But in 1988, that just wasn’t feasible.

“And if you don’t ‘have a notion of what is right’ scenarios are useless.”

OK, I’ll put it mathematically. A GCM is a function. It maps from a domain (scenarios) to a range (average temperature and much more). Like, say, y=f(x)=x^2. What does that function mean? You can say

let x=2, then y=4

let x=3, then y=9

It might be stochastic

let x=4, then y=16±2

It makes sense to talk of an error in y. But how can you quantify an error in x? What could it mean? It’s your choice.

‘But how can you quantify an error in x? What could it mean? It’s your choice.’

I choose a likelihood function on the domain, as does anyone else who wants scenario work to be useful.

“I choose a likelihood function on the domain”

Likelihood of what, though?

I’ll put it in computing terms. Hansen has a program that can be written in one line:

results=GCM(scenario)

You can enter any scenario you like. But the program takes a while to run, so you choose scenarios whose results you might find useful later, being somehow representative. That is a criterion, but how could you associate it with a likelihood function?

Scenarios are the product of their assumptions. Straightforward enough to develop likelihood functions for them (even if only subjective) and straightforward enough from there to derive one for the scenario.

Implicitly, people do this when they use scenarios (e.g. your term ‘representative’ is the language of likelihoods); it’s just that there seems to be resistance to formalising this.

Clearly if your point is to evaluate the model, you would run it again using observed forcings. If your point is to evaluate the scenario you would compare the assumed forcings in the paper to the observed ones to date as in the figure from Real Climate seen above.

This would be a great point if Hansen’s presentation had quietly noted there were no particular emissions policy implications to his work since his model couldn’t be expected to tie any particular emissions scenario to any particular concentrations or temperature trend and thus could only be fairly judged against whatever concentrations actually developed, instead of claiming (with the AC turned off and the windows open on a hot day) that major emissions policy changes were justified because he had a strong understanding of how emissions affect concentrations affect temperatures, enabling him to predict with high confidence three different temperature trends based on the one independent variable (and the only one Congress can affect): emissions policy.

Hansen et al. sure enough said that B was the most likely scenario

Since they represent emissions policy scenarios, that was a political prediction more than a scientific one (that is, he was predicting the policy in that statement, not its result, which he already claimed to know with high confidence). It would be unfair indeed to judge Hansen on his ability to predict the path of emissions policy, as opposed to its effects on concentrations and temperatures.

Sorry, my comment there probably should have gone into a different bucket.

At any rate, based on their comments I suspect Eli and Nick perhaps have simply not seen Hansen’s presentation, so I link it below. He explicitly refers to C as “draconian emissions cuts.” He laudably admits to some “major uncertainties” with respect to GCS and ocean heat uptake (but not regarding the relationship of emissions to concentrations)… but then expresses “a high degree of confidence” in his conclusions anyway.

http://image.guardian.co.uk/sys-files/Environment/documents/2008/06/23/ClimateChangeHearing1988.pdf

As Monty Python might say, “And now for something completely different.”

Rather than go after Dr. Hansen’s predictions, I choose to challenge the IPCC’s best estimate of ECS as being 3.0.

At the end, I document that I can support the Lewis & Curry value of ECS being much lower, 1.66.

Stay with me; I hope the trip will be worth it. It is unfortunate that I can’t post pictures, so everything comes from a link to my OneDrive.

For the UAH analysis I use the data from Mauna Loa for CO2. I do have a very precise fit. It is a quadratic fit with a sine wave on top.

https://1drv.ms/f/s!AkPliAI0REKhgZh4Jee-1Gw8oXbzdA
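The comment describes the CO2 fit only as a quadratic with a sine wave on top. Below is a minimal sketch of one way to do such a fit. It assumes the sine has a fixed one-year period (the Mauna Loa seasonal cycle) and that time is measured in years from a reference start. The function names and the synthetic demonstration series are hypothetical, not the commenter's actual code or data. With the period fixed, a sine of unknown amplitude and phase can be written as a sine term plus a cosine term. That makes the whole model linear in its coefficients, so ordinary least squares is enough.

```python
import numpy as np


def fit_co2(t, co2, period=1.0):
    """Fit co2 ~ D*t**2 + E*t + FF + A*sin(w*t) + B*cos(w*t), with w = 2*pi/period.

    t should be measured from a reference year (e.g. years since 1958) so that
    t**2 stays well conditioned. Returns the coefficients (D, E, FF, A, B).
    """
    t = np.asarray(t, dtype=float)
    w = 2.0 * np.pi / period
    design = np.column_stack([t**2, t, np.ones_like(t), np.sin(w * t), np.cos(w * t)])
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(co2, dtype=float), rcond=None)
    return coeffs


def co2_model(t, coeffs, period=1.0, include_seasonal=True):
    """Evaluate the fitted curve; include_seasonal=False keeps only the quadratic trend."""
    D, E, FF, A, B = coeffs
    t = np.asarray(t, dtype=float)
    trend = D * t**2 + E * t + FF
    if not include_seasonal:
        return trend
    w = 2.0 * np.pi / period
    return trend + A * np.sin(w * t) + B * np.cos(w * t)


# Synthetic demonstration series (NOT Mauna Loa data): monthly samples over 60 years.
t = np.arange(720) / 12
co2 = 0.0125 * t**2 + 0.8 * t + 315.0 + 3.0 * np.sin(2 * np.pi * t)
coeffs = fit_co2(t, co2)
```

Calling `co2_model(..., include_seasonal=False)` corresponds to ignoring the sine-wave portion when the fit is later used as the CO2 driver.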

I am expecting an update on UAH from Dr. Spencer any day now so this is with last month’s data. (BTW, I did receive the update and I think what I have here will do.)

https://1drv.ms/u/s!AkPliAI0REKhgZkASsIZ4gfFm9e4AA

Note that the figure does include a pause line. I added that feature because as the temperature drops, if it does, I anticipated a return of the pause line. BTW, its starting point is not cherry picked. I actually calculate where the slope would be minimal.
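The comment says the pause line's start is found by calculating where the slope would be minimal. Below is a minimal sketch of that search. It assumes "slope" means the ordinary least-squares trend from each candidate start to the end of the series. The function names and the `min_points` guard are assumptions, not the commenter's code.

```python
import numpy as np


def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    return float(np.dot(xc, y - y.mean()) / np.dot(xc, xc))


def pause_start(t, temps, min_points=24):
    """Return (start_index, slope) for the start whose trend to the end is smallest.

    Each candidate start keeps at least min_points samples (an assumed guard so
    that a trend is never fitted to just a few points at the end of the record).
    """
    t = np.asarray(t, dtype=float)
    temps = np.asarray(temps, dtype=float)
    best_index, best_slope = None, None
    for i in range(len(t) - min_points + 1):
        slope = ols_slope(t[i:], temps[i:])
        if best_slope is None or slope < best_slope:
            best_index, best_slope = i, slope
    return best_index, best_slope
```

In this search the start point is an output rather than an input, although the result still depends on the `min_points` guard.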

That figure is interesting but here is what I am after. We have all seen Dr. Spencer’s figure which shows how the models perform with respect to the balloon data. I changed that figure slightly.

Instead of the balloon data, I used a five-year moving average of the UAH data, with its fitted solution shown in red from the above figure. Instead of the model data, I substituted the curve implied by the best-estimate ECS value of 3.0.

https://1drv.ms/u/s!AkPliAI0REKhgZkBPGtRgiAEFBAaEw
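A five-year moving average of monthly data uses a 60-month window. Below is a minimal sketch. It assumes a trailing (not centred) window and a monthly series with no gaps; the comment does not say which convention was used. `moving_average` is a hypothetical helper name.

```python
import numpy as np


def moving_average(values, window=60):
    """Trailing moving average; window=60 is five years of monthly data.

    Returns len(values) - window + 1 points; each output is the mean of the
    `window` samples ending at that position.
    """
    values = np.asarray(values, dtype=float)
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    kernel = np.ones(window) / window
    return np.convolve(values, kernel, mode="valid")
```

A centred average would use the same kernel but would assign each output to the middle of its window rather than its end.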

Pay attention to one more thing. Not long ago Nic Lewis and Dr. Curry estimated that the ECS value of 3 is off by about a factor of 2. I believe their estimated value was 1.66. That is shown on the chart. It would seem with that value we are finally correlating with measurements. How in the world could the value be 3.0?

I don’t know what the answer is, but how serious is CO2 if the ECS value is 1.66? Is it really worth the expense we are going through?

It gets even better. Dr. Spencer reviewed what Nic Lewis and Dr. Curry did.

http://www.drroyspencer.com/2018/04/new-lewis-curry-study-concludes-climate-sensitivity-is-low/

Here is a very important statement by Dr. Spencer.

“If indeed some of the warming since the late 1800s was natural, the ECS would be even lower.”

Bingo!

With my cyclical fit I do think the ECS is lower, because I assume the cyclic component represents natural sources.

Dr. Spencer used this figure.

https://1drv.ms/u/s!AkPliAI0REKhgZkClctphXouBBnNLg

I have a much lower value of ECS that seems to work quite well with this figure.

It is one thing to ask how important CO2 is if the ECS is 1.66. It is something else if it is lower than 0.5. Some have suggested this value or lower.

Now for the part that matched the Lewis & Curry ECS value.

I used the 5-year moving average as the basis for the evaluation and, assuming that CO2 is responsible for everything, this is what I got.

y[i] = b[1] + b[2]*ln(co2/co21)/ln(2). The b values are the ones I estimate.

My CO2 characterization goes like this:

co21 = D*x[1]^2 + E*x[1] + FF

co2 = D*x[i]^2 + E*x[i] + FF

I ignored the sine wave portion of my CO2 fit.

https://1drv.ms/f/s!AkPliAI0REKhgZh4Jee-1Gw8oXbzdA

That ECS value is very close to the 1.66 identified by Lewis and Curry.
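Once the CO2 values are computed, the formula y[i] = b[1] + b[2]*ln(co2/co21)/ln(2) is linear in b[1] and b[2]. The b values can therefore be estimated by ordinary least squares. Below is a minimal sketch. It assumes co21 is the trend CO2 value at the first time step, as in co21 = D*x[1]^2 + E*x[1] + FF, with the sine term dropped. D, E, FF and the synthetic temperatures are hypothetical placeholders, not the commenter's fitted values. b[2] is the warming per CO2 doubling implied by the fit, which is the number compared with 1.66 above.

```python
import numpy as np


def fit_log2_co2(temps, co2):
    """Estimate b1, b2 in temps[i] = b1 + b2 * ln(co2[i] / co2[0]) / ln(2) by least squares.

    co2[0] plays the role of co21, so b2 is the warming per doubling of CO2
    implied by the fit.
    """
    co2 = np.asarray(co2, dtype=float)
    doublings = np.log(co2 / co2[0]) / np.log(2.0)
    design = np.column_stack([np.ones_like(doublings), doublings])
    (b1, b2), *_ = np.linalg.lstsq(design, np.asarray(temps, dtype=float), rcond=None)
    return float(b1), float(b2)


# Hypothetical quadratic CO2 trend with the sine term ignored; x is years since the start.
D, E, FF = 0.0125, 0.8, 315.0
x = np.arange(720) / 12
co2 = D * x**2 + E * x + FF
# Synthetic temperatures built with b1 = 0.1 and b2 = 1.7, so the fit should recover them.
temps = 0.1 + 1.7 * np.log2(co2 / co2[0])
b1, b2 = fit_log2_co2(temps, co2)
```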

It is beyond me how a value of ECS of 3.0 can be at all justified.

An effective TCR in excess of 2 can easily be justified by fitting temperature to CO2. The scaling here is exactly 100 ppm per degree and as you can see it works well. For the range of 300-400 ppm, the sensitivity is 2.4 C per doubling.

http://woodfortrees.org/plot/esrl-co2/mean:240/mean:120/derivative/scale:12
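The step from "100 ppm per degree" to "2.4 C per doubling" can be checked with a few lines of arithmetic. The sketch below equates the linear scaling with a logarithmic response over the same 300-400 ppm interval. `per_doubling` is a hypothetical helper name.

```python
import math


def per_doubling(ppm_per_degree, c_low, c_high):
    """Convert a linear scaling (ppm of CO2 per degree C) into degrees C per CO2 doubling.

    Over [c_low, c_high] the linear rule gives dT = (c_high - c_low) / ppm_per_degree,
    while a logarithmic response gives dT = S * log2(c_high / c_low); equating the
    two and solving for S gives the sensitivity per doubling over that interval.
    """
    delta_t = (c_high - c_low) / ppm_per_degree
    return delta_t / math.log2(c_high / c_low)


print(round(per_doubling(100.0, 300.0, 400.0), 2))  # 2.41
```

Because the linear rule and the logarithmic response diverge as concentrations grow, the per-doubling figure applies only to the interval used.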

Using much lower numbers dangerously underestimates past warming, which is odd because their method supposedly considered it.

Jim D: You have been corrected so often for your misleading method and WfT operations, which blame the whole T-change on CO2 instead of doing it the correct way: net T-change vs. net forcing change! IMO you do it intentionally, and no further discussion is useful.

This relation, derived from 60 years of data, is better guidance because it accounts for the factors that change in proportion to CO2. CO2 accounts for 70-100% of that change, and the rest is correlated closely enough with CO2 for CO2 on its own to be a good fit to the forcing change. There are reasons these curves look so similar and why projecting warming from CO2 alone works so well for this period.

Do we really need to listen to people who still think that urban areas show more warming than rural areas? BEST proved that rural areas warmed at the same rate.

Do we really need to listen to people who think ground stations are “contaminated”, even though we all know (as Dr. Curry knows) that, statistically, stations that show less warming than they should are just as common as those that show more warming than they should?

I like reading and listening to climate scientists to get the science. Policy should follow the science, not the other way around.

statistically stations that show less warming than they should are just as common as those that show more warming than they should.

“Statistically,” stations show the temperature that occurs at that station; who ever said they show something different than they should? Stations are placed to measure temperature at that place, and the temperature they measure is the temperature they should measure. If temperatures are different in different kinds of places, we learn from that; we don’t just say it must be wrong.

… and if the “climate scientists” are actually a bunch of hucksters lining their (and their crony buddies’) pockets at the expense of the poor and middle class, then it looks to me like you are getting pseudo-science. I suggest you always be wary of any group that scurries into the dark when faced with honest inquiry. Fact is, we have no idea what increasing levels of CO2 will bring; it is simply beyond our ability to determine. Use energy wisely, and we will be just fine.

That’s some serious “but what if…” absurdity. But hey, what if all scientists who are pretending that CO2 is not the primary forcing are nothing but hucksters? Then it looks to me like you are being duped by the oil and gas industries!

Remember how Heartland was paid by the cigarette companies to tell us that cigarette smoking did not cause cancer? Took some people several decades to finally admit they were duped.

Fact is, we do know that CO2 is the primary forcing.

No it is the sun that is the primary forcing.

Scott on UHI,

My home city of Melbourne has been studied for years as a candidate for UHI effect.

University scientists think the effect is real.

Would you like to read this and linked references and report back on UHI? Geoff

https://www.researchgate.net/publication/266267164_The_urban_heat_island_in_Melbourne_drivers_spatial_and_temporal_variability_and_the_vital_role_of_stormwater

Melbourne

Yes it has UHI

NO, the UHI does not impact the global record

WHY?

1. It is rather rare. Cities that large make up a tiny portion of all records.

2. If you are smart your algorithm will DE BIAS the series.

Here: the raw data show a trend of 1.07.

De-biased: 0.42.

http://berkeleyearth.lbl.gov/stations/151813

But hey, skeptics live in a world where they never check the data.

1. They never actually COUNT the number of stations that are in high population centers.

2. They never talk about adjustments that cool the record.

But here is a question for you.

Of the 40K stations in the database, where do you think Melbourne ranks in terms of population density?

Hi Steven,

Much of the Australian historic temperature data are from max/min thermometers that do not record the time of day when their maxima or minima were reached. From other studies, like the Melbourne Uni ones, we can surmise that Tmax and Tmin will not usually occur at a time when UHI is strong for the day. So then you suggest we use a methodology that subtracts a prescribed amount of UHI warming from a reading that was not showing it in any case.

Then you say, well, if it was not showing UHI, where is the argument?

The argument comes at transitions to other methods of recording Tmax and Tmin, such as selecting the highest and lowest of 24 hourly readings each day. While this can be valid if self-contained, it is not valid to transition from the old Tmax/Tmin with empirical UHI adjustment to the later method.
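The two recording methods need not agree, and a small comparison shows why. The sketch compares the extremes of a finely sampled day with the highest and lowest of its 24 hourly readings. The minute-by-minute, sine-shaped day is synthetic. The code does not model liquid-in-glass max/min thermometers or UHI. It only shows that hourly sampling can miss extremes that fall between the hours. The function names are hypothetical.

```python
import math


def hourly_extremes(minute_readings):
    """Highest and lowest of the 24 on-the-hour readings from a 1440-minute day."""
    hourly = minute_readings[::60]
    return max(hourly), min(hourly)


def sampling_shortfall(minute_readings):
    """How far the hourly method falls short of the true daily extremes (both >= 0)."""
    true_max, true_min = max(minute_readings), min(minute_readings)
    h_max, h_min = hourly_extremes(minute_readings)
    return true_max - h_max, h_min - true_min


# Synthetic day (not station data): peak at 15:30 and trough at 03:30, between hourly samples.
day = [20.0 + 8.0 * math.sin(2 * math.pi * (m - 570) / 1440) for m in range(1440)]
max_gap, min_gap = sampling_shortfall(day)
```

Because the hourly readings are a subset of the full record, the hourly maximum can never exceed the true maximum, and the hourly minimum can never fall below the true minimum.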

The empirical adjustment is also wrong, wrong, wrong for several reasons: one just given, another that there is a lack of study of the relation between population and UHI (though I am not in denial that there might be one; it is simply fiendishly hard to get it right in every case because critical metadata are not there).

UHI is a big problem. It cannot be satisfactorily adjusted in hindsight from LIG thermometers with max/min pegs. I’ve spent day after day trying to find ways to account for it in Australian historic data and have failed to make progress every time. There is no useful difference between urban and rural, because the more rural you get, the worse the data quality in general, and noise overtakes the effort before you can get to an answer. Besides, it is easy to imagine scenarios where a population of one person creates high UHI effects.

These are some reasons why I prefer UAH data when its application is appropriate. Yes, I have read and re-read the adjustments that are applied. There is a difference between logical, OK adjustments and adjustments made because the data look better for them. The UAH data satisfy these needs, in my view, better than any other global scheme including BEST.

You ask “Of the 40K stations in the database, where do you think Melbourne ranks in terms of population density?”. I guess I know the rough answer to that, but for reasons just given (and more not) it is the wrong question. The right question is “What damage have we done to data integrity by attempting to make illogical, under-researched and capricious adjustments for UHI?” Face it, UHI happens and it can have a large effect. The trouble is with “can have” that cannot be turned into “does have”.

Keep up the good work. You’ll catch up soon, we hope. Geoff.

Scott Koontz: “Policy should follow the science, not the other way around.”

Assuming you believe that settled science predicts global warming to an extent which must be considered dangerous to the health of the planet; and that we must greatly reduce the world’s carbon emissions to avoid serious damage to ourselves and to the environment, then what would be your proposed carbon reduction targets, implemented over what period of time?

What governmental policies, what technical and political strategies, and what kinds of specific actions would you propose be adopted in furtherance of those carbon reduction targets, as they are to be implemented over the timeframes you propose?

The question could be turned around. Based on what you know so far, is it better to be at 2100 with (a) 600-700 ppm and rising CO2 levels or (b) less than 500 ppm and CO2 stabilized or declining. If you were setting a target, which would you favor?

Jim D: “The question could be turned around. Based on what you know so far, is it better to be at 2100 with (a) 600-700 ppm and rising CO2 levels or (b) less than 500 ppm and CO2 stabilized or declining. If you were setting a target, which would you favor?”

Defenders of today’s mainstream climate science talk endlessly about the truth of the science, but say very little concerning what specific actions must be taken, and what kinds of difficult choices must be made, to get from here to there in terms of achieving specifically-stated emission reduction targets over a specifically-stated carbon reduction schedule.

Scott Koontz and Jim D, you are being given a golden opportunity here to break that pattern. Let’s see if you will take it.

OK, the question I originally asked was straightforward, and so why does it need to be turned around? But let’s play Jim D’s game and suppose that we as a nation decide to choose his option (b) as a basis for establishing America’s carbon reduction targets, as would be stated in terms of percent reduction from a Year 2020 baseline.

What specific emission reduction targets would you propose, stated in terms of percent reduction from a Year 2020 baseline? What would be your proposed schedule for achieving those emission reduction targets? What governmental policies, what technical and political strategies, and what kinds of specific actions would you propose be adopted in furtherance of those carbon reduction targets, as they are to be implemented over the timeframes you would propose?

It needs to be turned around because first you need to state what an ideal world would look like in 2100, then figure out the technology to get there. Get the questions in the right order. If you don’t agree that a stabilized climate below 500 ppm is better than 650 ppm and rising, we can stop there and discuss that instead because you are then saying no need to even try. “What do we want?” is the first question, “How do we get there?” is the second in any policy discussion.

As for numbers, I would say (caveat: my own numbers) that even a 50% reduction in emission rates between 2020 and 2100 would stabilize the climate at 2 C. That works out at reducing by 2.5 GtCO2 per decade from today’s 40 GtCO2 emission rate. This averages less than 1% per year, which I don’t think is asking the impossible. The current growth rate is still 2% per year, so a turnaround is needed from growth to reduction, but modest.
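The arithmetic in this comment can be checked directly. The sketch below uses only the figures given: 40 GtCO2 per year in 2020 and a 50% cut by 2100. It compares a straight-line path with a constant percentage cut per year, which is one way to read "averages less than 1% per year".

```python
start_rate = 40.0       # GtCO2 per year in 2020 (figure from the comment)
target_fraction = 0.5   # emissions in 2100 as a fraction of 2020 (a 50% reduction)
years = 2100 - 2020

# Straight-line path: the same absolute cut every decade.
cut_per_decade = start_rate * (1 - target_fraction) / (years / 10)

# Constant-percentage path: the same relative cut every year.
annual_pct_cut = 100 * (1 - target_fraction ** (1 / years))

print(cut_per_decade)            # 2.5 (GtCO2 per decade)
print(round(annual_pct_cut, 2))  # 0.86 (% per year)
```

On the straight-line path the yearly cut is 0.25 GtCO2. That is 0.625% of the 2020 rate but 1.25% of the 2100 rate, so the percentage cut rises over time even though the absolute cut stays constant.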

“then what would be your proposed carbon reduction targets, implemented over what period of time?”

Shouldn’t that be left to the governments? The problem is that the world has been “successfully” stalled by the same tactics used by cigarette companies and the “scientists” they paid to announce that cigarettes were not harmful to you. Note that we are still hearing from the same groups that second-hand smoke is OK for your kids.

What would I personally propose? Who cares? Nothing will happen until the scientists who are paid to muddy the waters, and the politicians (who are paid by oil interests), stop messing around. Throwing a snowball on the Senate floor is a prime example of the lunacy.

So here we are 30 years later.

Winters are a little bit warmer in many places. We are told sea level has increased by about three inches but we don’t notice when we go to the same beaches of 30 years ago. There is less sea-ice around the poles, but we still get about the same snow. It melts a little bit earlier most years. And that is about everything we notice. What a huge let-down after 30 years of massive hysteria from the media and the scientists. Nobody notices anything except it is a little bit nicer. So much for global warming.

We are told sea level has increased by about three inches but we don’t notice when we go to the same beaches of 30 years ago.

Profoundly ridiculous.

I’ve been going to the same beach-front house since the early 70’s. Coastline and beaches haven’t changed in the least. Waterfront is still at the same distance. In the early 90’s I told my parents we should sell it because sea level rise would make it lose all value. I’m glad they didn’t pay any attention to me. It is worth a lot more now, and at the present sea level rise rate could go for over a century more.

I am right now at the exact same beach (NW USA) that I first visited in 1962 and have visited every year since. While I could not tell you what the sea level actually is, there has been NO NEED for anyone to do anything about sea level rise in the last 60 years.

What’s ridiculous is that Scripps Institution of Oceanography makes no contingency plans to vacate its lower (beachfront) campus, while its grant-grabbing employees feed polemical fodder for catastrophic sea-level-rise scenarios to the media.

United States Naval Academy: where they can’t hit much of anything with a torpedo unless they know the temperature of the ocean water through which it will travel. Since probably at least WW2:

https://i.imgur.com/rqbjazg.png

“The citizens of Hyde County have dealt with flooding issues since the incorporation of Hyde County in 1712,” said Kris Noble, the county’s planning and economic development director. “It’s just one of the things we deal with.”

In Hyde County, their Tar Heel friends to the south, they have had subsidence rates of 1” or more per decade. It’s well known the Eastern Seaboard is sinking like a rock. Even at the time of Benjamin Franklin’s birth, that area had flooding problems.

To really tug at the heartstrings, you could have shown a picture of Bangkok flooding, where subsidence rates in the past were 40 times the GMSLR.

https://i.imgur.com/qQUJVtl.png

Winters are a little bit warmer in many places.

Last winter was a lot colder in many places. Go Figure!

One winter is weather.

There is less sea-ice around the poles, but we still get about the same snow.

Actually, I have seen news stories in recent years of snow in Rome, the Holy Land and even Egypt.

Actual measured precipitation has increased as temperatures increased. The Texas State Climate Scientist has said that is true in Texas over the past hundred years or so.

Ice core data show that it snows more in warmer times, that is, when there is less sea ice.

Weather anomalies get reported a lot more these days.

“add the 2015/16 El Nino warming to all three Scenarios”

This is getting to be desperate stuff. Why not add the two big La Ninas (2008 and 2011/12), which were primarily responsible for the downward excursion that the 2016 El Nino restored?

In fact, of course, ENSO events were not part of Hansen’s predictions.

Hansen: The Sky Is Falling

Hansen et al. (2016) continue to trumpet massive climate alarms requiring “negative CO2” etc. That would “only” require “89-535 trillion dollars”! What then would keep us from descending into the next glaciation? Why bother with scientific validation when you can reap windfalls from alarms?

Young People’s Burden: Requirement of Negative CO2 Emissions

https://arxiv.org/ftp/arxiv/papers/1609/1609.05878.pdf

Actually, it is arguable. It illustrates the use of manufactured nonsense to generate panic and vast overreaction that will doom millions to poverty and lower living standards. A perfect example of the use of fear to bludgeon folks into kowtowing to the radical left.

Where to start with this shoddy analysis? How about with something simple:

“Hansen added in an Agung-strength volcanic event in Scenarios B and C in 2015, which caused the temperatures to drop well below trend, with the effect persisting into 2017. This was not a forecast, it was just an arbitrary guess, and no such volcano occurred.”

Had McKitrick and Christy read Hansen et al. (1988) more carefully, they would have seen that the GISS modeling was initiated in 1983 and continued through 1985. With respect to volcanic aerosols, the authors explained their modeling parameters as follows:

“Stratospheric aerosols provide a second variable climate forcing in our experiments. This forcing is identical in all three experiments for the period 1958-1985, during which time there were two substantial volcanic eruptions, Agung in 1963 and El Chicón in 1982. In scenarios B and C, additional large volcanoes are inserted in the year 1995 (identical in properties to El Chicón), in the year 2015 (identical to Agung), and in the year 2025 (identical to El Chicón), while in scenario A no additional volcanic aerosols are included after those from El Chicón have decayed to the background stratospheric aerosol level. The stratospheric aerosols in scenario A are thus an extreme case, amounting to an assumption that the next few decades will be similar to the few decades before 1963, which were free of any volcanic eruptions creating large stratospheric optical depths. Scenarios B and C in effect use the assumption that the mean stratospheric aerosol optical depth during the next few decades will be comparable to that in the volcanically active period 1958-1985.”
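The volcanic assumptions in the quoted passage amount to a small lookup table. The sketch below encodes them using only the years and analogue eruptions the quote names. The names `INSERTED_VOLCANOES` and `inserted_between` are hypothetical, and aerosol optical depths are not represented. The table makes it easy to see which inserted eruptions fall inside a given comparison window.

```python
# Eruptions inserted after the observed 1958-1985 record, per the quoted passage.
# Each entry is (year, observed eruption whose properties it copies).
INSERTED_VOLCANOES = {
    "A": [],  # no additional volcanic aerosols once El Chicón's have decayed
    "B": [(1995, "El Chicón"), (2015, "Agung"), (2025, "El Chicón")],
    "C": [(1995, "El Chicón"), (2015, "Agung"), (2025, "El Chicón")],
}


def inserted_between(scenario, first_year, last_year):
    """Inserted eruptions in a scenario that fall within [first_year, last_year]."""
    return [(year, analogue) for year, analogue in INSERTED_VOLCANOES[scenario]
            if first_year <= year <= last_year]


print(inserted_between("B", 1988, 2017))  # [(1995, 'El Chicón'), (2015, 'Agung')]
```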

Note that a high level of stratospheric aerosols *did* occur because of Mount Pinatubo’s 1991 eruption, so McKitrick’s and Christy’s gratuitous shot at Hansen et al. is even more misplaced.

Hansen quoted above: “which were free of any volcanic eruptions creating large stratospheric optical depths.”

Silly me, I thought volcanoes might create smaller optical depths. Geoff.

‘Optical depth’ was used synonymously with ‘optical thickness’ in the paper.

Magma,

Do you mean that material brought into the stratosphere increases the optical depth or its synonym optical thickness? Surely they are decreased. Geoff.

“How accurate were James Hansen’s 1988 testimony and subsequent JGR article forecasts of global warming?”

Hansen’s Senate presentation was based on research that he and colleagues had been working on since at least 1982. Their 24-page 1988 JGR paper was submitted January 25, 1988, five months before Hansen’s Senate testimony. The use of “subsequent” in this commentary is misleading, or deceptive.

A rather curious but misleading statement at the top of the post.

What Hansen, Fung, Lacis, Rind, Lebedeff, Ruedy, Russell, and Stone did was graph the FORCINGS.

https://photos1.blogger.com/blogger/4284/1095/1600/Forcings.0.jpg

“was graph the FORCINGS”

And they also gave the explicit formulae that related forcings to concentrations.

To my humble eyes, the vertical axis reads °C. That’s not forcing; that is warming, and it includes an over-the-top climate sensitivity.

Forcings were stated as changes in temperature in the 1988 article. FWIW there is a simple proportionality.

Your point is somewhat like that of an organic-chemist friend of Eli’s who insists that it is wrong to use wavenumbers for just about everything.

To continue a bit: the emphasis on the global temperature curve is indeed naive. The paper is much richer than that, predicting patterns of warming. Eli has something to say about that:

http://rabett.blogspot.com/2018/07/hansen-1988-retrouve.html

https://2.bp.blogspot.com/-f6UtO9sKY9s/WzuFYvSetFI/AAAAAAAAEZQ/2E7LCXyTprIcSFPjcMaY4tE8amOpWI6UgCLcBGAs/s1600/Untitled.png

Eli

I read your interesting article.

What was missing from your analysis, and from Hansen’s original screed, are the coloured anomalies for the last time there was considerable Arctic amplification, from around 1918 to around 1942.

The modern warming came after a cold period around 1955 to 1969 or so and consequently it gives a picture that is out of context and exaggerated if it is not related to the earlier warming.

Hansen used the Mitchell curves and other data and must have been aware of the earlier warming.

Tonyb

Indeed – one reason the response is lower than expected is because the Hot Spot failed to materialize. This means both the negative lapse rate feedback and much of the positive water vapor feedback did not occur as modeled.

The result is global warming at the low end, which is exactly what the observations indicate.

Monolithic (single-box) energy balance models assume that the land and ocean warm at the same rate when they don’t, and with the delay in the ocean warming comes a delay in the water vapor feedback and hot spot. This is why EBMs over historic data would underestimate the ECS. They are just too simple to represent the multi-box system that is the climate system.
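The difference between a single-box model and a multi-box system can be sketched by running a one-box and a two-box (surface plus deep ocean) energy balance model under a step forcing. This is a minimal illustration, not the model used by Lewis & Curry or anyone else in the thread. All parameter values (feedback parameter, heat capacities, exchange coefficient, forcing) are assumptions chosen for illustration. The sketch shows the deep ocean delaying surface warming while both models reach the same equilibrium, F/lam. It does not by itself quantify any bias in EBM-based ECS estimates.

```python
def simulate(forcing, years, dt=0.1, lam=1.2, c=8.0, c_deep=100.0, gamma=0.7, two_box=True):
    """Surface warming after `years` of a constant forcing, by explicit Euler steps.

    Units: forcing and lam * T in W m-2; heat capacities c and c_deep in
    W yr m-2 K-1; gamma (surface/deep-ocean exchange) in W m-2 K-1; time in years.
    With two_box=False the deep ocean is switched off, giving a one-box model.
    All default parameter values are illustrative assumptions.
    """
    t_surf, t_deep = 0.0, 0.0
    for _ in range(int(round(years / dt))):
        exchange = gamma * (t_surf - t_deep) if two_box else 0.0
        d_surf = (forcing - lam * t_surf - exchange) / c
        d_deep = exchange / c_deep
        t_surf += dt * d_surf
        t_deep += dt * d_deep
    return t_surf


F = 3.7  # assumed forcing for a CO2 doubling, W m-2
one_box_20yr = simulate(F, 20, two_box=False)
two_box_20yr = simulate(F, 20)
equilibrium = F / 1.2  # same in both models: the deep box stops taking up heat once it has warmed
```

After 20 years the two-box surface is still well below the one-box value. That gap is the delay the comment attributes to the ocean.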

“Everything should be made as simple as possible, but not simpler. – Albert Einstein ”

Skeptics complain that the climate system is too complex to model, but then while saying that they also rely on this type of simplicity. Go figure.

models assume

You are very good at finding reasons why models have failed, but not so good at pointing out any validation or verification.

This does not distinguish models from superstition.

Not according to the literature.

http://iopscience.iop.org/article/10.1088/1748-9326/10/5/054007/meta

Please provide a citation for your assertion.

Please provide a citation for your assertion.

Of course, the IPCC is an obvious choice:

http://jonova.s3.amazonaws.com/graphs/hot-spot/hot-spot-model-predicted.gif

For those willing to look, linear trends are quite calculable and demonstrative from NOAA’s RATPAC, from RSS’ MSU, and from NASA’s MSU:

https://i2.wp.com/turbulenteddies.files.wordpress.com/2018/06/hotspot_2017.png

Now, though the further back one goes, the more instrument and other RAOB uncertainties one encounters, one can calculate from the beginning of the RAOB era, 1958, and find a hint of a hot spot:

https://i0.wp.com/turbulenteddies.files.wordpress.com/2018/07/hot_spot_1958_thru_2017.png

But neither the intensity, nor the vertical or horizontal extent would appear to constitute a hot spot.

An actual literature citation which backs your assertions that “the Hot Spot failed to materialize.”

Not annotated pictures.

An actual literature citation which backs your assertions that “the Hot Spot failed to materialize.”

Not annotated pictures.

Consider whether you are suffering from denial, secondary to confirmation bias.

Choosing to ignore even the IPCC and choosing not to examine the data yourself is certainly consistent.

The citation does not back up your claim.

Which was: “the Hot Spot failed to materialize. This means both the negative lapse rate feedback and much of the positive water vapor feedback did not occur as modeled.”

A citation which claims this, please.

TE, you either like or don’t like monolithic EBMs being used for ECS. I think they miss observed delaying processes which make them way too simple for ECS.

Re: “Indeed – one reason the response is lower than expected is because the Hot Spot failed to materialize.”

You’ve gone back to repeating your usual falsehoods on this topic. How tedious. The hot spot exists, since the tropical upper troposphere warms faster than the tropical near-surface. That has been shown in paper after paper. For example:

In satellite data:

#1 : “Contribution of stratospheric cooling to satellite-inferred tropospheric temperature trends”

#2 : “Temperature trends at the surface and in the troposphere”

#3 : “Removing diurnal cycle contamination in satellite-derived tropospheric temperatures: understanding tropical tropospheric trend discrepancies”, table 4

#4 : “Comparing tropospheric warming in climate models and satellite data”, figure 9B

In radiosonde (weather balloon) data:

#5 : “Internal variability in simulated and observed tropical tropospheric temperature trends”, figures 2c and 4c

#6 : “Atmospheric changes through 2012 as shown by iteratively homogenized radiosonde temperature and wind data (IUKv2)”, figures 1 and 2

#7 : “New estimates of tropical mean temperature trend profiles from zonal mean historical radiosonde and pilot balloon wind shear observations”, figure 9

#8 : “Reexamining the warming in the tropical upper troposphere: Models versus radiosonde observations”, figure 3 and table 1

In re-analyses:

#9 : “Detection and analysis of an amplified warming of the Sahara Desert”, figure 7

#10 : “Westward shift of western North Pacific tropical cyclogenesis”, figure 4b

#11 : “Influence of tropical tropopause layer cooling on Atlantic hurricane activity”, figure 4

#12 : “Estimating low-frequency variability and trends in atmospheric temperature using ERA-Interim”, figure 23 and page 351

Re: “This means both the negative lapse rate feedback and much of the positive water vapor feedback did not occur as modeled.”

The hot spot is not about the positive water vapor feedback; it’s about the lapse rate feedback. And positive water vapor feedback has been observed, including in the troposphere. Please go do some reading on this. For example:

“Upper-tropospheric moistening in response to anthropogenic warming”

“Global water vapor trend from 1988 to 2011 and its diurnal asymmetry based on GPS, radiosonde, and microwave satellite measurements”

“An analysis of tropospheric humidity trends from radiosondes”

“An assessment of tropospheric water vapor feedback using radiative kernels”

Re: “Of course, the IPCC is an obvious choice:”

What you just did was horribly misleading. As I’ve noted elsewhere, you’re using a misleading color-scale and a misleading time-frame that renders your model-data comparisons invalid.

[Hint: the two panels from your fabricated image don’t cover the same time-frame, and they don’t use the same color-scale]

http://blamethenoctambulantjoycean.blogspot.com/2018/02/myth-ccsp-presented-evidence-against.html

You also conveniently left out what your own 2006 source said about the misleading, out-dated HadAT2 image you’re abusing:

“Systematic, historically varying biases in day-time relative to night-time radiosonde temperature data are important, particularly in the tropics […]. These are likely to have been poorly accounted for by present approaches to quality controlling such data […] and may seriously affect trends”

https://downloads.globalchange.gov/sap/sap1-1/sap1-1-final-all.pdf

Of course, climate science didn’t stop in 2006 with the report you abused. Scientists have been working to remedy the aforementioned issues. You simply continue to ignore the relevant evidence on this, as expected. I’ll present some illustrative images depicting what you’re avoiding:

https://twitter.com/AtomsksSanakan/status/1013105778763468800

https://twitter.com/AtomsksSanakan/status/1013106417170108417

Since 1979, HotSpot?

RATPAC? No.

RATPAC only reliable stations? No.

UAH MSU? No.

RSS MSU? No.

NOAA STAR MSU? No.

By the way, with regards to IUK, the data reputedly indicating a hot spot, do know that the data set the paper was based on is flawed.

Per the web site:

“If you downloaded data prior to 1 May 2015, please obtain the corrected version 2.01 or 2.2015. The original version 2.0 had a date-registration error which affected temperatures.”

Re: “Since 1979, HotSpot?”

Like usual, you’re just willfully ignoring the evidence cited to you. That is, after all, how you dodge evidence of the hot spot.

And congratulations on cherry-picking 1979 as your start point, when it’s well-known that radiosonde analyses under-estimate post-1970s tropospheric warming trends, due to 1980s changes in radiosonde equipment. I even quoted this being pointed out by the source you abuse. Yet you still dodge the point. Amazing.

We both know that explains why you don’t want to look at pre-1979 radiosonde data, lest you run into the hot spot that you’re committed to denying. For example, take the outdated HadAT2 analysis you initially cited via Joanne Nova’s garbage, denialist blog:

https://4.bp.blogspot.com/-ylmxpHIufh4/Wq3B7WTk8GI/AAAAAAAABTg/twZeoZnXglsQLwJoXKaMxzUjJJbIDvp1ACLcBGAs/s1600/HadAt2%2Bimage.png

(Figure 11 of: “Revisiting radiosonde upper-air temperatures from 1958 to 2002”)

Re: “RATPAC? No.”

Wrong.

https://4.bp.blogspot.com/-f2Gv1rCXRAA/Wq24QHsiXHI/AAAAAAAABTE/rjrqwQWftLckYf9vRhDqBRGhn-zIb1AnACLcBGAs/s1600/RATPAC%2Bimage.PNG

(Figure 4 of: “Radiosonde Atmospheric Temperature Products for Assessing Climate (RATPAC): A new data set of large-area anomaly time series”)

Re: “By the way, with regards to IUK, the data reputedly indicating a hot spot, do know that the data set the paper was based on is flawed.”

Did you know the subsequent versions of IUKv2 still show the hot spot, as even denialists/contrarians like John Christy and Roy Spencer admit?

Seriously, your evasions are becoming tedious.

Re: “UAH MSU? No.

RSS MSU? No.

NOAA STAR MSU? No.”

Wrong again:

http://journals.ametsoc.org/na101/home/literatum/publisher/ams/journals/content/clim/2015/15200442-28.6/jcli-d-13-00767.1/20150309/images/large/jcli-d-13-00767.1-t4.jpeg

(Table 4 of: “Removing diurnal cycle contamination in satellite-derived tropospheric temperatures: understanding tropical tropospheric trend discrepancies”)

RSS’ amplification ratio is anomalously low, due to RSSv3 under-estimating mid- to upper-tropospheric warming. RSS corrected this in their subsequent work, resulting in amplification ratios on par with NOAA/STAR. That’s covered in papers such as:

“Sensitivity of satellite-derived tropospheric temperature trends to the diurnal cycle adjustment”

“Troposphere-stratosphere temperature trends derived from satellite data compared with ensemble simulations from WACCM”

So RSS and NOAA/STAR show the hot spot. UAH is the outlier that doesn’t.
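The amplification ratio compared in Table 4 is commonly defined as the tropospheric warming trend divided by the surface warming trend over the same period and region. As a minimal sketch, assuming ordinary least-squares trends (the cited papers may use different trend estimators, layers and regions), the calculation looks like this in Python; the function names are hypothetical:

```python
def ols_trend_per_decade(years, anomalies):
    """Ordinary least-squares slope of anomalies vs. year, in units per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return 10.0 * sxy / sxx


def amplification_ratio(years, troposphere, surface):
    """Tropospheric trend divided by surface trend; values above 1 mean amplification."""
    return ols_trend_per_decade(years, troposphere) / ols_trend_per_decade(years, surface)
```

For example, with hypothetical synthetic series in which the troposphere warms at 0.24 C/decade and the surface at 0.17 C/decade, `amplification_ratio` returns about 1.41, i.e. a tropospheric trend roughly 40% larger than the surface trend.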

By the way, you conveniently overlooked other satellite-based analyses, such as the UW analysis that shows the hot spot and which I cited above. I wonder why (I already know why; it’s because they show the hot spot that you’re committed to denying).

https://twitter.com/AtomsksSanakan/status/1013529128983826434

https://twitter.com/AtomsksSanakan/status/1013528056261218306

And let’s not even get started on re-analyses like ERA-I that also show the hot spot you’re committed to denying:

https://twitter.com/AtomsksSanakan/status/1013236156929060864

You really should know better at this point, since ERA-I was pointed out to you before and you admitted it showed the hot spot. But here you are, conveniently cherry-picking analyses in order to avoid that point. Amazing.

“Hmmm… That is interesting. The ERA indicates a Hot Spot. It’s skinny and subdued, but it’s there.”

https://judithcurry.com/2016/08/01/assessing-atmospheric-temperature-data-sets-for-climate-studies/#comment-800423

“Second, applying a post-hoc bias correction to the forcing ignores the fact that converting GHG increases into forcing is an essential part of the modeling.”

There seems to be some ignorance about GCMs here. Converting GHG increases into forcing is not a part of the modelling. GCMs like Hansen’s work with gas concentrations directly. Forcings are diagnosed, frequently from the model output.


“Inferred” is more accurate. “Diagnosed” implies more assurance than is properly warranted, considering the large uncertainties inherent in this entire exercise.

There are far more important kinds of absurdity to this debate about Hansen 1988.

(1) It is cited as evidence in the climate policy debate about the validity of climate models. We’re told it is valid evidence because of blog posts. No matter what journalists say, it’s weak tea.

(2) What happened to the peer-reviewed literature? If this was a milestone 25 year climate prediction, we should have seen papers about it in Nature or Science. When will we see papers about the 30 year history? With the fate of the world at stake, tipping points and such, I hope they’re working fast.

(3) Why fiddle with these “adjustments” to the model? Why not rerun it with actual emissions and volcanoes since its publication? That also eliminates any effect from tuning.

I have found one (only one) published – but not peer-reviewed – paper attempting to do so in a major journal: “Skill and uncertainty in climate models” by Julia C. Hargreaves at WIREs: Climate Change, July/Aug 2010. She attempted to input the actual emissions data since 1988 and compare the resulting forecasts with actual temperatures. Hargreaves has a PhD in astrophysics from Cambridge.

The result: “efforts to reproduce the original model runs have not yet been successful.”

Gated: http://onlinelibrary.wiley.com/doi/10.1002/wcc.58/abstract

Ungated copy: http://www.jamstec.go.jp/frsgc/research/d3/jules/2010%20Hargreaves%20WIREs%20Clim%20Chang.pdf

The final irony: given the decades of work to keep the archives of methods and data about climate change in the right hands, what might have been a definitive test is ruined by a failure to archive.

“She attempted to input the actual emissions data since 1988 and compare the resulting forecasts with actual temperatures.”

Really? Where? I can’t see anything in the text to indicate that she did that, and I think it is very unlikely. What she did do was to analyse the skill of Hansen’s results using Bayesian-style methods.

It’s true that the sentence quoted about “efforts to reproduce the original model runs” suggests that somebody might have tried, but no reference is cited. In fact, the GISS Model II code used at the time is archived and available to download and use (there has even been a support forum, although the link is now dead). It is not known whether this is the version that Hansen used for this paper; the computations went on over years. Some Scenario A results are from 1983.

Nick,

(1) Hargreaves explicitly says that she wanted to run the code to consider other variables and examine the various outputs of the model.

(2) Got to love climate science! Rather than run the model and produce hard results, we get advocates for policy action saying that it’s easy to run. They prefer to talk big about the model rather than re-run it (or another contemporaneous model) and get definitive results.

Thirty years of these games have produced little public support for major policy action. Perhaps 30 years more of this will work.

But, as they say in AA, “Insanity is …”

Nick Stokes: Really? Where? I can’t see anything in the text to indicate that she did that,

I agree with you.

There are two issues here, as usual. Since the paper provides algebraic formulae for the forcings from the different greenhouse gases, it would be straightforward to input the observed emissions data (this is what Gavin Schmidt did at Real Climate):

http://www.realclimate.org/images/anthro_h88_eff.png
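Gavin Schmidt’s calculation is not reproduced here, but the general idea of feeding concentrations into an algebraic forcing formula can be sketched in a few lines of Python. This sketch does not use Hansen’s 1988 Appendix B formulas; it swaps in the widely used simplified CO2 expression ΔF = 5.35 ln(C/C0) W/m² (Myhre et al., 1998), applied to the 2017 CO2 values quoted in the post (Scenario A 410 ppm, B 403 ppm, C 368 ppm; Mauna Loa 407 ppm). It covers CO2 only; the other trace gases are left out.

```python
import math

# Simplified CO2 forcing coefficient (Myhre et al. 1998), W/m^2.
# This is NOT the formula Hansen used in 1988; it is a stand-in for illustration.
ALPHA_W_M2 = 5.35


def co2_forcing(c_ppm, c0_ppm):
    """Radiative forcing (W/m^2) of CO2 concentration c relative to reference c0."""
    return ALPHA_W_M2 * math.log(c_ppm / c0_ppm)


# 2017 CO2 concentrations as quoted in the post (ppm).
projected_2017 = {"A": 410.0, "B": 403.0, "C": 368.0}
observed_2017 = 407.0  # Mauna Loa 2017 value, as quoted in the post

for scenario, c in projected_2017.items():
    # Positive means the scenario's CO2 forcing exceeds that implied by observed CO2.
    excess = co2_forcing(c, observed_2017)
    print(f"Scenario {scenario}: {excess:+.3f} W/m^2 relative to observed CO2")
```

With these inputs the CO2-only differences come out at roughly +0.04 (A), -0.05 (B) and -0.54 (C) W/m² relative to the observed 2017 concentration.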

The second issue is the more detailed geographic distribution of the warming, for which you would have to get the model code to run. Anybunny who has ever tried to do so knows that this is a real time burner.

Third, Julia is on Twitter. Ask her.

Re: “(2) What happened to the peer-reviewed literature? If this was a milestone 25 year climate prediction, we should have seen papers about it in Nature or Science. When will we see papers about the 30 year history?”

You never see relevant papers, because you willfully ignore them. I cited some literature on this for you no less than three times:

https://twitter.com/AtomsksSanakan/status/1010692624855044096

https://twitter.com/AtomsksSanakan/status/1010693846475436032

https://twitter.com/AtomsksSanakan/status/1010692193651187712

You simply ignore the literature, allowing you to repeat your misleading distortions here.

Re: “The result: “efforts to reproduce the original model runs have not yet been successful.””

You’re quote-mining again, even though you’ve been previously corrected on this. Stop.

https://twitter.com/AtomsksSanakan/status/1010697244264271872

Atomsk’s Sanakan: That looks like a quote-mine.

What exactly is your objection to a “quote mine”? What was written was intended by the author to be taken seriously, and most likely intended to be true. Unless the meaning of the quote has been distorted by removing it from its context (not a problem with this quote), the quote should not be ignored just because it is, say, uncomfortable, or not in line with someone’s “pursuit of happiness”.

Re: “What exactly is your objection to a “quote mine”?”

Do you know what a “quote-mine” is? Because your question suggests that you don’t. The following page will give you a layman’s-level introduction:

https://en.wikipedia.org/wiki/Quoting_out_of_context

With that in place, it should be blatantly obvious (to anyone who’s intellectually honest) why I object to quote-mines.

Atomsk’s Sanakan: Re: “The result: “efforts to reproduce the original model runs have not yet been successful.””

You’re quote-mining again, even though you’ve been previously corrected on this. Stop.

OK, so how did removing the context change the meaning? Efforts to reproduce the model runs have been successful?

Re: “OK, so how did removing the context change the meaning?”

I explained this already. Please pay attention.

Once again:

https://twitter.com/AtomsksSanakan/status/1010697244264271872?ref_src=twsrc%5Etfw%7Ctwcamp%5Etweetembed%7Ctwterm%5E1010697244264271872&ref_url=https%3A%2F%2Fjudithcurry.com%2F2018%2F07%2F03%2Fthe-hansen-forecasts-30-years-later%2F

Atomsk’s Sanakan: Once again:

That took me to twitter (I guess, I don’t have an account), and Hargreaves’ paper, which I have already read.

Did someone reproduce the original model runs?

“Did someone reproduce the original model runs?”

That is the problem with quote mining. We actually have no facts. There are no references. It is a passing comment in an unpublished paper. The quote suggests only that it hasn’t been done successfully (to JH’s knowledge); it is quite consistent with the proposition that it hasn’t been done at all. Or if it has, JH hasn’t heard of it.

Nick Stokes: It is a passing comment in an unpublished paper. The quote suggests only that it hasn’t been done successfully (to JH’s knowledge); it is quite consistent with the proposition that it hasn’t been done at all. Or if it has, JH hasn’t heard of it.

Has it been done? It would be hard to provide a full reference list of all the times it has not been done.

Granting the soundness of Hausfather’s comments, would it not be a good idea to run the model now with reference to diverse scenarios over the next 30 years?

You and I could compare its accuracy to the accuracy of Javier’s “conservative” forecast, and to other forecasts.

“would it not be a good idea to run the model now with reference to diverse scenarios over the next 30 years”

I guess we would find that interesting. Somebody would have to commit a lot of time and effort to it.

Nick Stokes: I guess we would find that interesting.

That might be the closest we come to agreement.

I would donate my 1988 computer, but my wife threw it away:

https://i.imgur.com/3FvXJ9F.png

In other words, a ridiculous waste of time.



Ross McKitrick,

Thank you for this interesting post. It is short, succinct and clear.

The last sentence is worth restating:

We need more posts like this. And we need more scientists, economists and policy advisors who are objective and prepared to challenge the CCC (catastrophic climate change) consensus.

All of the science is based on utilising the Stefan-Boltzmann equation to calculate temperatures from various algebraic manipulations of flux values and so-called radiative balance.

These “facts” are turned into code for computer models.

In this article I wrote, I claim that the algebra used to calculate temperatures by algebraically summing discrete fluxes is mathematically wrong.

If even the simplest model is mathematically incorrect the rest collapses.

Someone tell me why I am wrong.

https://www.dropbox.com/s/mko3w38vqouozpb/Simple%20Model%20of%20greenhouse%20mathematics.docx?dl=0

“The central idea in the papers of Clausius and Thomson was an exclusion principle: ‘Not all processes which are possible according to the law of the conservation of energy can be realized in nature.’”

“These “facts” are turned into code for computer models.”

GCMs apply radiative transfer equations locally, not to spatial averages over regions.
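Why applying the equations locally matters can be illustrated with the Stefan-Boltzmann relation F = σT⁴ itself. Because T = (F/σ)^(1/4) is a concave function of F, the temperature obtained by inverting a spatially averaged flux is not the average of the temperatures obtained by inverting each local flux. A minimal Python sketch with two hypothetical regions of equal area (the flux values are illustrative only, not model output, and this is not how a GCM is actually structured):

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def sb_temperature(flux_w_m2):
    """Blackbody temperature (K) that emits the given flux: T = (F / sigma)^(1/4)."""
    return (flux_w_m2 / SIGMA) ** 0.25


# Two hypothetical regions of equal area with different emitted fluxes (W/m^2).
local_fluxes = [150.0, 330.0]

# Apply the relation locally, then average the resulting temperatures.
mean_of_local_T = sum(sb_temperature(f) for f in local_fluxes) / len(local_fluxes)

# Average the fluxes first, then apply the relation once.
T_of_mean_flux = sb_temperature(sum(local_fluxes) / len(local_fluxes))

# By Jensen's inequality (T is concave in F), mean_of_local_T <= T_of_mean_flux.
print(f"mean of local temperatures: {mean_of_local_T:.1f} K")
print(f"temperature of mean flux:   {T_of_mean_flux:.1f} K")
```

With these hypothetical fluxes the two routes differ by several kelvin (roughly 251 K versus 255 K), which is why a spatially averaged flux cannot simply be converted back into an average temperature.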

His 1981 Science paper produced a better forecast and had an equilibrium sensitivity nearer 3 C per doubling which is more in line with today’s models than his 1988 model which ran hotter.
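The “per doubling” figure works because equilibrium warming is usually taken to scale with the logarithm of the CO2 ratio. A minimal Python sketch of that relation, assuming a purely logarithmic CO2 response and ignoring other forcings and ocean lag (so it gives equilibrium, not transient, warming); the 280 ppm baseline and the second sensitivity value are hypothetical choices for illustration, while 407 ppm is the 2017 Mauna Loa value quoted in the post:

```python
import math


def equilibrium_warming(c_ppm, c0_ppm, sensitivity_per_doubling):
    """Equilibrium warming (C), assuming warming scales with log2 of the CO2 ratio."""
    return sensitivity_per_doubling * math.log2(c_ppm / c0_ppm)


c0 = 280.0  # assumed pre-industrial baseline, ppm (hypothetical choice)
c = 407.0   # 2017 Mauna Loa value quoted in the post, ppm

# Same CO2 increase under ~3 C per doubling and a hypothetical "hotter" model.
for s in (3.0, 4.0):
    print(f"S = {s} C/doubling -> {equilibrium_warming(c, c0, s):.2f} C at equilibrium")
```

The point of the comparison is only that, for the same concentration path, a model with higher sensitivity projects proportionally more equilibrium warming.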

Jim D: His 1981 Science paper produced a better forecast and had an equilibrium sensitivity nearer 3 C per doubling which is more in line with today’s models than his 1988 model which ran hotter.

It is a shame that for policy advocacy purposes he went beyond what he had shown in the peer-reviewed literature. And it is a shame that he went along with the trick of making sure the hearing room was hot instead of air-conditioned.