The title of this post is taken from my AGU Fall Meeting poster presentation on the afternoon of Tuesday Dec. 4 (tomorrow).

You can view the poster from the comfort (?) of your terminal at where you can click on the View-ePoster tab.

For full transparency of the number crunching, the poster links to the Excel spreadsheet from which the graphs were extracted, allowing the reader equipped with Excel (2000 or later) to audit all graphs directly and to experiment with alternatives, some of which I’ve provided for with sliders. (Microsoft’s free viewer allows all this except experimenting, but I would expect Excel 2000 to be pretty cheap these days. I had high hopes for OpenOffice’s Calc, but it turned out to be a nonstarter for non-toy spreadsheets.) Please let me know of any glitches you encounter. Only macros signed by Microsoft are used, so Excel 2007 onwards should be happy, though I don’t believe 2000 knows how to read macro signatures and hence lives in a perpetual state of paranoia concerning viruses.

Global warming of some kind is clearly visible in HadCRUT3 (Figure 1, resistor-color-coded red in the poster) for the three decades 1970-2000. However, the three decades 1910-1940 show a similar rate of global warming. This can’t all be due to CO2, since the emissions data from the Carbon Dioxide Information Analysis Center (CDIAC) show that human-emitted CO2 was rising nowhere near as fast then as during 1970-2000. Both the population and its per-capita technology increased enormously in the intervening 60 years, with WW2 technology capable of obliterating cities with conventional and nuclear weapons that WW1 generals could only dream of, and with WW3 postponed as sheer MAD-ness.

Figure 1

It would seem, therefore, that the rise in temperature since 1850 is a lot more complex than can be explained by our rising CO2 emissions.

My hypothesis is that there is less to HadCRUT3 than meets the eye. I hypothesize the following.

1. I collect all the so-called multidecadal ocean oscillations into one phenomenon I call a quasisawtooth, namely a sawtooth lacking its first harmonic or fundamental. Sawtooth waves occur naturally as the result of a sudden perturbation away from equilibrium followed by a slow return to equilibrium. The several 100,000-year deglaciation cycles of the late Quaternary are one example; this might be another, albeit with its harmonics filtered differently due to whatever lies between the perturbations and our perception of them (speculated on at the right of the poster, where I forgot to point out that the effect is seen in the oceans because the crust beneath them is only 20% as thick as under the continents, and almost nothing along the mid-Atlantic ridge). I remove this from the data (Figure 1) by subtracting it, giving the orange curve in Figure 2 labeled DATA – SAW.
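The quasisawtooth of step 1 can be illustrated with a minimal sketch, assuming it is built from a sine series: the Fourier series of a sawtooth with the k = 1 term dropped and truncated after a few harmonics. The function name, the 150-year period, the five harmonics, and the amplitude are hypothetical placeholders, not the values fitted in the spreadsheet, and `hadcrut3_annual` stands for an annual series that is not defined here.

```python
import numpy as np


def quasisawtooth(t, period, n_harmonics=5, amplitude=1.0, phase=0.0):
    """Sawtooth Fourier series with its fundamental (k = 1) removed.

    An ideal sawtooth is, up to sign, scale, and offset, sum_{k>=1} sin(k*w*t)/k;
    here the sum starts at k = 2 and stops at n_harmonics. All parameters are
    illustrative placeholders, not fitted values.
    """
    t = np.asarray(t, dtype=float)
    w = 2 * np.pi / period                   # angular frequency of the missing fundamental
    k = np.arange(2, n_harmonics + 1)        # harmonic numbers 2..n_harmonics
    # One row per time sample, one column per harmonic, each weighted by 1/k.
    terms = np.sin(np.outer(w * (t - phase), k)) / k
    return amplitude * terms.sum(axis=1)


# Hypothetical usage: build a quasisawtooth on annual dates.
years = np.arange(1850, 2011)
saw = quasisawtooth(years, period=150.0, amplitude=0.1)
# data_minus_saw = hadcrut3_annual - saw   # hadcrut3_annual is assumed, not defined here
```

Because the fundamental is absent, over any whole number of periods the result is orthogonal to both the sine and the cosine at the full period, which is what distinguishes a quasisawtooth from an ordinary sawtooth.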

Figure 2

2. I further hypothesize that all remaining natural fluctuations in modern climate have as their slowest component the 21-year Hale or magnetic cycle. I filter out this and all higher frequencies with a low-pass filter designed to aggressively block such frequencies. Its frequency response is given as the curve F3 in Figure 5.

Figure 5

F3 is constructed as the convolution of three box or moving-average filters of respective widths 21, 17, and 13 years. The first gives F1, which by itself takes out both the Hale cycle and the 11-year solar (TSI) cycle. The second then gives F2 by bearing up at the one-third point of the first side lobe, while the third pushes down on the two-thirds point to give F3. (This filter can be constructed at woodfortrees.org, as I noted in my previous post here early in 2012.)
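A minimal sketch of the F3 construction follows, assuming annual samples so that the widths 21, 17, and 13 are tap counts (with monthly data they would be 12 times larger). The helper names are hypothetical and this is not the spreadsheet’s implementation; it builds the cascade and evaluates its frequency response, so the stop-band leakage stated in the next paragraph can be checked numerically.

```python
import numpy as np


def box(width):
    """Moving-average (box) kernel of `width` samples whose weights sum to 1."""
    return np.ones(width) / width


def cascade_kernel(widths=(21, 17, 13)):
    """Convolve box kernels of the given widths (in samples) into one kernel."""
    kernel = np.array([1.0])
    for w in widths:
        kernel = np.convolve(kernel, box(w))
    return kernel


def gain(kernel, freqs):
    """Frequency response of a symmetric kernel at `freqs` (cycles per sample).

    Indexing taps from the kernel's centre makes the sine terms cancel, so the
    response is real and its sign shows which side lobes are inverted.
    """
    n = np.arange(len(kernel)) - (len(kernel) - 1) / 2
    return np.cos(2 * np.pi * np.outer(freqs, n)) @ kernel


f3 = cascade_kernel()                        # 21 + 17 + 13 - 2 = 49 taps
periods = np.linspace(2.0, 21.0, 20000)      # stop band, in years
stopband_peak = np.abs(gain(f3, 1.0 / periods)).max()
print(f"largest stop-band gain for periods of 2-21 years: {stopband_peak:.4f}")
```

The sign of the response is kept deliberately: negative values mark side lobes in which the small surviving fraction is phase-inverted.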

The effect is to block essentially all frequencies with shorter periods than 22 years. At most 0.4% of any such frequency gets through. What remains is the green curve in Figure 2 labeled F3(DATA – SAW). This is clearly global warming, whatever the cause; the poster calls it Observed Global Warming, color-coded green.

These two operations on HadCRUT3 take place on the left or experimental side of the poster, which deals with observation (in this case HadCRUT3) and its analysis (in this case by subtracting low frequencies and filtering high frequencies).

The right or theory side of the poster deals with the rationalization of observation, the half of science that goes beyond the mere reporting of experience by hypothesizing explanations for it. Figure 3, color-coded blue, graphs the formula immediately below it, both before (in orange) and after (in blue) applying F3. (It can be seen that the filter makes essentially no difference except for a one-decade end-effect artifact where the curve is changing rapidly. In that context the (literally) side point is made that all odd decades since 1870 have trended positively while all even ones have trended more weakly and often negatively, verifiable at Wood For Trees.)

Figure 3

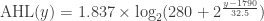

The formula is based on known ideas due to Arrhenius in 1896 and to Hofmann in 2009 (the latter being that the portion of atmospheric CO2 above the preindustrial level is growing exponentially), with the added twist that the oceanic heat sink delays the impact of radiative forcing variations on HadCRUT3 by 15 years, much as adding a fanless heatsink delays the overheating of a CPU; this is what I refer to as the Hansen delay. I call this the Arrhenius-Hofmann-Hansen or AHH law.
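The formula itself appears only in the Figure 3 image, so what follows is a generic sketch of the three ingredients just named, not the poster’s formula: a Hofmann-style CO2 curve (a fixed preindustrial level plus an exponentially growing excess), Arrhenius’s logarithmic dependence of temperature on CO2, and a fixed delay. The function and parameter names are hypothetical and any values would have to be fitted; only the 15-year delay comes from the text.

```python
import numpy as np


def hofmann_co2(year, preindustrial, t0, doubling_years):
    """Preindustrial CO2 plus an excess that doubles every `doubling_years`;
    the excess equals one concentration unit (e.g. 1 ppmv) at year t0."""
    year = np.asarray(year, dtype=float)
    return preindustrial + 2.0 ** ((year - t0) / doubling_years)


def ahh_warming(year, sensitivity, preindustrial, t0, doubling_years, delay_years=15.0):
    """Arrhenius log response to Hofmann CO2, seen `delay_years` later.

    `sensitivity` is the warming (K) per doubling of CO2 over preindustrial.
    """
    year = np.asarray(year, dtype=float)
    co2 = hofmann_co2(year - delay_years, preindustrial, t0, doubling_years)
    return sensitivity * np.log2(co2 / preindustrial)
```

The delay simply shifts the whole curve later by `delay_years`, and the logarithm means that each doubling of CO2 above the preindustrial level adds the same `sensitivity`.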

The rest of the poster, color-coded violet (purple, whatever), deals with the similarity of this curve to the green curve in Figure 2: they are equal to within millikelvins. For the period up to 1950 the standard deviation of their difference is half a millikelvin. After 1950 there are two bumps that need explaining; the best I could think of was brown-cloud pollution from uncontrolled emissions of western civilization abating in the 1970s and then resuming with the industrialization of nonwestern civilization, but there may well be other explanations. Whatever the explanation, however, the main point is that the multidecadal residual (MRES) is inconsequential in the context of global warming.

The hypothesis, then, is that multidecadal climate has only two significant components: the sawtooth, whatever its origins, and warming that can be accounted for 99.98% by the AHH law, as measured by the R² of its fit to observed global warming (and this could be brought even closer to 1 with a good story for MRES).
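For concreteness, a minimal sketch of the two fit statistics quoted above, assuming R² is the usual coefficient of determination (one minus the ratio of residual to total sum of squares) and that both curves are sampled on the same dates. The function names are hypothetical; `observed` would be the green curve of Figure 2 and `model` the AHH curve.

```python
import numpy as np


def r_squared(observed, model):
    """Coefficient of determination of `model` as a description of `observed`."""
    observed = np.asarray(observed, dtype=float)
    residual = observed - np.asarray(model, dtype=float)
    ss_res = np.sum(residual ** 2)
    ss_tot = np.sum((observed - observed.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def residual_sd(observed, model):
    """Standard deviation of the difference between the two curves (e.g. in K)."""
    return np.std(np.asarray(observed, dtype=float) - np.asarray(model, dtype=float))
```

On this definition an R² of 0.9998 means the residual sum of squares is 0.02% of the total sum of squares of the observed curve about its mean.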

Because filter F3 rises slowly on the left of its cutoff, there is the worry that some multidecadal phenomenon was overlooked by sneaking into the Hale octave. Something like this seems to have happened on the high-frequency side of the SOL band, perhaps some ENSO noise from DEC (decadal band) getting into the TSI band. However, the HALE band looks much cleaner than the TSI band, suggesting that nothing entered from the MUL (multidecadal) side. And since MRES as shown in Figure 10 is essentially flat by comparison with SAW and AGW, it would appear that those multidecadal variations not accounted for here are either too small to matter or have been inadvertently lumped in with (i.e. are inseparable from) one of SAW, AGW, or HALE.

Figure 10

With enough parameters one can make any two curves equal to within any desired precision. The judgement to be made here is whether the nine parameters used here have any chance of achieving a comparably accurate fit on random data in place of HadCRUT3. This was an objection raised to Mann’s methodology, and I would be interested to see if it applies here.

After the comments responding to this post, this might not be the poster I want any more, but meanwhile you go to the conference with the poster you have, not the poster you want. :)

In any event this poster is offered as a preliminary report on work still in progress. One reason for advertising it here at an early stage is that the denizens of Climate Etc. seem well motivated to poke holes in theories of global warming, which I view as a positive benefit of skepticism, as distinguished from flat denial. There seems to be a lot of the latter here too but I haven’t found it as useful.

JC comments: This is an invited guest post. I think that this is an intriguing analysis, but I have not looked at this in any detail. This is a technical post, please keep your comments on topic.

I am at the AGU this week, I will have a post on the meeting later this week.

Confucius say:

Beware results of frequency analysis if most of relevant information occurs at one end of a non-periodic time-series.

If we really understand it all, then even if the average global climate does not rise as fast as projected, and despite the fact that it is godawful cold outside, CO2-producing humanity can still be seen as the destroyer of the Earth because it should be even colder.

By analogy what Vaughan Pratt is trying so hard to tell us non-believers is, Look, look, the witch didn’t float so she must’a not been a witch. Next!

+100

-100

When you’re incapable of commenting on content, just spit in the writers’ general direction? Thanks for contributing and giving climate skeptics a good name.

Then, you do not find “non-believers” to be objectionable ad hom? Good.

Nice job Vaughan. I still think perfect matches in an imperfect world are scary though. Especially since it can be hard to separate cause and effect.

https://lh3.googleusercontent.com/-7yQZf4cRGG0/UL4YWvB6AnI/AAAAAAAAF3Q/M50BKvLQWzk/s720/TSI%252015%2520year%2520lag%2520with%2520sh.png

Then I am a bit of a thermo dinosaur playing with psych charts and such :)

One question (not the only one, but the most obvious) that comes to my mind is the influence of volcanic eruptions. Their effect is strong enough to affect the outcome even after the removal of high-frequency phenomena, but lumping that effect with the others in the sawtooth sounds more like overfitting than something that could be justified by other means.

Up to a point Lord Cooper. Have you ever seen a global temperature response from a volcano? They don’t actually exist, nor are they present in the reconstructions.

The observation is made that cycles of less than 20 years are filtered out, so presumably volcanoes are treated as noise and suppressed.

I took Pekka’s point to be about sustained volcanism, which would need to be sustained for at least 25 years to get through the F3 filter. If seismic events at the crust-mantle boundary are responsible as I suggested, sustained volcanism might be so well correlated with this (whether positively or negatively does not matter) that they may be inseparable.

I had intended, but forgot or ran out of space, to make the point that one cannot choose between well-correlated phenomena based solely on observation. Volcanism vs. my crust-mantle/mantle-core boundary explanations is such a situation. Unlike the latter, the former has been the subject of much discussion, and to save space I focused on new ideas at the expense of old. A longer writeup would need to do justice to both.

What I have in mind can be seen from this Fig 2 from the paper of Lean and Rind.

There we see that volcanic influence has led to cooling of up to 0.25 K, with a decay that has taken a few years. Averaging over periods of up to 22 years still leaves a signal of a few tens of mK. As the effect is purely cooling, without a compensating warming phase, it is not averaged out but influences the analysis, and reaching an accuracy in the mK range in the presence of such a random external influence seems to indicate overfitting.
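The arithmetic of this point can be checked with a toy calculation. Assuming, purely for illustration, a cooling spike of 0.25 K (the figure quoted above) decaying exponentially with a 2-year e-folding time (a hypothetical stand-in for “a few years”), and annual sampling, a 21-year running mean leaves a dip of about 30 mK, because a one-signed pulse has nothing to cancel against.

```python
import numpy as np

years = np.arange(60)                         # annual samples after the eruption
pulse = -0.25 * np.exp(-years / 2.0)          # K; peak and 2-year decay are assumptions
window = np.ones(21) / 21                     # 21-year moving average
smoothed = np.convolve(pulse, window, mode="valid")
print(f"deepest 21-year average: {smoothed.min() * 1000:.0f} mK")
```

The full F3 cascade spreads the pulse further and so gives a somewhat shallower dip, but since every box has positive weights the result stays one-signed.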

Vaughan,

Taking into account what you say in some of your comments about your goals, my comment is not directly relevant to your work as stated.

Going beyond that, this detail, along with many others mentioned in other comments or not mentioned at all, leads one to conclude that reaching the level of accuracy you have reached is probably not very significant. It may indicate that allowing as much freedom in the choice of model as you have used, and as many explicit parameters as you have, is likely to allow for the observed accuracy as long as the data is reasonably well behaving.

A separate issue is that one may compare the results you obtain with your approach with those of Lean and Rind, who try to find out how much of the variability can be explained by solar and volcanic forcing and “explained” by ENSO. I put the latter “explained” in quotes because ENSO is not really an explanation but another index that tells about the same Earth system as the temperature data. Solar irradiation and volcanic activity are external to the system of atmosphere and oceans, while ENSO is internal.

Pekka, please show me a thermometer record that shows anything like the temperature change shown in that figure as a result of volcanic aerosols.

In the sciences one tests a hypothesis against the data. If the hypothetical result is different from the data, that is, from reality, start over.

You are completely unable to show a temperature record that shows the model line shape in Figure 2b, because it’s not true.

@Pekka: Going beyond that, this detail, along with many others mentioned in other comments or not mentioned at all, leads one to conclude that reaching the level of accuracy you have reached is probably not very significant. It may indicate that allowing as much freedom in the choice of model as you have used, and as many explicit parameters as you have, is likely to allow for the observed accuracy as long as the data is reasonably well behaving.

That’s certainly a possibility that worries me, Pekka. However I would expect that the 9 parameters of my SAW+AGW model are not enough to reach the observed accuracy for more than 1% of other randomly constructed “reasonably well behaving data,” and likely much less.

One way to test this would be to perturb HadCRUT3 slightly by adding a dozen or so sine waves, each with randomly chosen phase, small amplitude, and frequency below that of the Hale cycle (since F3 effectively kills all higher frequencies). Do this, say, a thousand times, and for each note the resulting R². If 99% of the R² values were significantly less than 99.98%, then consider HadCRUT3 “special” with respect to this model, in the sense that it would seem not to contain the sort of noise being added in this test.
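The proposed test can be sketched as follows. Everything here is an assumption made for illustration: `fit_r2` is a hypothetical stand-in for refitting the nine-parameter SAW+AGW model (here just a cubic polynomial fit so that the sketch runs), the series is synthetic rather than HadCRUT3, and the amplitude range and frequency cap are placeholders. Only the recipe follows the text: add a dozen sines with random phases, small amplitudes, and frequencies below the Hale cycle, refit, and record R².

```python
import numpy as np


def perturb(series, years, rng, n_sines=12, max_amplitude=0.02, max_freq=1 / 22):
    """Add n_sines sinusoids with random phase, small random amplitude, and
    frequency below max_freq (cycles/year, i.e. slower than the Hale cycle)."""
    freqs = rng.uniform(0.0, max_freq, n_sines)
    amps = rng.uniform(0.0, max_amplitude, n_sines)
    phases = rng.uniform(0.0, 2 * np.pi, n_sines)
    waves = amps * np.sin(2 * np.pi * np.outer(years, freqs) + phases)
    return series + waves.sum(axis=1)


def fit_r2(years, series, degree=3):
    """Hypothetical stand-in for the SAW+AGW fit: R^2 of a polynomial fit."""
    x = (years - years.mean()) / years.std()        # rescale for numerical stability
    fitted = np.polyval(np.polyfit(x, series, degree), x)
    ss_res = np.sum((series - fitted) ** 2)
    ss_tot = np.sum((series - series.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def perturbation_test(years, series, n_trials=1000, seed=0):
    """R^2 of the refitted model on each of n_trials perturbed copies."""
    rng = np.random.default_rng(seed)
    return np.array([fit_r2(years, perturb(series, years, rng)) for _ in range(n_trials)])


years = np.arange(1850, 2011, dtype=float)
synthetic = 0.3 * np.exp((years - 2010) / 40.0)     # synthetic smooth curve, not HadCRUT3
r2 = perturbation_test(years, synthetic, n_trials=200)
print(f"fraction of trials with R^2 below 0.9998: {np.mean(r2 < 0.9998):.2f}")
```

In the real test the refit would adjust all nine SAW and AGW parameters, which is far more expensive than a polynomial fit, and the resulting distribution would be compared against the R² obtained on the unperturbed data.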

Would this kind of test satisfy you?

Vaughan,

The problem in testing the significance of this kind of finding is in estimating the “effective degrees of freedom” involved in the selection of the form of the model. I cannot know exactly what you have done, but probably you have looked at the data and pondered what kind of parameterization might work. You may also have tested several alternatives and finally picked the one that performed best. It’s impossible to evaluate well the role your selection process plays in the success.

Testing with perturbed data would require an equal amount of pondering and search for the best performing model to even approach comparability.

The literature on the statistical technical analysis used by people who hope to make money in the stock market with such tools is quite revealing. A number of papers have been written on testing the predictive power of models based on historical time series. Some of these papers list known caveats that lead to spurious positive results, and methods have been proposed to get rid of such false positives. The problem is that such methods are likely to get rid of some valid positives as well.

The most difficult problem is always the human role in putting in information that’s not counted among the free parameters fitted to the history. This problem applies to all simple fits to historical data, be they by you, Scafetta, or anyone else. It also applies to the climate modelers, who have developed their large models knowing at least qualitatively how the numerous choices they have made and continue to make affect the outcome. For this reason they can never state with reasonable precision the statistical significance of the agreement found in hindcasting. They’ll never know how much implicit tuning their models contain.

Pekka, I agree wholeheartedly with your comments. As I think you’ve gathered, my main goal is not to predict climate, or even explain it, but only to describe the multidecadal part of HadCRUT3. Explanations enter only as a motivation for descriptions that are at least consistent with the known physics and that are analytic by virtue of belonging to the class of functions containing the constant functions and closed under linear combination, exponentials, logs, and sines. The multidecadal part seems to lend itself to such simple descriptions.

There is much more to say about this, particularly as regards the role of F3 in reducing dimension, but after spending a day writing some 25 paragraphs about filtering technology and dimension reduction I realized I should instead organize it as a post and therefore put it to one side for the time being so I can respond more immediately to other comments.

Not to worry Pekka. Volcanic cooling stays up there and never descends into the troposphere. Such volcanic cooling as has been claimed is nothing more than misidentified La Nina cooling incidents whose timing accidentally followed an eruption. Best known is the claimed Pinatubo cooling that has nothing to do with Pinatubo. That is not surprising if you consider that the entire temperature curve is a concatenation of side-by-side El Nino peaks and La Nina valleys. After all his filtering Vaughan Pratt still could not get rid of them and says that “…multidecadal climate has only two significant components: the sawtooth, whatever its origins, and warming…” He does not understand the sawtooth and is wrong about warming. His analysis is brilliant but signifies nothing.

@Arrak: After all his filtering Vaughan Pratt still could not get rid of them

Since “them” (the southern oscillations) have a period in the vicinity of 7 years, I can assure you that the 21-year F3 filter got rid of them completely. Saying it didn’t is simply contradicting its frequency response with no justification.
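The frequency-response argument can be checked directly by passing pure sinusoids with periods of 5, 6, and 7 years (covering both estimates in this exchange) through the cascade of 21-, 17-, and 13-year box filters. A minimal sketch, assuming annual samples and unit-amplitude inputs; the helper name is hypothetical.

```python
import numpy as np


def f3_filter(x, widths=(21, 17, 13)):
    """Apply the cascade of box filters, keeping only fully overlapped samples."""
    for w in widths:
        x = np.convolve(x, np.ones(w) / w, mode="valid")
    return x


years = np.arange(300)
for period in (5, 6, 7):
    out = f3_filter(np.sin(2 * np.pi * years / period))
    print(f"{period}-year cycle: largest surviving amplitude {np.abs(out).max():.1e}")
```

The 7-year case vanishes exactly (up to rounding), since three full cycles fit in the 21-year box; the 5- and 6-year cases leave well under 0.1% of the input amplitude.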

Vaughan – five or six years is more likely from observation. I don’t doubt that you can make them invisible, but they have been a real feature of climate since the Isthmus of Panama rose from the sea. You need their precise locations to compare with dates of volcanic eruptions. Müller shows four independent temperature curves (NASA, Met Office, NOAA and Japanese) to demonstrate warming since 1880. There are errors, but far more striking is the precise correspondence of El Nino peaks among them going back to 1880. You could probably place Tambora on one of his longer-term curves and get the lowdown on the year without summer.

Vaughan Pratt

Although I have to admit that I am not really sure I understand all you are saying, I have some comments or questions.

As I see it you take a temperature record with strong multi-decadal warming and cooling cycles plus lots of annual ups and downs (sawtooths) and smooth it by filtering out various assumed short term or long term cycles and end up with a smooth curve that shows exponential warming, presumably from AGW.

This is essentially handling everything except GH forcing as background noise, which can be filtered out to end up with the real residual signal, as I understand it.

Before F3 smoothing you remove all the multidecadal ocean oscillations. This apparently eliminates the strong early 20th century warming cycle 1910-1940 (which is statistically indistinguishable from the warming cycle of the late 20th century, 1970-2000). After F3 smoothing, the early 20th century warming cycle is completely gone. How realistic is this?

You indicate that you have built in a 15-year time lag, calling this the Hansen effect. Doesn’t Hansen posit a much longer time lag in his “pipeline” postulation?

You take out the TSI impact of the 11-year solar cycle. Does this smoothing also consider any other solar mechanisms (e.g. Svensmark), or does this even matter to the analysis? How is the unusually high level of 20th century solar activity handled?

Is the 1940-1970 MRES smoothing for increased aerosols a convenient fit or are there empirical data to support it? Same question goes for the 1970-1980 reduction in MRES and the increase after 1990.

A final question: was the intent of this study to end up with the underlying exponential warming curve or did that just happen after all the noise was filtered out?

Sorry if these questions are too simple – but I am just trying to understand what you have written.

Max

This seems pretty straight-forward.

HadCRUT3 – CO2 – Hale = quasisawtooth

Then publish as: HadCRUT3 – Hale – Saw = CO2

In the glacial/interglacial sawtooth pattern, the amplitude of each tooth is nearly equal. Why are the 1880 & 2000 teeth in the quasisawtooth blunted versus the 1940 “tooth”?

This does have a similar look to Foster and Rahmstorf’s statistical exercise. Perhaps there is merit in this approach. The fit seems too precise given the errors in the temp records, though. It seems difficult to remove all the dynamical processes so very accurately without some bias in your assumptions creeping in.

The fit seems too precise given the errors in the temp records though.

If a million temperature measurements each have an uncertainty (however defined) of one degree, then a parameter inferred from them will have an uncertainty of 1/sqrt(1000000) = one millikelvin.
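The arithmetic behind this is the standard error of a mean, which holds under the assumption (made explicit here, and questioned in the reply below) that the N errors are independent and unbiased with a common standard deviation σ:

```latex
\sigma_{\bar{T}} = \frac{\sigma}{\sqrt{N}}
  = \frac{1\ \mathrm{K}}{\sqrt{10^{6}}}
  = 10^{-3}\ \mathrm{K} = 1\ \mathrm{mK}
```

With correlated errors the effective N is smaller and the uncertainty correspondingly larger, and an error common to all measurements (a shared bias) is not reduced by averaging at all.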

Bias is always a problem, but I didn’t get the sense that bias was your primary complaint.

“If a million temperature measurements each have an uncertainty (however defined) of one degree, then a parameter inferred from them will have an uncertainty of 1/sqrt(1000000) = one millikelvin.”

If – and only if – they are measuring the SAME THING. Measuring the fuel efficiency of 1 million different cars does not pin down the fuel efficiency of one particular car UNLESS they are all the same make and model, driven over the same route at the same speed, etc etc. If they are not the same thing, then the only inferences we can make relate to DIRECTLY UNMEASURABLE statistical qualities of the sample – the mean, standard deviation etc etc, such as “our fleet average fuel consumption is x MPG”, or – digging further – “On average, California drivers in our fleet consume 15% more fuel per mile than Texas drivers”. While these statements may be accurate, they do not tell you about the individual cars or drivers, only about the qualities of the whole, or sub-samples thereof. They can help you to predict what changes will have the largest impact on average, but they do not tell you the impact on the individual concerned (in this case, particular drivers and/or particular cars).

So the questions we must ask are:

Is a global average temperature useful to us?

If so, how? What does it tell us that other measurements do not?

Is spatial average more useful than temporal average for particular sites / areas / regions?

“Is a global average temperature useful to us?”

It tells you most of earth is covered by oceans.

I think bias was the main question, though I appreciate the answers to Max A’s questions; they state my curiosities much more clearly. You have avoided his question about whether the fit came naturally or by design.

Having seen Vaughan’s development of this idea earlier on this forum, I think the first realization comes from seeing how CO2 has followed a simple functional form with time, and combining that function with T as a theoretical function of CO2, which gives T as a function of time. Fitting that to any kind of smoothed temperature trace shows only a few anomalous but temporary bumps. It is very persuasive that these are the main things going on at the century scale.

But it is very telling that much else is happening on decadal scales in more recent times.

@Neil Fisher: Is a global average temperature useful to us?

This is an important question. On less than a decadal scale I would say not: regional temperatures are more interesting to the region in question.

However two or three decades is enough time for regional phenomena to be assimilated into the global temperature. Hence regions can’t exhibit multidecadal phenomena because on that time scale they’ve become global.

So if you’re studying El Nino say then regional temperature is important. But if you’re studying a 75-year cycle, or a slow trend like global warming, then only global temperature matters.

That’s just my opinion, and I’m happy to be persuaded otherwise.

“This is an important question. On less than a decadal scale I would say not: regional temperatures are more interesting to the region in question.”

Ah – I gather from this reply that this is the reason for the 30 years minimum so often quoted as required for climate rather than weather. And while I certainly appreciate the logic (and yes, it does make sense!), I think that from what we can see from direct measurements (~ 150 years) and infer from proxies (up to millions of years) it is apparent there are pseudo-cycles (your saw) that extend across several orders of magnitude (perhaps best described with a fractal) – everything from day/night, through summer/winter, PDO/AMO, the approximately 80, 300 and 1500 year cycles, all the way through the 100k Milankovitch and perhaps beyond. It is difficult to believe (for me anyway) that we are aware of even the existence of some of these, let alone their magnitude and phase (where we are in terms of each cycle right now).

The point is: is the saw wave that you subtract to show the underlying trend merely bumps on the teeth of a yet longer pseudo-cycle that you have not considered (and may not even be aware of)? Teeth on teeth, as it were (hence the fractal description earlier). It would certainly make an interesting study to determine the fractal dimension of your saw and then “zoom out” to the next level and re-apply your method – it seems to me that this may even provide a better fit to the available data (including long-term proxies) than the single level you have already calculated, and certainly the change in the projections would be most interesting! It would be difficult to calculate the exact phase relationships, and so there may be more uncertainty than we might hope (and than is displayed by your work so far), meaning that several projections (with the phase of the longer cycles differing) may match the available data, but this is quite intriguing to me. Alas, this is beyond my abilities and resources to investigate, but I hope you might be intrigued enough by the concept to investigate – if you do, I would very much appreciate another post here at Judith’s blog outlining the results!

Thanks for engaging with us here BTW – so many of your colleagues have been “burnt” by blog interactions in the past, and I hope you will not be one of them. I believe that if you ignore the ad hom and other dross, you can pick up some useful “peer” review from interested laymen. I would like to think I fit that description, but I highly doubt it ;-)

Neil, I’m truly sorry I overlooked your second December 7 comment back when you made it. Thank you for your insight, as well as the intriguing idea that SAW might be just a part of a larger fractal.

However AGW makes even SAW hard to see, particularly after 1950, and I would expect it completely washes out anything much slower than SAW. With a reliable temperature record going back further than 1850 (and even 1850-1900 is a stretch according to many) one could do better.

The best candidates there would seem to be CET (Central England Temperature) for 1660-1900, the various ice core samples, and the International Tree Ring Data Bank maintained by NOAA.

Global warming would seem to have hit CET about a century earlier than HadCRUT3 because it’s so regional. While it has always reflected global temperature, Hubert Lamb’s premise, it samples only 0.01% of the Earth’s surface making it much more sensitive to industrialization in that region. The green curve here at Leif Svalgaard’s site starts out cleanly but is pretty ragged during 1900-1980, though it cleans back up after 1980, perhaps on account of more consistently applied emissions controls in that neck of the woods. (I’m using the Sun’s Hale cycle as a canary-in-the-mine because that portion of the climate spectrum seems to be unusually free of interfering natural signals compared to the rest.)

I believe that if you ignore the ad hom and other dross, you can pick up some useful “peer” review from interested laymen.

Yes, overall I’d say the response here has been a plus, at least in terms of my understanding of the issues raised.

The main downside of the “dross” is that it makes the thread much harder to follow. At some point I may try to address this by collecting the more salient criticisms, questions, and other contributions in one place.

Great questions, Max!

How realistic is this?

There are two parts to my analysis of HadCRUT3: describe, then explain.

Questions like yours about realism of a description can only concern explanations of it, not the description itself. As someone perfectly capable of doing the relevant arithmetic I stand strongly behind my description, but nowhere near as strongly behind my explanation. So to answer your question (which I take to be about explanation), not much until I get buy-in from others about whether my explanation (in terms of seismic events at the two mantle boundaries) is at all realistic.

Doesn’t Hansen posit a much longer time lag in his “pipeline” postulation?

Where? And how much longer?

You take out the TSI impact of the 11-year solar cycle. Does this smoothing also consider any other solar mechanisms (e.g. Svensmark), or does this even matter to the analysis?

The simple answer is that it doesn’t matter because the phenomenon Svensmark points to, namely the interaction between the galactic magnetic field and the Sun’s, operates on the same 21-year cycle that F3 removes.

However it’s an interesting question nonetheless. One grad student in hydrology asked me during the poster session this afternoon whether it would be ok for him to cite Svensmark’s paper in support of his analysis of cycles in Indian hydrology. I told him that the papers of Ney and Dickinson on the same subject, in 1959 and 1975 respectively, would serve that purpose much better, not only for priority but also because they did not have the axe to grind that Svensmark does.

How is the unusually high level of 20th century solar activity handled?

Numbers, please.

Is the 1940-1970 MRES smoothing for increased aerosols a convenient fit or are there empirical data to support it?

If you’re referring to the 1950-1980 “bump” in MRES, how is it “convenient?” I wish it would go away. Please play with the Excel spreadsheet so that you can see what I mean. To the question “who ordered that?” it wasn’t me.

Same question goes for the 1970-1980 reduction in MRES and the increase after 1990.

Same answer.

Was the intent of this study to end up with the underlying exponential warming curve or did that just happen after all the noise was filtered out?

Great question. My analysis was in two steps: describe, then explain.

The tendency in climate science has been to eyeball the data and proceed right away to the explanation. All along the “underlying exponential warming curve” was in the back of my mind, but it seemed to me intellectually dishonest to infer it from inadequately described data such as the 162 numbers in the raw HadCRUT3VGL time series, which was just a mess of numbers.

I addressed this concern by reducing 162 numbers to 9. Part of this was done by applying F3, which I estimate to reduce the dimension from 162 to 16.
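One crude way to put a number on such a dimension reduction, offered only as a heuristic and not necessarily how the estimate of 16 was obtained: count how many real Fourier degrees of freedom of a 162-sample annual series (a cosine and a sine at each frequency k/162, with a single term at k = 0 and at the Nyquist frequency) pass through the F3 cascade of 21-, 17-, and 13-year boxes with gain above some threshold. The 1% threshold and the helper names are assumptions. With that threshold the count comes out at 15; a lower threshold admits a few more, so this is an order-of-magnitude check only.

```python
import numpy as np


def f3_gain(freqs, widths=(21, 17, 13)):
    """Gain of the box-filter cascade at `freqs` (cycles/sample), computed as
    the product of the individual box responses sin(pi*N*f) / (N*sin(pi*f))."""
    freqs = np.asarray(freqs, dtype=float)
    g = np.ones_like(freqs)
    for n in widths:
        num = np.sin(np.pi * n * freqs)
        den = n * np.sin(np.pi * freqs)
        # At f = 0 the ratio tends to 1; avoid dividing 0 by 0.
        g *= np.divide(num, den, out=np.ones_like(freqs), where=den != 0)
    return g


def passed_dimensions(n_samples=162, threshold=0.01):
    """Count real Fourier degrees of freedom whose F3 gain exceeds threshold."""
    k = np.arange(n_samples // 2 + 1)
    passed = np.abs(f3_gain(k / n_samples)) > threshold
    # k = 0 (and k = n/2 for even n) carry one real term; other k carry two.
    dims = np.where((k == 0) | (2 * k == n_samples), 1, 2)
    return int(dims[passed].sum())


print(passed_dimensions())
```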

By putting up with a poor R² (well less than 1), one can typically lop off a few more dimensions.

In this case the dimensionality went from 16 to 9 with an R² of 0.9998.

Whereas I only play a statistician on YouTube, MattStat/MatthewRMarler is a real statistician, so I would defer to him on the question of whether 16 → 9 vs. 0.9998 was significant. What say you, Matt?

(I asked Persi Diaconis this question a couple of months ago and he inclined towards significance. Seems like an interesting question.)
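What “significant” means here is exactly the open question, but one naive yardstick is the overall F statistic of a regression. The sketch below is hypothetical in its framing: it treats the fit as an ordinary linear regression with n = 16 independent observations and p = 9 fitted parameters. The filtering, the autocorrelation in HadCRUT3, and the nonlinear parameters (period, trigger, delay) all violate those assumptions and likely inflate the statistic, so read the number as overstating the evidence rather than as a test.

```python
def r_squared(observed, fitted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(observed) / len(observed)
    ss_res = sum((o - f) ** 2 for o, f in zip(observed, fitted))
    ss_tot = sum((o - mean) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def overall_f(r2, n, p):
    """Overall F statistic with (p - 1, n - p) degrees of freedom.

    Assumes n independent observations, p parameters (intercept included)
    and a model that is linear in those parameters."""
    if not n > p > 1:
        raise ValueError("need n > p > 1")
    return (r2 / (p - 1)) / ((1.0 - r2) / (n - p))


# Hypothetical reading of "16 -> 9 with an R2 of 0.9998".
n_eff, n_params, r2 = 16, 9, 0.9998
f_stat = overall_f(r2, n_eff, n_params)
print(f"F({n_params - 1}, {n_eff - n_params}) = {f_stat:.0f}")
```

With the 0.9997 figure from the question the same formula gives roughly 2916. Either way, the number is only as meaningful as the independence assumption behind n = 16.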

Dr. Pratt, you write “The simple answer is that it doesn’t matter because the phenomenon Svensmark points to, namely the interaction between the galactic magnetic field and the Sun’s, operates on the same 21-year cycle that F3 removes.”

I have enormous difficulty with this claim, but my expertise is not sufficient to really dispute it. But I think it is wrong. As I understand things, Svensmark’s point relates to the strength of the sun’s magnetic field. This changes little over the Hale cycles. It is far more related to the sort of measurements Livingston and Penn are making on the magnetic strength of sunspots, which has been decreasing steadily ever since measurements started around 1998. I don’t think that this magnetic effect shows any sort of 22-year cycle. It is more likely associated with the 189-year cycle of planetary alignment.

But we really need someone like Leif Svalgaard to comment on this claim.
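Whatever one makes of Svensmark’s mechanism, the filtering half of the quoted claim rests on a standard property of moving averages: a box filter whose width spans exactly one period of a sinusoid averages that sinusoid to zero, and its gain on nearby periods is small. The sketch below checks this for an annual series. The 21-year box and 21-year cycle are chosen only to mirror the quoted claim; this is not a reproduction of the poster’s F3 filter.

```python
import math


def box_filter(series, width):
    """Centered moving average of odd `width`; edge values are dropped."""
    if width % 2 == 0:
        raise ValueError("use an odd width so the window is centered")
    return [sum(series[i:i + width]) / width
            for i in range(len(series) - width + 1)]


def box_gain(width, period):
    """Amplitude gain of a width-sample box filter on a sinusoid whose
    period is given in samples: |sin(pi*w/P) / (w * sin(pi/P))|."""
    return abs(math.sin(math.pi * width / period)
               / (width * math.sin(math.pi / period)))


years = range(1850, 2011)
cycle21 = [math.sin(2 * math.pi * y / 21) for y in years]
smoothed = box_filter(cycle21, 21)
print(max(abs(v) for v in smoothed))        # essentially zero (rounding only)
print(box_gain(21, 21), box_gain(21, 22))   # about 0, and about 1/21
```

The gain formula also shows a 22-year period being cut to 1/21 of its amplitude by a 21-year box, so a single box filter suppresses nearby periods strongly but not completely.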

vrpratt: All along the “underlying exponential warming curve” was in the back of my mind

This was what I meant by finding the correct filter to match someone’s expectation. And, if in fact that is the correct function, then you found the best filter to reveal it. There is a symmetry: if you know the characteristics of the noise, you can design a filter that will reveal the signal; if you know the signal, you can filter away ad lib until you have revealed the signal. If both are in doubt, a clear result is ambiguous. There are different ways to say this: instead of testing a hypothesis, one may say that you have “rescued” the hypothesis. Or, the procedure itself has a type 1 error rate of 1.0, when the nominal value is 0.05.

Or, consider the hypothesis that there is a 1000 year period, and we are nearing or in the “next” peak: (a) you can assume it’s true and filter until you have it clearly confirmed; or (b) you can assume it’s false and filter until it’s removed (which you did by focusing on the short recent time series.)

To test whether you have found something reliable, keep your full model prediction (model = “smooth” + “rough”) and compare it to future data.

MattStat, “one may say that you have “rescued” the hypothesis.”

I like that. Couldn’t you compare “rescue” attempts? Apply the same rescue to solar and compare. When you get into smoothing you are throwing away lots of information, which calls for some reasonable standard method of validation.

https://lh3.googleusercontent.com/-7yQZf4cRGG0/UL4YWvB6AnI/AAAAAAAAF3Q/M50BKvLQWzk/s720/TSI%252015%2520year%2520lag%2520with%2520sh.png

I threw in solar TSI smoothed with an 11-year moving average (11 yma) and applied the 15-year lag to it. With a little SAW I could nail solar pretty easily.
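The smoothing-plus-lag step described above can be stated precisely. A minimal sketch, assuming an annual {year: value} series and a centered window (the comment does not say whether the 11-year average was centered or trailing); the function names and the toy ramp input are illustrative only, not real TSI data.

```python
def centered_moving_average(series, window):
    """Centered moving average of an annual {year: value} dict.

    Years lacking a full window on both sides are dropped."""
    if window % 2 == 0:
        raise ValueError("window must be odd to be centered")
    half = window // 2
    out = {}
    for year in sorted(series):
        span = [series.get(y) for y in range(year - half, year + half + 1)]
        if all(v is not None for v in span):
            out[year] = sum(span) / window
    return out


def lag(series, years):
    """Report the value for year y at year y + years (a pure delay)."""
    return {y + years: v for y, v in series.items()}


# Toy input, NOT real TSI: a gentle linear ramp in W/m^2.
tsi = {y: 1360.0 + 0.01 * (y - 1900) for y in range(1900, 2001)}
tsi_11yma = centered_moving_average(tsi, 11)
tsi_lagged = lag(tsi_11yma, 15)
print(min(tsi_lagged), max(tsi_lagged))
```

Because a centered average of a linear ramp returns the ramp itself, the toy input makes it easy to confirm that the lag, not the smoothing, is what shifts the series by 15 years.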

vrpratt: Whereas I only play a statistician on YouTube, MattStat/MatthewRMarler is a real statistician, so I would defer to him on the question of whether 16 –> 9 vs. 0.9997 was significant. What say you, Matt?

It’s really hard to tell.

If you would like your modeling result to be taken seriously as a guide to future planning (I don’t mean to presume to know your motives), then keep track over the next 20 years of the squared prediction errors, their cumulative sum (CUSUM), and the root mean squared prediction error (RMSE). That will provide better information about whether you have a significant result, by any definition of significant.

I used to “lean” toward AGW, and a result like you got. Now I “lean” toward the 1000 year cycles and the idea that we are near a peak. The two ideas make sharply different forecasts for the next 20 years. I am 65, so I may not live long enough to decide which leaning was correct. But the test of the model is in the future data. If you see Dr. Diaconis soon, I would be interested in his responses to my comments. I can’t really believe they are worth his time, but who knows?
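The error bookkeeping suggested above is easy to automate. A minimal sketch, assuming forecasts and later observations arrive as paired lists; the numbers in the example are hypothetical anomalies, not HadCRUT3 values.

```python
import itertools
import math


def prediction_scores(forecast, observed):
    """Per-step squared errors, their running total (CUSUM) and the RMSE."""
    if len(forecast) != len(observed) or not forecast:
        raise ValueError("need equal-length, non-empty sequences")
    squared = [(f - o) ** 2 for f, o in zip(forecast, observed)]
    cusum = list(itertools.accumulate(squared))
    rmse = math.sqrt(cusum[-1] / len(squared))
    return squared, cusum, rmse


# Hypothetical forecasts vs. later observations, degrees C.
squared, cusum, rmse = prediction_scores([0.50, 0.52, 0.55], [0.48, 0.56, 0.55])
print(cusum, round(rmse, 4))
```

Running two competing models (say, one built on AHH and one on a millennial cycle) through the same function year by year shows directly whose errors accumulate faster.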

vrpratt and MattStat

re: 1000 year cycle

Loehle and Singer evaluated nine temperature reconstructions and found a climate cycle about 1500 years (or 1200) long that may correspond to the Pleistocene Dansgaard-Oeschger (DO) oscillations. See:

Craig Loehle and S. Fred Singer, “Holocene temperature records show millennial-scale periodicity,” Canadian Journal of Earth Sciences 47, pp. 1327–1336 (2010).

Bond event zero?

http://s1114.photobucket.com/albums/k538/Chief_Hydrologist/?action=view&current=Vance2012-AntarticaLawDomeicecoresaltcontent.jpg

http://journals.ametsoc.org/doi/abs/10.1175/JCLI-D-12-00003.1?journalCode=clim

vrpratt and MattStat

On AGW attribution, what is your NULL hypothesis?

Your filtering exercise shows an underlying accelerating upward trend. However, I also expect a natural underlying accelerating upward trend from the 1500-year cycle. For example, there is:

1) A mild global cooling from the Holocene Climatic Optimum

2) A millennial-scale oscillation of ~1500 years per Loehle & Singer above (i.e., an approximately linear rise from the Little Ice Age, or better, an accelerating natural warming since the LIA)

3) A 50-60 year multidecadal oscillation.

4) A 22-year Hale solar cycle (or conventionally the 11-year Schwabe cycle) (see WGR Alexander et al. (2007), “Linkages between solar activity, climate predictability and water resource development”).

How then do you distinguish anthropogenic contributions, from CO2 etc. from that Null Hypothesis?

5) AND account for socioeconomic impacts on the temperature data.

(See Ross McKitrick’s recent papers.)

Robustness

Have you evaluated the potential to hindcast/forecast from two portions of the data and then compare the projections against the third portion?

Have you any comments on the relative physical and statistical validity of your methods compared with those of Nicola Scafetta? Cf. “Testing an astronomically based decadal-scale empirical harmonic climate model versus the IPCC (2007) general circulation climate models”

or see the links above

Physicality

Consider the recent WUWT comments of physicist Robert Brown of Duke U

On certainty: Truth is the Daughter of Time

So what do your results mean?

Do I understand you to assume an exponential CO2 rise to cause an exponential temperature rise?

From Beer’s law etc., the warming contribution of CO2 is logarithmic in the concentration. Consequently, wouldn’t the combined impact of an exponential rise and a logarithmic response be an approximately linear warming contribution, e.g. as per Scafetta 2011 above?

But it is very telling that much else is happening on decadal scales in more recent times.
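Whether the combination asked about above comes out linear depends on what grows exponentially. If total CO2 grew exponentially, its logarithm would be exactly linear in time. If only the excess above a natural baseline C0 grows exponentially, the logarithm is convex: the warming rate keeps rising toward an asymptotic S/D degrees per year (S per doubling, excess doubling every D years). The sketch checks this numerically. C0 = 287.3 ppm and S = 2.83 are the fitted values quoted further down this thread; the 1 ppm excess in 1790 and the 32.5-year doubling time are hypothetical placeholders, not values taken from the spreadsheet.

```python
import math

C0 = 287.3   # natural CO2 baseline, ppm (fitted value quoted in this thread)
S = 2.83     # warming per doubling of CO2, degrees C (quoted fitted value)
A = 1.0      # HYPOTHETICAL anthropogenic excess in year T0, ppm
T0 = 1790    # HYPOTHETICAL reference year
D = 32.5     # HYPOTHETICAL doubling time of the excess, years


def co2(year):
    """Natural baseline plus an exponentially growing anthropogenic excess."""
    return C0 + A * 2.0 ** ((year - T0) / D)


def warming(year):
    """S times the number of CO2 doublings above the baseline."""
    return S * math.log2(co2(year) / C0)


def slope(year):
    """Central-difference warming rate in degrees C per year."""
    return (warming(year + 1) - warming(year - 1)) / 2.0


asymptote = S / D  # limiting rate once the excess dwarfs C0
for y in (1900, 1950, 2000):
    print(y, round(slope(y), 4), "vs asymptote", round(asymptote, 4))
```

Analytically the rate is (S/D)·x/(C0 + x), with x the excess, so it rises monotonically toward S/D. The result is close to linear only once x is much larger than C0, which these placeholder numbers do not reach by 2010.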

@David L. Hagen: On AGW attribution, what is your NULL hypothesis?

That there is no essential difference between the AHH law as I formulated it and observed global warming as I defined it.

How then do you distinguish anthropogenic contributions, from CO2 etc. from that Null Hypothesis?

By its good fit to the data since the onset of serious industrialization. I would be very interested to see how well your null hypothesis fits the data over that period. My expectation would be that a good fit is not possible, but I enjoy being surprised in matters of science.

@David L. Hagen: Have you evaluated the potential to hindcast/forecast from two portions of the data and then compare the projections against the third portion?

That’s a rather low bar compared to simply deleting the last few decades. My analysis depends critically on the Keeling curve, so if you try to predict 2010 by deleting all data after 1980, you only have 22 years of Keeling curve to go on. If instead you delete a middle third while retaining the last third as you suggest then you’ve retained the critical part of the Keeling curve, which sounds like cheating.

What you really want to know is how well the model predicts when you don’t know any part of the future, not just HadCRUT3 but also the Keeling curve.

So I deleted everything after 1980 and did the fitting based only on data from 1850 to 1980. (So far only RobertInAz has shown any interest in auditing my work—he’s welcome to play with http://clim.stanford.edu/hadcrut3to1980.xls which does all this.) Here are the changes from fitting to HadCRUT3 to 2010.

The parameters are in three groups, the timing/amplitude of SAW, its shape, and the three CO2 parameters.

Timing/amplitude barely changed:

Period: 151 years (no change)

Trigger: 1924.46 –> 1924.47 (essentially no change)

Amplitude: 1.80 –> 1.81 (very small change)

The three shape parameters controlling the amplitudes of the 4th and 5th harmonics and their common phase shift also barely changed:

Amp4: 0.14 (no change)

Amp5: 0.47 –> 0.44 (slight decrease)

Phase Shift: 0.03 –> 0.032 (slight increase)

The biggest change was in estimating CO2 and its impact.

Natural CO2: 287.3 –> 281.1 (large decrease)

Sensitivity: 2.83 –> 2.43 (large decrease)

I blame these big shifts in those two parameters on our rather incomplete understanding of CO2 up to 1980, relative to what we have now, namely 1850-2010.

Hansen delay did not change:

Hansen delay: 15 years (no change)
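One way to see why the natural-CO2 and sensitivity estimates moved together is to evaluate the logarithmic CO2 term, S·log2(C/C0), for both fitted parameter sets at the same concentration. The sketch ignores SAW and the Hansen delay, and the concentrations are arbitrary test inputs rather than data, so it shows only the trade-off between the two parameters, not the full model.

```python
import math

# (natural CO2 in ppm, sensitivity in degrees C per doubling), from the lists above
FIT_TO_2010 = (287.3, 2.83)
FIT_TO_1980 = (281.1, 2.43)


def co2_warming(concentration, params):
    """Warming relative to the natural baseline: S * log2(C / C0)."""
    c0, s = params
    return s * math.log2(concentration / c0)


for ppm in (320.0, 340.0, 390.0):
    a = co2_warming(ppm, FIT_TO_2010)
    b = co2_warming(ppm, FIT_TO_1980)
    print(f"{ppm:.0f} ppm: 2010 fit {a:.3f} C, 1980 fit {b:.3f} C")
```

Solving 2.83·log2(C/287.3) = 2.43·log2(C/281.1) puts the crossover near 328 ppm. Below it the 1980 fit attributes more warming to a given concentration; above it, less.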

Is there a peer-review process for posters at AGU meetings?

no

Seems to me that this blog is doing a pretty good job already of peer-review.

25 comments from about a dozen individuals in just a couple of hours.

A process similar to this will be the future replacement for old-fashioned peer-review…and far better than Phil Jones’s gut feel:

‘ I have a feel for whether something is wrong – call it intuition. If analyses don’t seem right, look right or feel right, I say so’

aut viam inveniam aut faciam

Yeah, ain’t it great. And medical decisions will be made in the same way. Need a new liver? We better post on the blog and see what folks say. There is a difference between feedback and peer review.

I would not make too much of the lack of peer-review for the AGU general meeting. It’s been that way for years and those attending know that to be the case. I’ve viewed it as ‘well, good here is an open forum for members.’ It serves multiple purposes–good and not so good, depending on point of vision. Yawn.

‘point of vision’ –>> ‘point of view’ (oops, thinking of Persistence of Vision)

mwg must have practiced medicine in the recent past, because medical references seem to be popping up from time to time ;)

Peter

No medical practice. Medicine is interesting from a number of perspectives including uncertainty, science, rules-of-thumb, ethics, etc., and it has been a significant area of interest over the years for decision theory and artificial intelligence researchers in part because it has non-trivial elements of uncertainty, serious outcomes, extra-medical considerations, etc. The uncertainty and gravity surrounding some medical decisions and the sheer number of these decisions suggest that they might provide useful insights for some of our ‘one-chance’ environmental decisions. Just a personal bias operating here ;O)

mwg your take on the ubiquity of medical issues and their relevance to theories of general decision-making is an interesting and valid one. I have often thought, however, that the better practitioners were slower and more methodical in their approach to diagnosis and were better in patient relationships.

The very bright ones seemed more remote and bored with the whole thing and yet, to get into medical school in Australia one had to be pretty bright. There are, however, a few very hard workers who manage to get the pass levels required for Uni entrance and these ones seem generally to be better at their vocation.

@Latimer Alder: Seems to me that this blog is doing a pretty good job already of peer-review.

Lord Monckton took “peer review” quite literally.

Poster sessions are a means of gathering critical information on an idea you think is good. It might also have a hole a truck could be driven through that you missed in the blinding flash of creative genius that generated the poster session. Someone will wander up and stare at your work for a bit and then say, “have you considered …?” You will either then say “of course …” or stare back like a poleaxed steer. The obvious is often unaccounted for.

Yes. What a pity the authors didn’t take that approach before submitting the paper with this scheme to the journal:

http://judithcurry.com/2012/08/24/a-modest-proposal-for-sequestration-of-co2-in-the-antarctic

They got plenty of advice on this thread, but the paper has already been submitted and the Lead author did not accept most of the comments:

Presenting a 10-minute talk or a poster at the AGU general meeting is not viewed as anywhere near the equivalent of peer-reviewed journal publication. It serves other purposes.

Yes, though not at the journal level. It is easier to find AGU-FM posters that contradict each other than Nature articles.

Has the temperature data been considered in light of Dr. Ross McKitrick’s statistical analysis that brings doubt to its validity? I am not sure if the HadCRUT3 data has similar problems.

And…I am NOT a scientist so I suspect my question above might have already revealed as much ;-) I just wonder if the underlying data regarding warming is accurate.

Mike, in addition to data accuracy, there are quite a lot of filters and delays in the pipeline. These can work magic to get things aligned.

How do we validate the Arrhenius-Hofmann-Hansen’s Law? Any suggestions by the authors of the law anywhere in the science?

The historical record would show that one could not have a change in temperature without a change in atmospheric CO2.

There would never be a case where temperature fluctuated and CO2 didn’t or that CO2 fluctuated and temperature didn’t.

I refute it thus

http://i179.photobucket.com/albums/w318/DocMartyn/last400KYEPICADomeCCO2vsTemp.jpg

How do we validate the Arrhenius-Hofmann-Hansen’s Law?

“Control-Knob Law” is a lot easier to say.

“Control-Knob Law” is a lot easier to say.

Control is a redundancy.

LOL Doc !!!

These might be of interest to folks whose knowledge of calculus and statistics is not covered with rust, as mine is. “Harmonics” caught my eye; my recollection is that “harmonics” can cancel or amplify component signals.

Markonis, Y., and D. Koutsoyiannis. “Climatic Variability over Time Scales Spanning Nine Orders of Magnitude: Connecting Milankovitch Cycles with Hurst–Kolmogorov Dynamics.” Surveys in Geophysics (2012). doi:10.1007/s10712-012-9208-9

Related to paywalled paper Scafetta, 2012?

Scafetta, Nicola. “Multi-scale Harmonic Model for Solar and Climate Cyclical Variation Throughout the Holocene Based on Jupiter–Saturn Tidal Frequencies Plus the 11-year Solar Dynamo Cycle.” Journal of Atmospheric and Solar-Terrestrial Physics 80 (May 2012): 296–311. doi:10.1016/j.jastp.2012.02.016

http://www.sciencedirect.com/science/article/pii/S1364682612000648

Pooh, Dixie

For preprints see Nicola Scafetta’s home page, e.g., Scafetta N., 2012. Multi-scale harmonic model for solar and climate cyclical variation . . . (PDF). PS: Scafetta’s updated graph is at the bottom of the page.

For full presentation graphics see Scafetta’s presentation at the 2012 SORCE Science Meeting, Annapolis, MD, 18-19 Sept. 2012.

For his earlier technical paper & discussion see:

Scafetta N., 2012. Testing an astronomically based decadal-scale empirical harmonic climate model versus the IPCC (2007) general circulation climate models. (Science and Public Policy Institute). (PDF)

As reported by

Watts, Anthony, Y. Markonis, and Demetris Koutsoyiannis. “New Paper from Markonis and Koutsoyiannis Shows Orbital Forcings Signal in Proxy and Instrumental Records.” Watts Up With That?, November 4, 2012.

http://wattsupwiththat.com/2012/11/04/new-paper-from-markonis-and-koutsoyiannis-shows-orbital-forcings-signal-in-proxy-and-instrumental-records/

Markonis and Koutsoyiannis, 2012

http://www.itia.ntua.gr/en/docinfo/1297/

http://www.springerlink.com/index/10.1007/s10712-012-9208-9

David L. Hagen: Many thanks! :-)

Dr Pratt

A law no less, and to think Riemann only has a hypothesis to his name.

LAW

1) An empirical generalization; a statement of a biological principle that appears to be without exception at the time it is made, and has become consolidated by repeated successful testing; rule (Lincoln et al., 1990)

2) A theoretical principle deduced from particular facts, applicable to a defined group or class of phenomena, and expressible by a statement that a particular phenomenon always occurs if certain conditions be present (Oxford English Dictionary as quoted in Futuyma, 1979).

3) A set of observed regularities expressed in a concise verbal or mathematical statement. (Krimsley, 1995).

THEORY

1) The grandest synthesis of a large and important body of information about some related group of natural phenomena (Moore, 1984)

2) A body of knowledge and explanatory concepts that seek to increase our understanding (“explain”) a major phenomenon of nature (Moore, 1984).

3) A scientifically accepted general principle supported by a substantial body of evidence offered to provide an explanation of observed facts and as a basis for future discussion or investigation (Lincoln et al., 1990).

4) 1. The abstract principles of a science as distinguished from basic or applied science. 2. A reasonable explanation or assumption advanced to explain a natural phenomenon but lacking confirming proof (Steen, 1971). [NB: I don’t like this one but I include it to show you that even in “Science dictionaries” there is variation in definitions which leads to confusion].

5) A scheme or system of ideas or statements held as an explanation or account of a group of facts or phenomena; a hypothesis that has been confirmed or established by observation or experiment, and is propounded or accepted as accounting for the known facts; a statement of what are held to be the general laws, principles or causes of something known or observed. (Oxford English Dictionary, 1961; [emphasis added]).

6) An explanation for an observation or series of observations that is substantiated by a considerable body of evidence (Krimsley, 1995).

Forgot to add auxiliary hypothesis to my previous list. Given the nature of the blog topic I feel it would not be complete without it.

The introduction of an auxiliary hypothesis should always be regarded as an attempt to construct a new system; and this new system should then always be judged on the issue of whether it would, if adopted, constitute a real advance in our knowledge of the world. An example of an auxiliary hypothesis which is eminently acceptable in this sense is Pauli’s exclusion principle. An example of an unsatisfactory auxiliary hypothesis would be the contraction hypothesis of Fitzgerald and Lorentz which had no falsifiable consequences but merely served to restore the agreement between theory and experiment.

“Auxiliary hypothesis” implies that there is a main hypothesis. I prefer to think of hypotheses competing on an even field, rather than in terms of a reigning champion and a challenger.

“…but meanwhile you go to the conference with the poster you have, not the poster you want… In any event this poster is offered as a preliminary report on work still in progress…”

Are we looking at the graph with the jaundiced eye of a skeptic, for the sake of the health and credibility of future scientific endeavors, or with a shopkeeper’s desire to fill shelf space with whatever the government will buy?

Neither. I’m looking at HadCRUT3 with an eye to describing it as simply as possible. I would be thrilled if anyone could offer me a simpler description.

It’s the utility of the description you should be worried about (and this is context specific), not the simplicity. Simplicity can be useful, but not always.

The simplest description is that this is a temperature series.

I fear for the future of Western civilization. AGW prognosticating has become a smithy’s craft. From an age of technology, reason and hope we have turned a dark corner to the disinformation age where schoolteachers trade in their sheepskins for hammers to pound out the coffin nails to be used to bury science.

So if this is a work in progress, what are you shooting for? A milliKelvin?

Free from preconception and bias, what can we really know about the theory that humans cause global warming?

■We know that global warming is not proven science. Just what is the circumstantial evidence for global warming?

■We know that climate change is not unusual. It’s not even unusually rapid.

■We also know that the myth of a scientific consensus belies the actual fact of an ideologically-driven consensus supported by fraud and corruption.

■We know that the global warming alarmists have become further and further removed from the kind of rationalism that a dispassionate search for truth requires.

■We see the failure of academia and note its precipitous decline in a sense of truthfulness among AGW scientists in proportion to the reality-inspired cognitive dissonance of the confused Climatology belief system.

■We see global cooling. We see all of the other completely natural explanations for climate change that global warming alarmists ignore.

■We know now about all of the errors in historical land measurements, and how NASA is the next CRU; and, we know how more accurate evidence from satellite data does not show any dangerous global warming at all.

■We have learned that the atmospheric CO2 levels as measured at Mauna Loa are totally erroneous — the mere product of a cottage industry of fabricating data by a father and then his son.

■We all smelled the carcass of stinking fish in Copenhagen and the Leftist-lib agenda is all too clear to ignore the real truth about the global warming hoax.

Some circumstantial evidence is very strong, as when you find a trout in the milk. ~Henry David Thoreau

Wagathon, you’re a loon. Please remember not all of us are when you start tossing around “we.”

It should be implicit that when I use the royal “we,” I do not mean to include hypocrites. Is that better?

If by “better” you mean better at showing you’re a loon… yes. It is better. Otherwise, you’re saying anyone who disagrees with your stupid comments is a hypocrite, and that’s…

Loony.

For example, understanding that global warming is not a proven science and that there is no circumstantial evidence for global warming alarmism (which is why we see goats like political charlatans like Al Gore showing debunked graphs like the ‘hockey stick’ to scare the folks), and not understanding that climate change is the usual thing, not the unusual thing, and that the climate change we observed can be explained by natural causes, is the only thing that really separates we the people from superstitious and ignorant government-funded schoolteachers on the issue of global warming… that and the fact that global warming alarmists do not believe in the scientific method nor most of the principles upon which the country was founded.

I’d say that comment pretty well demonstrates it. Either people who disagree with you, including our hostess, are hypocrites and apparently ignorant, or you’re a loon.

Guess which seems more likely.

Don’t be embarrassed if you do not understand that the ‘we’ of science prefer the scientific method to guessing. Bob Carter points to what is missing–independent verification: “the essence of scientific methodology is the free sharing of data, and the unfettered and unprejudiced discussion of those data. Issuing statements of ‘consensus’ or ‘authority’ is antithetical to good science, and especially so in circumstances where the originating organizations have been established with political intent, have acted to restrict public debate or have a financial conflict of interest. Those familiar with the global warming issue will know that (IPCC) authority rules, despite it being well known that some IPCC practitioners of warming alarmism have flouted correct scientific procedures since the 1990s. And, anyway, a science truth is so not because the IPCC, the Royal Society or the Minister for Science asserts it to be so, but because it is based upon a hypothesis that has survived repeated testing by many independent scientists.”

What, successfully evading testing doesn’t count? How naively unpolitical of you!

I think this thread gives a further indication of just how desperate the warmists, including our hostess, are becoming. The more empirical data we collect, the stronger the indication that adding CO2 to the atmosphere has a negligible effect on global temperatures. This is, of course, heresy; it does not conform to the religion of CAGW. As Ronald Coase noted, “If you torture the data long enough, it will confess.” Unfortunately, torturing data is not an indictable offense in any country. Otherwise I would be delighted to make a citizen’s arrest, and turn Vaughan over to the authorities for prosecution.

I note our hostess claims that this is a technical post. I beg to differ. This is sheer propaganda.

Yes, the certainty (no significant effect) is growing and the desperation of warmists too.

Oh do come off it Jim, the use of epicycles to explain and predict complex phenomena has a long history. When they show the instruments of torture to the ‘denialists’ you will change your tune.

There is no need to torture data anymore, the drones will save us all.

The Cripwell:

I note our hostess claims that this is a technical post. I beg to differ. This is sheer propaganda.

And this is sheer denialism.

And this is nothing but a loser who has nothing better to do with his life than to go around the internet and regurgitate political slogans like ‘denialism’.

Andrew

Do you have to stop breathing when you type, I wonder?

Jim, you are a hundred percent right on carbon dioxide having a negligible effect. I count it as zero because of Ferenc Miskolczi and because of the failed predictions from the IPCC.

Miskolczi elaborated his theory in a 2007 paper according to which the greenhouse gases collaborate by feedbacks to keep the IR transmittance of the atmosphere constant. He even calculated theoretically that the optical thickness of the atmosphere in the infrared should have a value of about 1.86. This corresponds to an IR transmittance of 15 percent. He was attacked in the blogosphere because his theory requires water vapor feedback to be negative, the exact opposite of the IPCC. This was vital to these guys, who needed the positive feedback to produce their outrageous warming predictions.

The theory was up in the air until 2010, when Miskolczi was able to put it to an experimental test. What was required was a direct comparison between his theory and the greenhouse theory on infrared absorption by the atmosphere. His theory says that the IR transmittance of the atmosphere should not change when more CO2 is added to it. With the greenhouse theory the opposite is true: adding CO2 will lower the IR transmittance of the atmosphere. Using the NOAA database of weather balloon observations, Miskolczi was able to demonstrate that the IR transmittance of the atmosphere did not change for 61 years while the carbon dioxide percentage increased by 21.6 percent. This is exactly what his theory had predicted and was a clear victory over the IPCC.

If so, it follows that we should be able to show in other ways whether the greenhouse effect works or not. Fortunately we can. In 2007 the IPCC predicted from the greenhouse theory that global warming in the twenty-first century would proceed at the rate of 0.2 degrees per decade. We are now in the second decade of this century and there is no sign whatsoever of this predicted warming.

The fate of scientific theories that make wrong predictions is to be consigned to the trash heap of history. The greenhouse theory has already made two wrong predictions: first, that adding carbon dioxide to air will reduce atmospheric IR transmittance (it didn’t); and second, that it will cause twenty-first century warming (it didn’t). That’s enough to earn it a place in that trash basket of history. Greenhouse warming theory, R.I.P.
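The conversion from optical thickness to transmittance quoted above follows the Beer–Lambert relation, transmittance = exp(−τ) for optical thickness τ. A quick check of the stated figures; this verifies only the arithmetic, not the physical argument built on it.

```python
import math


def transmittance(optical_thickness):
    """Beer-Lambert: fraction of radiation transmitted through a layer."""
    return math.exp(-optical_thickness)


def optical_thickness(fraction):
    """Inverse of transmittance(): optical thickness for a given fraction."""
    return -math.log(fraction)


print(f"{transmittance(1.86):.1%}")      # 15.6%
print(f"{optical_thickness(0.15):.2f}")  # 1.90
```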

This is the most recent of 2 decades worth of work trying to identify periodic filters and decay rates that can smooth the observed trend and get a relatively straightforward function of CO2 as a result. This is either the Holy Grail or else a carefully constructed flimsy imitation. That is: if this is the signal of CO2, you have constructed the best filters to reveal it; if this is not the signal of CO2, you have constructed the best filters to reveal something conforming to someone’s expectations.

Whether you have found the signal of CO2 is as uncertain as with all the other phenomenological model fitting efforts.

The best test of models is how well they are matched by future data. What is your model for the data collected after the last of the data used in estimating model parameters? What is your model for the next 30 years, say possibly 3 models as Hansen did for 3 realistic CO2 scenarios?

What is your estimate of the transient climate response, say for a doubling of CO2 over a span of 70 years?

There is an apparent period of 1000 years or so, that produced the Minoan Warm Period, Roman Warm Period, Medieval Warm period, etc. That is, it is “apparent” to some. If you subtract out the best estimate of that periodic function, how much remains to be accounted for by CO2?

Remember to smile, wink and chuckle when you say “millikelvin accuracy” and “99.98%” aloud.
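The 70-year span in the question above matches the conventional transient-climate-response setup, in which CO2 rises at 1% per year, compounded, until it has doubled. The doubling time is ln 2 / ln 1.01:

```python
import math


def doubling_time(rate_per_year):
    """Years for a quantity growing at a fixed compound rate to double."""
    return math.log(2.0) / math.log1p(rate_per_year)


print(f"{doubling_time(0.01):.1f} years")  # 69.7 years
```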

Just to add a couple of comments in the same vein.

First why not subtract some CO2 series (possibly one with some basis in reality) from the temp series and then play around to explain the residue? Answer that question and you start to expose the problem with the reverse approach – methodologically they are much the same.

Second, why not hold out 50% of your temp time series (random selection of each data point perhaps), do your analysis on one half and check the fit to the other? Don’t even need to wait for the future to see if it works.
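The random half-split suggested above is a form of holdout validation. A minimal sketch, assuming a straight line fitted by ordinary least squares as a stand-in for whatever model is under test; the seed, the model and the synthetic data are illustrative only. One caveat: with strongly autocorrelated series such as annual temperatures, held-out years sit next to fitted ones, so a random split tends to flatter the model compared with holding out a contiguous block of years.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def holdout_rmse(xs, ys, seed=0):
    """Fit on a random half of the points; return the RMSE on the other half."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    half = len(idx) // 2
    train, test = idx[:half], idx[half:]
    slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
    errors = [ys[i] - (slope * xs[i] + intercept) for i in test]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


years = list(range(1850, 2011))
temps = [0.005 * (y - 1850) - 0.4 for y in years]  # synthetic, exactly linear
print(holdout_rmse(years, temps))  # close to zero: the model matches the data
```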

On Dec 4 your post refers to Dec 4 as “tomorrow”. Is a puzzlement!

tangled web weaving is tricky business

I mailed my post to Judith on December 3 and she said she’d post it that evening. Due to time pressure she wound up posting it on the morning of Dec. 4 without editing it accordingly. Less puzzled now, King of Siam?

Thanks for clearing that up, Anna.

Yep. I’m even whistling a happy tune, that I picked up on the docks.

From 1850 to 1980 SO2 emissions rose from almost 0 to 140,000 gigagrams per year, then dropped to 110,000 gigagrams per year around 2000, and then started to rise again as China began to burn a lot more coal.

http://sunshinehours.wordpress.com/2012/09/14/are-we-cooling-the-planet-with-so2/

You forgot to account for 7 Pinatubos rise of SO2 by 1980 and a 1 Pinatubo drop from 1980 to 2000.
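The “Pinatubo” unit can be made explicit with a unit conversion. A sketch assuming one Pinatubo is 20 Mt of SO2 (estimates for the 1991 eruption are commonly quoted at roughly 15 to 20 Mt, so this choice is an assumption), using the emission figures quoted in this exchange and 1 Mt = 1000 Gg:

```python
GG_PER_MT = 1000.0       # 1 megatonne = 1000 gigagrams
PINATUBO_MT_SO2 = 20.0   # ASSUMED size of one "Pinatubo" of SO2, Mt


def in_pinatubos(gigagrams):
    """Express an SO2 mass in gigagrams as a multiple of the assumed Pinatubo."""
    return gigagrams / GG_PER_MT / PINATUBO_MT_SO2


print(in_pinatubos(140_000))            # 1980 annual emissions: 7.0
print(in_pinatubos(140_000 - 110_000))  # 1980-2000 decline: 1.5
```

This is mass bookkeeping only: a year of near-surface emissions and a single stratospheric injection need not have the same climate effect per tonne.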

Good point, one that I had the very fortunate opportunity to discuss with Pieter Tans from NOAA Boulder this afternoon when he dropped by my poster. (Perhaps I should call him Al since he and James Butler are the two al’s in my “Hofmann et al” in the poster.)

SO2 (which cools) and brown cloud pollution (which warms) are too well correlated to separate. The only question is which dominates. MRES suggests brown cloud dominates, which Pieter had no quarrel with. Had MRES gone down instead of up it would support that SO2 dominates.
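The identifiability problem described above can be shown in a few lines. Assume, purely for illustration, a linear model in which temperature responds to two forcing series that are exactly proportional to each other (the numbers are made up). Very different coefficient pairs then produce identical fitted values, so only the net effect can be estimated from the data.

```python
def predict(b_so2, b_cloud, so2, cloud):
    """Linear response to two forcing series with coefficients b_so2, b_cloud."""
    return [b_so2 * s + b_cloud * c for s, c in zip(so2, cloud)]


so2 = [0.0, 1.0, 3.0, 6.0, 7.0, 5.5]   # HYPOTHETICAL emission index
cloud = [0.5 * s for s in so2]         # perfectly correlated companion series

# Strong SO2 cooling offset by strong brown-cloud warming...
fit_a = predict(-1.0, 3.0, so2, cloud)
# ...versus no SO2 effect at all and weaker brown-cloud warming.
fit_b = predict(0.0, 1.0, so2, cloud)
print(fit_a == fit_b)  # True: the data cannot tell these apart
```

In practice the two series are highly but not perfectly correlated, which makes the individual coefficients very uncertain rather than strictly unidentifiable; the sign of the net effect is what remains well determined.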

sunshine

The problem with the aerosol explanation for the mid-century cooling is that it raises the question:

If human aerosols (SO2) were responsible for the mid-century cooling, could it not be that their removal was largely responsible for the late century warming (rather than GHGs)?

Hans Erren has plotted this for the USA with the same line of reasoning:

http://members.casema.nl/errenwijlens/co2/usso2vst.gif

Max

“could it not be that their removal was largely responsible for the late century warming (rather than GHGs)?”

Of course.

If 1 Pinatubo of CO2 causes .5C warming, then removing 1 Pinatubo of SO2 should cause .5C of warming.

Ooops. “If 1 Pinatubo of SO2 ” (not CO2 which I typed inadvertently)

“the oceanic heat sink delays the impact of radiative forcing variations on HadCRUT3 by 15 years”

Does this mean the current temperature plateau has its root in what happened 15 years earlier?
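To make the question concrete, suppose the delay is modelled as a pure 15-year shift. This is an assumption for illustration, and the poster’s own delay model may be more elaborate. Under it, the forcing contribution to temperature in year y is whatever the forcing was in year y − 15. A plateau over 2000-2010 would then reflect forcing around 1985-1995. The function names below are hypothetical.

```python
# Hypothetical illustration: treat the ocean's delay as a pure time shift.
DELAY_YEARS = 15  # delay quoted from the poster


def lagged_forcing(forcing: dict[int, float], delay: int = DELAY_YEARS) -> dict[int, float]:
    """Return a series whose value in year y is the input forcing in year y - delay."""
    return {year + delay: value for year, value in forcing.items()}


def source_years(start: int, end: int, delay: int = DELAY_YEARS) -> range:
    """Forcing years that drive the response over [start, end] under a pure shift."""
    return range(start - delay, end - delay + 1)


print(list(source_years(2000, 2010)))  # the years 1985 through 1995
```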

Well, the bottom of the oceans is at about 4 degrees, so the water there came from polar sources: melted ice/seawater or chilled, high-salt winter brines.

The movement of cold water to the bottom is why the oceans are cold. The oceans are not cold at the bottom because they are in thermal ‘equilibrium’ with the sea bed.

Strange but true:

The average temperature of the ocean, top to bottom, is 3.9C.

The temperature below 300 meters is a fairly constant 3C all over.

The freezing temperature of seawater is -1.8C.

The maximum density of seawater occurs at -1.8C.

A black body at 4C has a radiative emittance of 335W/m2.

The power delivered to the earth from the sun is 1366W/m2 at top of atmosphere.

Projecting solar power onto a sphere reduces it by a factor of 4 to 341W/m2.

It could be just a coincidence that the average temperature of the ocean is almost precisely that of a spherical black body illuminated by a 1366W/m2 source. Then again, maybe it isn’t just a coincidence.
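The figures above follow from the Stefan-Boltzmann law, j = σT⁴. The short check below shows how close “almost precisely” is. It assumes ideal black bodies (emissivity 1) and, like the comment, no albedo. The function names are illustrative.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN = 273.15


def blackbody_emittance(temp_c: float) -> float:
    """Radiative emittance (W/m^2) of an ideal black body at temp_c degrees C."""
    return SIGMA * (temp_c + KELVIN) ** 4


def equilibrium_temp_c(flux_w_m2: float) -> float:
    """Temperature (C) at which an ideal black body emits flux_w_m2."""
    return (flux_w_m2 / SIGMA) ** 0.25 - KELVIN


solar_constant = 1366.0
mean_flux = solar_constant / 4  # disc area pi*r^2 spread over sphere area 4*pi*r^2

print(f"emittance at 4.0C: {blackbody_emittance(4.0):.1f} W/m2")  # ~334.6
print(f"emittance at 3.9C: {blackbody_emittance(3.9):.1f} W/m2")  # ~334.1
print(f"mean flux: {mean_flux:.1f} W/m2")  # 341.5
print(f"black-body temp for that flux: {equilibrium_temp_c(mean_flux):.1f} C")  # ~5.4
```

A 4C black body therefore emits about 2% less than the 341.5W/m2 mean flux. The black-body temperature for that flux is about 5.4C, some 1.5C above the 3.9C ocean average. Including Earth’s albedo of about 0.3 would lower the equilibrium temperature to roughly 255 K (−18C).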

I agree with Mr. Pratt’s general analysis but not with the extrapolation going forward. We are only now beginning to see some of the Earth system feedbacks kick in from previous decades of CO2 emissions. An ice-free summer Arctic and melting permafrost are just two of the Earth system feedbacks that will alter the future shape of the curve. This quadratic rounding or leveling may occur later in the century or next century, but we’ve got several jumps upward to come in the decades ahead. This is just getting started.

Skeptical warmist

Baseless beliefs of certainty are for religious zealots and realclimate bloggers. Here we expect more.

As Niels Bohr pointed out decades before Yogi Berra, “Prediction is very difficult, especially about the future.” Please don’t view my extrapolations as predictions; there’s a difference. As extrapolations they are perfectly fine.

Ray Pierrehumbert told me this morning at AGU that the permafrost threat was greatly overblown. I have no opinion either way, so if you disagree with Ray please take it up with him, not me.

My comment is more about Earth system feedbacks. Hansen’s last few papers have made some excellent points about these, as well as about the overall equilibrium level of the Earth system. Once big things like permafrost, Greenland and Antarctica really start to change, the feedbacks fall heavily on the positive side. The rounding of your curve of course does not and cannot include these, but they could be significant.

I’m wondering how much of an expert Ray is on permafrost, or how up to date he is on the latest research there. Really, I’m wondering; I’ve got no idea what his expertise in this very specialized area is, since the latest research would seem to contradict his comments:

http://www.newscientist.com/article/dn22549-arctic-permafrost-is-melting-faster-than-predicted.html

Hi Dr. Pratt

There is no beef in the CO2; the beef is in the mid-Atlantic ridge.

http://www.vukcevic.talktalk.net/SST-NAP.htm

It is a shame you left out the bit about the low frequencies and the ocean floor.

A shame indeed. But I only attributed ocean oscillations to that effect. Are you able to account for global warming the same way?

Northern Hemisphere, yes; ENSO, possibly. Not to within millikelvins and not globally, but to a point and to a degree where it can be taken seriously, hopefully by those to whom the true cause matters more than a personal conviction.

This doesn’t make any sense to me.

It’s making more sense to me now; the order of the graphs confused me.

Well done, and with maybe two more parameters, Dr. Pratt might be able to reduce the residual well into the microkelvin range! And congratulations to the people who have measured and processed surface temperatures for over a century on their remarkable achievement.

Actually I’ve pretty much had it with these least-square fits to a single signal with only a few degrees of freedom “explaining” everything and more, with wildly diverging conclusions but always stated with confidence. Whether done by professional scientists or amateurs. In spirit, it comes pretty close to astrology in my opinion.

Three more parameters and he can draw an elephant. Oh goody.

About right. For an R2 of 99.98% I’d calculated seven, shall we split the difference? ;)
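The elephant quip rests on a general property of least squares: for nested models, adding a parameter can never lower the in-sample R2. The minimal NumPy sketch below fits polynomials of increasing degree to pure random noise, which is a stand-in chosen for illustration and is neither HadCRUT3 nor the poster’s model. R2 climbs even though there is no signal to find.

```python
import numpy as np


def r_squared(y: np.ndarray, fitted: np.ndarray) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - fitted) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 50)
y = rng.normal(size=x.size)  # pure noise: nothing to explain

r2_by_params = {}
for degree in range(0, 8):
    coeffs = np.polyfit(x, y, degree)  # least-squares polynomial fit
    fitted = np.polyval(coeffs, x)
    r2_by_params[degree + 1] = r_squared(y, fitted)  # degree d has d + 1 parameters

for n_params, r2 in r2_by_params.items():
    print(f"{n_params} parameters: R^2 = {r2:.3f}")
```

This is why the number of free parameters has to be weighed alongside any reported R2.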

The occurrence of the records by decade [i.e., by decade by state]… makes it obvious that the 1930s were the most extreme decade and that since 1960, there have been more all-time cold records set than hot records in each decade.

However, there are only 50 states, and this is a number that isn’t large enough to give the best statistical results… [a better metric is the] year-by-year numbers of daily all-time record high temperatures from a set of 970 weather stations with at least 80 years of record… There are 365 opportunities in each year (366 in leap years) for each of the 970 stations to set a record high… Note the several years above 6000 events prior to 1940 and none above 5000 since 1954. The clear evidence is that extreme high temperatures are not increasing in frequency, but actually appear to be decreasing. The recent claims about thousands of new record high temperatures were based on stations whose length-of-record could begin as recently as 1981, thus missing the many heat waves of the 20th century.

John R. Christy, PhD, Alabama State Climatologist, The University of Alabama in Huntsville / Senate Environment and Public Works Committee,

1 August 2012 (One Page Summary)

(Data: NOAA/NCDC/USHCNv2)
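The station-based tally Christy describes can be sketched in a few lines. This is one plausible reading of the method, not his code. For each station and calendar day, it finds the highest Tmax on record and credits the year (or years, on a tie) in which that record was set, then counts credits per year. The input format, a list of (station, day-of-year, year, tmax) tuples, and the sample values are hypothetical.

```python
from collections import Counter


def standing_record_counts(obs):
    """Count, per year, the daily all-time record highs that still stand.

    obs: iterable of (station, day_of_year, year, tmax) tuples (hypothetical format).
    Ties are credited to every year that reached the record value.
    """
    best = {}  # (station, day_of_year) -> (record tmax, years that reached it)
    for station, day, year, tmax in obs:
        key = (station, day)
        if key not in best or tmax > best[key][0]:
            best[key] = (tmax, [year])
        elif tmax == best[key][0]:
            best[key][1].append(year)
    counts = Counter()
    for _, years in best.values():
        counts.update(years)
    return counts


example = [
    ("A", 1, 1930, 40.0), ("A", 1, 1990, 38.0),
    ("A", 2, 1930, 35.0), ("A", 2, 1990, 36.5),
    ("B", 1, 1936, 39.0), ("B", 1, 1985, 39.0),  # tie: both years credited
]
print(sorted(standing_record_counts(example).items()))
```

The sketch also shows why record length matters. A station whose data begin in 1981 can only ever credit years from 1981 onward, so mixing such stations with long-record ones inflates the recent counts. Restricting the set to stations with at least 80 years of data keeps every station able to credit the early heat waves.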

FAIL

A Cornell statistics professor explains why you do not smooth time series.

And if you do, you never ever use the smoothed data as input to another analytic.

With four parameters I can fit an elephant, and with five I can make him wiggle his trunk. ~John von Neumann

There are three kinds of lies: lies, damned lies, and statistics. ~Mark Twain

Lessee, first you massage away inconvenient data (sawtooth). You pretend that there was a semblance of something that could be called global temperature sensing in the late 19th and early 20th century. You smooth the questionable, massaged data into a curve that looks like about 30 zillion (rough estimate) curves in nature. Then you drop off the past 15 years of data. Then you fit it.

Are you f*cking kidding me?

Briggs, William. “Now I’m going to tell you the great truth of time series analysis. Ready? Unless the data is measured with error, you never, ever, for no reason, under no threat, SMOOTH the series! And if for some bizarre reason you do smooth it, you absolutely on pain of death do NOT use the smoothed series as input for other analyses! If the data is measured with error, you might attempt to model it (which means smooth it) in an attempt to estimate the measurement error, but even in these rare cases you have to have an outside (the learned word is “exogenous”) estimate of that error, that is, one not based on your current data.

“If, in a moment of insanity, you do smooth time series data and you do use it as input to other analyses, you dramatically increase the probability of fooling yourself! This is because smoothing induces spurious signals—signals that look real to other analytical methods. No matter what you will be too certain of your final results! Mann et al. first dramatically smoothed their series, then analyzed them separately. Regardless of whether their thesis is true—whether there really is a dramatic increase in temperature lately—it is guaranteed that they are now too certain of their conclusion.”
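Briggs’s point about spurious signals can be seen directly. This is the Slutsky-Yule effect. If independent noise is smoothed with an m-point moving average, neighbouring outputs share m − 1 of their m inputs. The smoothed series therefore has a lag-k autocorrelation of (m − k)/m for k < m, even though the input has none. The minimal NumPy sketch below uses a window and sample size chosen arbitrarily.

```python
import numpy as np


def moving_average(x: np.ndarray, window: int) -> np.ndarray:
    """Equal-weight moving average; 'valid' mode keeps only full windows."""
    return np.convolve(x, np.ones(window) / window, mode="valid")


def lag_autocorr(x: np.ndarray, lag: int) -> float:
    """Sample autocorrelation of x at the given lag."""
    x = x - x.mean()
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))


rng = np.random.default_rng(1)
noise = rng.normal(size=200_000)  # independent values: no real signal
smoothed = moving_average(noise, 5)  # 5-point box smoother

print(f"raw lag-1 autocorrelation:      {lag_autocorr(noise, 1):+.3f}")  # close to 0
print(f"smoothed lag-1 autocorrelation: {lag_autocorr(smoothed, 1):+.3f}")  # close to 4/5
print(f"smoothed lag-3 autocorrelation: {lag_autocorr(smoothed, 3):+.3f}")  # close to 2/5
```

When two such smoothed series are fed into a correlation or regression, the effective number of independent points is much smaller than the number of points. That is how smoothing makes a result look more certain than it is.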

David

It’s an old saw, but worth repeating here.

Three statisticians go hunting. When they see a rabbit, the first one shoots, missing it by a foot on the left.

The second one shoots and misses it by a foot on the right.

The third one shouts: “We’ve hit it!”

Max

The mean weight of all statisticians in the world is 3 lbs.*

*Includes urn.

Being in love means never having to say you’re sorry. Being in statistics means never having to say you’re certain.

Bazinga.

Data sets are like people. Torture them enough and they’ll tell you whatever you want them to say.

Celebrating your birthday is good for your health. Statistics prove the more of them you celebrate the longer you are likely to live.

Shoe size is highly correlated with income and education level.

A statistician is a person who draws a mathematically precise line from an unwarranted assumption to a foregone conclusion.

The great majority of people have more than the average number of legs.

Scientists use statistics as a drunk uses a lamppost: for support rather than illumination.

Vaughan, I’m not sure where you’re going with this. Does it, or will it, have any predictive value?

Of course it has no predictive value. He cut the fit off at 1995 because it fails at that point. It’s worthless.

David, it is a problem with the American education system: half of our scientists are below average.

According to a new model developed for AR5, 97% of climate scientists are above average.

And the other half are engineers.

(Again apologies for not getting to all comments promptly.)

@J Martin: Does it, or will it, have any predictive value?

Five years, perhaps not. But ten years, it strongly suggests that 2010-2020 (an odd decade since its 3rd digit is 1) will trend up, very likely strongly. Reasons:

(i) Every odd decade since 1870 has trended up more strongly than its predecessor. This holds despite there having been sustained downward trends, much stronger than in recent decades.

(ii) If SAW continues it will trend up.

(iii) SOL looks extremely likely to trend up.

(iv) AGW has been trending up for over a century.

So if 2010-2020 trends down it is hard to imagine stronger evidence than that against my hypotheses!
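Reason (i) is a mechanical check that anyone can rerun. The minimal sketch below assumes annual anomalies keyed by year and ordinary least-squares slopes over the ten years d to d+9 of each decade. The poster may define decade trends differently, for instance on filtered data. “Odd” decades are those whose tens digit is odd, as above. The series used here is synthetic and is built only to exercise the code.

```python
def ols_slope(years, values):
    """Ordinary least-squares slope of values against years."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, values))
    den = sum((x - x_mean) ** 2 for x in years)
    return num / den


def decade_trend(anomalies, start):
    """Slope over the decade start..start+9 (anomalies: dict year -> value)."""
    years = list(range(start, start + 10))
    return ols_slope(years, [anomalies[y] for y in years])


def odd_decades_beat_predecessor(anomalies, first, last):
    """For each odd decade start in [first, last], does its trend exceed the previous decade's?"""
    result = {}
    for start in range(first, last + 1, 10):
        if (start // 10) % 2 == 1:  # tens digit odd: 1870s, 1890s, ..., 2010s
            result[start] = decade_trend(anomalies, start) > decade_trend(anomalies, start - 10)
    return result


# Synthetic zigzag: odd decades rise 0.02 per year, even decades fall 0.01 per year.
synthetic, level = {}, 0.0
for year in range(1860, 1900):
    synthetic[year] = level
    level += 0.02 if (year // 10) % 2 == 1 else -0.01

print(odd_decades_beat_predecessor(synthetic, 1870, 1890))  # {1870: True, 1890: True}
```

Replacing `synthetic` with annual HadCRUT3 anomalies would test claim (i) directly. The result depends on how the decade boundaries and any pre-filtering are chosen.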

Looking further into the future is risky. Conceivably new technologies could price fossil fuel energy out of the market, in which case CO2 emissions might drop right off the Keeling curve. Or the permafrost might melt and dwarf the impact of increasing CO2.

But if neither of those things happens, and the Keeling curve stays on its predicted track, then I would predict continuation of the green curve in Figure 2, for two reasons.

1. Because the causal link between CO2 and temperature is well understood (pace those who insist otherwise).

2. Because even if we’d never heard of CO2, we’d still have this separation of multidecadal climate between Figures 1 and 2 into an oscillating component and a rising component, whose upward curvature has continually been getting steeper and shows no sign of abruptly turning into a downward curvature. Those who claim 2000-2010 proves otherwise are ignoring SOL in Figure 11, which accounts for the pause in 2000-2010. One decade gives no information at all about multidecadal climate, which is the subject of this poster.

Vaughan Pratt | December 7, 2012 at 7:50 pm

“But ten years, it strongly suggests that 2010-2020 (an odd decade since its 3rd digit is 1) will trend up, very likely strongly. Reasons:”

Let me boil those reasons down for you: numerology.

Incredible.

Are you a fan of bible codes too?

I should add that anyone who can separate multidecadal climate defined as F3(HadCRUT3) into the sum of an oscillating component and a concave-downwards trend (the opposite of what I called Observed Global Warming which is concave-upwards) will have the immediate attention of a lot of people.

The whole idea that multidecadal climate is something that can be so precariously defined reveals an academic mindset in the extreme. Those of us who insist that geophysical processes need to be carefully observed and analyzed incisively, without precious preconceptions, can only smile at such hubris. I’ll say nothing more here.

Having read this twice, my faith in climate models, already negligible, managed to sink even further.

The purveyors of climate models have a product to sell and obviously seek to protect their own jobs/careers. I am certain you can model some of the factors affecting the Earth’s climate accurately, but they are dwarfed by the number of factors about which we have little understanding, or whose existence we have not yet even recognized. The modellers want you to think otherwise and that there is no GIGO or doubt in climate science.

Climate modellers can be relied on to be shrill in defense of their ‘beautiful creations’, using derision and sneers as their principal defense.

Like an increasing number of people, I deeply resent these models, which are responsible for the hugely wasteful and expensive economic decisions taken by our gullible ‘political elite’, who are stupid enough to be taken in by their highly dubious projections.

Watts et al. demonstrate that when humans alter the immediate landscape around the thermometer stations, there is a clear warming signal due simply to those alterations, especially at night. An even more worrisome result is that the adjustment procedure for one of the popular surface temperature datasets actually increases the temperature of the rural (i.e. best) stations to match and even exceed the more urbanized (i.e. poor) stations… the adjustment process took the spurious warming of the poorer stations and spread it throughout the entire set of stations and even magnified it.

~John Christy, EPW Testimony (1 August 2012)

Christy is a professed Christian. That’s an automatic fail in warmist circles.

Maybe conversion to heathen might be a great career move.

A couple of things I did NOT know about Christy but I do now.

1) Christy was a lead author in IPCC 2001

2) His doctoral thesis advisor was Kevin Trenberth